Functional Programming in Python

Keyword call outs

Implicit and explicit line continuation: Wrap long lines using implied line continuation inside parentheses, brackets, and braces, or use explicit line continuation with the

\character to terminate a line (no space afterwards). Use PEP 8 as a reference.Comments and docstrings: There are no multiline comments in Python. Multiline strings can be used with triple single- or double-quote delimiters:

'''or""". These can also be used for docstrings. See PEP 257 for docstring conventions.Keywords: List of keywords can be found by running

import keyword

print(keyword.kwlist)whileandforloops can haveelseblocks: Whatever code is in theelseclause will be executed if thewhileorforloop never encounters abreakstatement. Hence, if you use anelseclause in awhile/forloop that does not have abreakstatement, then the code within theelseblock will be automatically executed at the end of the loop. The takeaway: theelseclause only fires if the loop terminates properly (i.e., without hitting abreakstatement).finallyalways executes: Thewhileloop starts by always evaluating the conditional expression first, which does not guarantee that your loop will run at least once. If you need to ensure that yourwhileloop runs at least once, then you can use thewhile Trueapproach. Thewhileloop has anelseclause that can be attached which executes if and only if thewhileloop terminates normally (i.e., without encountering abreakstatement). Finally,continueandbreakstatements can be used inside atrystatement inside a loop and thefinallywill always execute before eitherbreaking orcontinueing from that point forward.Iterables: In Python, an iterable is an object capable of returning values one at a time. The

forloop in Python iterates an iterable.

Preliminaries

The Zen of Python and "Idiomatic Python"

To program in "idiomatic Python", we need to embrace the Zen of Python. From PEP 8:

Beautiful is better than ugly.

Explicit is better than implicit.

Simple is better than complex.

Complex is better than complicated.

Flat is better than nested.

Sparse is better than dense.

Readability counts.

Special cases aren't special enough to break the rules.

Although practicality beats purity.

Errors should never pass silently.

Unless explicitly silenced.

In the face of ambiguity, refuse the temptation to guess.

There should be one--and preferably only one--obvious way to do it.

Although that way may not be obvious at first unless you're Dutch.

Now is better than never.

Although never is often better than *right* now.

If the implementation is hard to explain, it's a bad idea.

If the implementation is easy to explain, it may be a good idea.

Namespaces are one honking great idea -- let's do more of those!

This listing can also be obtained by running import this in the Python interpreter.

Basics (Python refresher)

The Python type hierarchy

The following is a subset of the Python type hierarchy but the subset you will deal with most often.

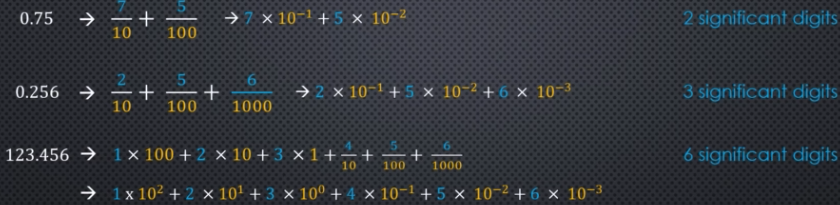

Numbers

| Integral | Non-Integral |

|---|---|

| Integers | Floats |

| Booleans | Complex |

| Decimals | |

| Fractions |

Collections

| Sequences | Sets | Mappings |

|---|---|---|

| Lists (mutable) | Sets (mutable) | Dictionaries |

| Tuples (immutable) | Frozen Sets (immutable) | |

| Strings (immutable) |

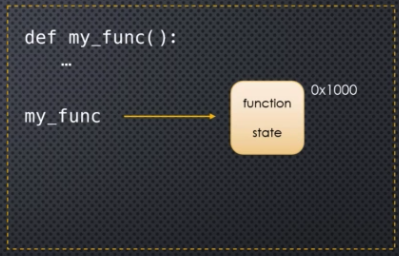

Callables

A callable is anything you can "call" or invoke:

- User-defined functions

- Generators

- Classes

- Instance methods

- Class instances (

__call__()) - Built-in functions (e.g.,

len(),open()) - Built-in methods (e.g.,

my_list.append(x))

Singletons

NoneNotImplemented- Ellipsis (

...)

Multi-line statements and strings

Overview

A Python program is basically nothing more than just a text file that contains physical lines of code. These physical lines of code are often separated by physical newlines that are inserted by you by pressing the Enter key on your keyboard. The physical code is processed by the Python compiler, and it is then combined to produce logical lines of code which are then tokenized so that the Python compiler and interpreter can understand what your code is trying to do and execute it accordingly:

Python program

-> physical lines of code

-> logical lines of code

-> tokenized

-> executed

Physical lines of code end with a physical newline character and once the code has been processed, logical lines of code will end with a logical NEWLINE token. Exactly how this gets done is not really the important part. That's what the Python development team is for!

The important thing for us to understand is that there is a difference between a physical newline character and a logical NEWLINE token. They're not necessarily the same thing. Very often they are but not always. Sometimes physical newlines are ignored and they are ignored in order to combine multiple physical lines into a single logical line of code which is then terminated by a logical newline token. This is what allows us to write code over multiple lines that technically should be written as a single line (kind of like a SQL query). But we want to be able to write code over multiple lines for the sake of readability. It allows us to make our code easier to read and understand. The Python interpreter and compiler really doesn't care whether you write your code over one line, two lines, thousands of lines, etc. At the end of the day, it will recombine lines of code into single lines as needed, it will tokenize everything, etc. It does not care at all about human readability. But we humans should care about readability!

Implicit and explicit conversions

The conversion between physical and logical newlines (i.e., the removal of physical newline characters) is done either implicitly or explicitly. Many times we can get by and let implicit conversions do their thing. But sometimes we have to be explicit.

Implicit examples

The following expressions may be written across multiple physical lines (they also support inline comments):

- list literals:

[] - tuple literals:

() - dictionary literals:

{} - set literals:

{} - function arguments / parameters

So a list like [1,2,3] does not have to be written as a single line. It can be written across several lines (i.e., across physical newlines):

[1,

2,

3]

Of course, it makes little sense in this example to write the list like this, but you can imagine scenarios where maybe you have lists with variables with very long names. Sometimes it might be much clearer to write a list using physical newlines. Those physical newlines will be implicitly removed by Pyhon.

You can also add comments to the end of each physical line (before the physical newline character):

[

1, # first element

2, # second element

3 # third element

]

Although not as pretty or conventional, you can also write the above in the following manner (both approaches are valid):

[

1 # first element

,2 # second element

,3 # third element

]

Note that if you do use comments, however, you must close off the collection on a new line. For example, the following will not work since the closing ] is actually part of the comment:

[1, # first element

2 #second element]

This works the same way for tuples

a = (

1, # first element

2, # second element

3, # third element

)

and sets

a = {

1, # first element

2, # second element

}

and dictionaries

a = {

'key1': 'value1', #comment

'key2': 'value2' #comment

}

We can also break up function arguments and parameters (which can be quite useful for when your function has numerous parameters or the arguments provided have long names);

def my_func(a, # arg1 comment

b, # arg2 comment

c): # arg3 comment

print(a, b, c)

my_func(

10, # param1 comment

20, # param2 comment

30 # param3comment

)

All of the examples above involve implicit conversion of physically multi-line code into logically single-line code (including the implicit stripping of comments and the like).

Explicit examples

The PEP 8 -- Style Guide for Python Code is worth taking a look at. In particular, note what it says about the preferred way of wrapping long lines:

The preferred way of wrapping long lines is by using Python's implied line continuation inside parentheses, brackets and braces. Long lines can be broken over multiple lines by wrapping expressions in parentheses. These should be used in preference to using a backslash for line continuation.

Backslashes may still be appropriate at times. For example, long, multiple

with-statements cannot use implicit continuation, so backslashes are acceptable:

with open('/path/to/some/file/you/want/to/read') as file_1, \

open('/path/to/some/file/being/written', 'w') as file_2:

file_2.write(file_1.read())

As can be seen above, when necessary, you can use the backslash character \ (with no trailing space after the backslash) to explicitly create multi-line statements:

a = 10

b = 20

c = 30

if a > 5 \

and b > 10 \

and c > 20:

print('yes!!')

The identation in explicitly continued lines does not matter:

a = 10

b = 20

c = 30

if a > 5 \

and b > 10 \

and c > 20:

print('yes!!')

The takeaway from all of this is that multi-line statements (i.e., as opposed to expressions within braces or brackets) are not implicitly converted to a single logical line in Python. Something like

if a

and b

and c:

will not work. Python will throw an error. It will place a NEWLINE token after if a, but it will expect a colon.

One workaround for using the multi-line format is to use PEP 8's advice and take advantage of "Python's implied line continuation inside parentheses, brackets and braces":

a = 10

b = 20

c = 30

if (a > 5

and b > 10 # this comment is fine

and c > 20):

print('yes!!')

Or we can be explicit with our multi-line format and use the \ character (note, however, that comments cannot be part of a statement, not even a multi-line statement, so if you use \ then your comments will need to go above where you start using \ characters or below the last occurrence):

# this comment is fine

if a \

and b \ # this comment is NOT fine

and c: # this comment is fine

Multi-line strings

You can create multi-line strings by using triple delimiters (single or double quotes):

>>> a = '''this is

... a multi-line string'''

>>> a

'this is\na multi-line string'

Note how the physical newline we typed in the multi-line string was preserved (i.e., the physical newline character \n is preserved).

The results are somewhat less visible if we run this as part of a script:

a = '''this is

a multi-line string'''

print(a)

this is

a multi-line string

In general, any character you type is preserved. You can also mix in escaped characters like any normal string.

a = """some items:\n

1. item 1

2. item 2"""

print(a)

some items:

1. item 1

2. item 2

Be careful if you indent your multi-line strings. The extra spaces are preserved!

def my_func():

a = '''a multi-line string

that is actually indented in the second line'''

return a

print(my_func())

a multi-line string

that is actually indented in the second line

In such a case, it is appropriate to manually remove what otherwise might look like normal indenting:

def my_func():

a = '''a multi-line string

that is actually not indented in the second line'''

return a

print(my_func())

a multi-line string

that is actually not indented in the second line

Important to note is that these multi-line strings are not comments--they are real strings and, unlike comments, are part of your compiled code. They are, however, sometimes used to create comments, such as docstrings (something that will be covered later). See the article PEP 257 -- Docstring Conventions for more.

In general, use # to comment your code, and use multi-line strings only when actually needed (like for docstrings).

Also, there are no multi-line comments in Python. You simply have to use a # on every line.

# this is

# a multi-line

# comment

The following works, but the above formatting is preferrable:

# this is

# a multi-line

# comment

Variable names

We will mostly look at rules and conventions related to identifier names. We can start with the fact that identifiers are case-sensitive. So the following are all different identifiers:

my_var

my_Var

ham

Ham

Rules

Identifiers must follow certain rules and should follow certain conventions:

Strict rules

start with an underscore (

_) or letter (a-z,A-Z) You can technically go beyond this, but it's wise to stick with this character set.followed by any number of underscores (

_), letters (a-z,A-Z), or digits (0-9)cannot be reserved words. Most of these words you'll already be familiar with (e.g.,

True,False,while, etc.), but you can find the complete list by using thekeywordmodule that is part of the standard library:import keyword

print(keyword.kwlist)This gives us the following list (string quotes removed):

[False, None, True, __peg_parser__, and, as, assert, async, await, break, class, continue, def, del, elif, else, except, finally, for, from, global, if, import, in, is, lambda, nonlocal, not, or, pass, raise, return, try, while, with, yield]All of the following are legal names although the last three conventionally have a special meaning associated with them:

var

my_var

index1

index_1

_var

__var

__lt__

Implied rules (conventions)

_my_var: It is convention to use a single underscore as part of an identifier to indicate "internal use" or "private" objects. This is a way to communicate to anyone looking at or using your code that they really shouldn't mess around with that variable. Worth noting is that objects named this way will not get imported by a statement such as the following:from module import *.__my_var: The double underscore or "dunder" is used for so-called name mangling. This basically changes your variable in a very set way that can be useful for inheritance chains if you have the same name, say attribute or property in a class, and you have the same attribute in a chain of classes, then how do you differentiate which is which? This is where name mangling comes in. When you want name mangling to occur, you use__in front of your variable name (short version:__is used to mangle class attribues which is something useful in inheritance chains).__my_var__: Double underscores that both start and end an identifier name are used for system-defined names that have a special meaning to the interpreter. For example, suppose you have instancesxandyof a class that you have created. If you try to evaluatex < y, then the Python interpreter will automatically look for an__lt__method defined on the class for whichxandyare instances and runx.__lt__(y). Point being: don't invent so-called magic methods (i.e., double underscore methods)! Stick to the ones pre-defined by Python. Which ones exist? The special method names article in the Python language reference is a good place to look. There you can find more information about frequently used dunder methods like__init__and others.- Packages: Short, all-lowercase names. Preferably no underscores. So something like

utilitieswould work well. - Modules: Short, all-lowercase names. Can have underscores. Something like

db_utilsordbutilswould be fine. - Classes: CapWords (upper camel case) convention. So something like

BankAccountwould work fine. - Functions: Lowercase, words separated by underscores (snake_case). So something like

open_accountwould work well. - Variables: Lowercase, words separated by underscores (snake_case). So something like

account_idwould work well. - Constants: All-uppercase, words separated by underscores. So something like

MIN_APRwould work well.

The while loop

Python does not have a do ... while construction like some other langauges have to ensure the while loop fires at least once. But it is easy to mimic:

i = 5

while True:

print(i)

if i >= 5:

break

i += 1

When it comes to prompts especially, you may find yourself doing something not quite DRY:

min_length = 2

name = input("Please enter your name: ")

while not (len(name) >= min_length and name.isprintable() and name.isalpha()):

name = input("Please enter your name: ")

print("Hello, {0}".format(name))

We can clean this up fairly easily:

min_length = 2

while True:

name = input("Please enter your name: ")

if (len(name) >= min_length and name.isprintable() and name.isalpha()):

break

print("Hello, {0}".format(name))

The break statement breaks out of the loop and terminates the loop immediately. The continue statement, however, is a little bit different. It's like saying, "Hey, the current iteration of the loop we're in right now ... I want you to break out of that. Skip everything that comes after this continue statement and go back to the beginning of the loop."

A trivial but illustrative example:

a = 0

while a < 10:

a += 1

if a % 2 == 0:

continue

print(a)

This results in printing off 1, 3, 5, 7, and 9. The continue statement ensures the print statement is never reached for even numbers, but the loop continues to run.

Something interesting about while loops in Python is that you can use an else statement with them. The else clause of the while loop will execute if and only if the while loop did not encounter a break statement; that is, if the loop ran normally, then it will end up executing the code inside the else statement. This can be quite useful.

A simple example might be something like the following:

l = [1, 2, 3]

val = 10

found = False

idx = 0

while idx < len(l):

if l[idx] == val:

found = True

break

idx += 1

if not found:

l.append(val)

print(l)

This looks through the list l and adds the val if it is not found (we could obviously do this in more effective ways but the goal here is to use a while loop). The else statement could be helpful here:

l = [1, 2, 3]

val = 10

idx = 0

while idx < len(l):

if l[idx] == val:

break

idx += 1

else:

l.append(val)

print(l)

The outcome is the same for both of these programs. The takeaway: the else clause for a while loop only fires if the while loop terminates properly (i.e., without hitting a break statement). Hence, something like

i = 1

while i < 10:

print(i)

i += 1

else:

print('Woohoo!')

will have the following output:

1

2

3

4

5

6

7

8

9

Woohoo!

Since there is no possibility of running into a break in the while loop above, the code in the else statement will automatically be executed once the while loop terminates.

break, continue, and try statements

We are now going to look at what happens when you try to use break and continue when you are inside a try statement inside a loop. So the question is: What happens? Recall that for try statements we have try...except...finally, where try is what we are trying to do, except is where we can trap specific exceptions, but there is also a finally clause that exsits that always runs even when there is an exception.

For example:

a = 10

b = 1

try:

a/b

print(a/b)

except ZeroDivisionError:

print('Division by zero')

finally:

print('This always executes.')

Output:

10.0

This always executes.

And if we change b to 0 to have

a = 10

b = 0

try:

a/b

print(a/b)

except ZeroDivisionError:

print('Division by zero')

finally:

print('This always executes.')

then we will get

Division by zero

This always executes.

Now consider the following code block:

a = 0

b = 2

while a < 4:

print('-------------')

a += 1

b -= 1

try:

a/b

except ZeroDivisionError:

print("{0}, {1} - division by 0".format(a,b))

continue

finally:

print('{0}, {1} - always executes'.format(a,b))

print("{0}, {1} - main loop".format(a,b))

We end up with the following output:

-------------

1, 1 - always executes

1, 1 - main loop

-------------

2, 0 - division by 0

2, 0 - always executes

-------------

3, -1 - always executes

3, -1 - main loop

-------------

4, -2 - always executes

4, -2 - main loop

Note how the finally block executes even though we had a continue statement. If we replace continue with break, then the finally block will still execute and then the loop will be broken out of:

a = 0

b = 2

while a < 4:

print('-------------')

a += 1

b -= 1

try:

a/b

except ZeroDivisionError:

print("{0}, {1} - division by 0".format(a,b))

break

finally:

print('{0}, {1} - always executes'.format(a,b))

print("{0}, {1} - main loop".format(a,b))

This gives us

-------------

1, 1 - always executes

1, 1 - main loop

-------------

2, 0 - division by 0

2, 0 - always executes

The upshot of all this is that finally will always run! Exception or not. break or continue or not! We can combine this useful information with the else clause if we want. Recall when else executes: Whenever a while loop terminates normally (i.e., no break clause is encountered).

Recap: The while loop starts by always evaluating the conditional expression first, which does not guarantee that your loop will run at least once. If you need to ensure that your while loop runs at least once, then you can use the while True approach. The while loop has an else clause that can be attached which executes if and only if the while loop terminates normally (i.e., without encountering a break statement). Finally, continue and break statements can be used inside a try statement inside a loop and the finally will always execute before either breaking or continueing from that point forward.

The for loop

The for loop construct in most C-like languages is as follows:

for (int i = 0; i < 5; i++) { ... }

The int i = 0 statement is executed at the beginning of the loop and the condition i < 5 is checked before the loop runs every time, and the last statement declares what happens at the end of each iteration. Python has no such construct!

To understand the for loop construct in Python, we first need to understand what an iterable is:

In Python, an iterable is an object capable of returning values one at a time.

We could construct something similar to the for loop syntax above in Python:

i = 0

while i < 5:

print(i)

i += 1

i = None

The last part is to mimic how i goes out of scope at the end of the for loop in most languages. We really don't need to do this in practice though (everything gets cleaned up for us).

In any case, this is obviously not what we do in Python to use for loops. The for statement in Python is something to iterate over an iterable. A very simple iterable we can create is by using the range function:

for i in range(5):

print(i)

The important takeaway here is that for iterates an iterable in Python. In fact, range is neither a collection nor a list. It is specifically an iterable. Of course, in general, lists, strings, tuples, etc., are iterables and can be iterated over.

We can use break and continue in a for statement just like we used them in a while loop previously. We can also attach an else to a for loop in Python which will, just like with the while loop, only execute if a break statement is not encountered:

for i in range(1,5):

print(i)

if i % 7 == 0:

print('multiple of 7 found')

break

else:

print('no multiples of 7 in the range')

1

2

3

4

no multiples of 7 in the range

We got the output above because no multiple of 7 was found in the iterable range(1,5) which yielded iterated values of 1, 2, 3, and 4, respectively. Since the break statement was not encountered, the else block was executed.

Something worth remarking on is how to get at indexes for indexed iterables (e.g., lists, tuples, and strings are indexed iterables while, for example, sets and dictionaries are not). To illustrate, you might normally iterate through a string like so:

s = 'hello'

for c in s:

print(c)

But, of course, c here refers to the item being iterated from the iterable. Not the index of the item. We could get at this index in the following way:

s = 'hello'

i = 0

for c in s:

print(i,c)

i += 1

Sure, this works, but it's kind of annoying. We can use something a little similar:

s = 'hello'

for i in range(len(s)):

print(i, s[i])

This also works, but there is a better way of going about this.

s = 'hello'

for i, c in enumerate(s):

print(i, c)

Note that the built-in function enumerate returns a tuple where the first element of the tuple is an index while the second element of the tuple is the actual value that we are getting back from the iteration.

Classes

We can create a class in Python using the class keyword, but we need an initializer (i.e., a way to initialize or create an instance of our class). This is done or implemented in Python using the __init__ method. This method runs once the object has been created--so there are actually two steps when we create an instance of a class. We first have this "new" step (i.e., creation of a new object), but then the initializer is a step after that. After the object has been created, we can go on to the initialization phase.

So what happens in Python with these instance methods is that the first argument of the method is the object itself. So our first argument is going to be the object itself, and this is conventionally denoted by self, but you can call it anything you like:

class Rectangle:

def __init__(new_object_that_was_just_created, ...)

But it is customary to use self to denote the instance or object just created. For the Rectangle class we're going to require a width and height:

class Rectangle:

def __init__(self, width, height):

We are now going to set properties or attributes of the class--in this case they are going to be value attributes so we call them properties as opposed to methods which are callables essentially:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

It's worth noting that Python takes care of actually passing self to __init__ upon class instantiation. This is not something we have to do ourselves.

To clearly illustrate everything described about __init__ and self so far, consider the following code block:

object_set_atts = set(dir(object))

class Rectangle:

def __init__(self, width, height):

print('----- SELF OBJECT IN MEMORY -----')

print(self, end='\n\n')

print('----- DEFAULT OBJECT ATTRIBUTES -----')

print(object_set_atts, end='\n\n')

print('----- SELF OBJECT ATTRIBUTES BEFORE MODIFICATION -----')

print(set(dir(self)), end='\n\n')

print('ATTRIBUTES IN SELF NOT IN OBJECT')

print(set(dir(self)).difference(object_set_atts), end='\n\n')

print('ATTRIBUTES IN OBJECT NOT IN SELF')

print(object_set_atts.difference(set(dir(self))), end='\n\n')

self.width = width

self.height = height

print('ATTRIBUTES IN SELF NOT IN OBJECT AFTER ASSIGNMENT')

print(set(dir(self)).difference(object_set_atts), end='\n\n')

my_rect = Rectangle(4,6)

print(issubclass(Rectangle, object))

We get the following as output:

----- SELF OBJECT IN MEMORY -----

<__main__.Rectangle object at 0x10cbadfd0>

----- DEFAULT OBJECT ATTRIBUTES -----

{'__sizeof__', '__eq__', '__init__', '__gt__', '__lt__', '__reduce_ex__', '__le__', '__subclasshook__', '__class__', '__ge__', '__delattr__', '__ne__', '__dir__', '__repr__', '__init_subclass__', '__setattr__', '__reduce__', '__getattribute__', '__doc__', '__format__', '__str__', '__hash__', '__new__'}

----- SELF OBJECT ATTRIBUTES BEFORE MODIFICATION -----

{'__sizeof__', '__eq__', '__init__', '__gt__', '__lt__', '__reduce_ex__', '__le__', '__subclasshook__', '__class__', '__ge__', '__delattr__', '__ne__', '__dir__', '__dict__', '__repr__', '__init_subclass__', '__module__', '__setattr__', '__reduce__', '__getattribute__', '__doc__', '__format__', '__weakref__', '__str__', '__hash__', '__new__'}

ATTRIBUTES IN SELF NOT IN OBJECT

{'__module__', '__dict__', '__weakref__'}

ATTRIBUTES IN OBJECT NOT IN SELF

set()

ATTRIBUTES IN SELF NOT IN OBJECT AFTER ASSIGNMENT

{'height', '__weakref__', '__dict__', '__module__', 'width'}

True

Some points worth noting:

- dir: Concerning

dir, recall that if no argument is passed, thendir()returns the list of names in the current local scope (namespace). When anyobjectwith attributes is passed todir([object])as an argument,dir(object)returns the list of attribute names associated with thatobject. Its result includes inherited attributes, and is sorted. So the line at the beginning of the code block,object_set_atts = set(dir(object)), simply returns a set (the returned list is turned into a set to facilitate set operations) of the attribute names associated with the baseobjectclass. selfbefore modification: We initialize an instance of theRectangleclass by means of the linemy_rect = Rectangle(4,6). TheRectangle's__init__method fires, and we immediately see how Python passed in the newly createdselfobject to the__init__method and where it lives in memory:<__main__.Rectangle object at 0x10cbadfd0>. We then see all the attributes that are part of the baseobjectclass and howselfclearly inherits everything from this class and then more upon class instantiation. Specifically, attributes that are part of our newly createdselfobject that are not part of the baseobjectclass are__module__,__dict__, and__weakref__.selfafter slight modification: Once we declareself.width = widthandself.height = heightinside__init__and then compareselfwith the baseobjectclass, we see thatselfhas more attributes now:heightandwidth. This should be expected.Rectangleis subclass ofobject: Finally, once an instance of ourRectangleclass has been instantiated, we test whether or notRectangleis a subclass of the baseobjectclass. It is.

Let's now consider adding attributes which are callables (i.e., also known as "methods"). Specifically, let's add an area method and see what our Rectangle instance looks like:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

my_rect = Rectangle(4,6)

print(dir(my_rect))

This gives us the following:

['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'area', 'height', 'width']

Worth noting here is how the listing of attributes for the my_rect instance differs compared to our previous listing of self in the __init__. If we modify the code block above to be

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

print(dir(self))

return self.width * self.height

my_rect = Rectangle(4,6)

print(dir(my_rect))

print(my_rect.area())

then we get the following:

['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'area', 'height', 'width']

['__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', 'area', 'height', 'width']

24

Note that what all is printed out for my_rect and self within the area method is the same. It's also worth noting why, when methods depend on properties, we do not have to pass those properties explicitly to the method since the self object has these properties. That is, we can write

def area(self):

return self.width * self.height

as opposed to

def area(self, width, height):

return self.width * self.height

or some alternative even though the area method depends on those values. Those properties or values need to exist on the self object! And they do by means of the initializer __init__.

Now consider an updated version of the Rectangle class:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

r1 = Rectangle(10,20)

print(str(r1))

Python allows us to often print objects off as strings. What happens if we run print(str(r1))? We'll get something like <__main__.Rectangle object at 0x102ad5fd0>. But this is not a very informative or useful format unless we are interested in what kind of object we have and at what memory address. We could, of course, make our own "to string" method:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

def to_string(self):

return 'Rectangle: width={0}, height={1}'.format(self.width, self.height)

r1 = Rectangle(10,20)

and call it accordingly: print(r1.to_string()). But this is kind of annoying. It would be nice if we could use the built in str method. How could we do that? This is where Python is really nice in how it allows us to "overload" functions and methods. For example, what does str mean? By default, str is just going to look at the class and the memory address of the object. So how do we essentially override this and write our own definition for str?

It's very simple. We have these special methods that some call "magic" methods, but there's nothing really magical about them. They're well-documented and they're well-known. The special method name we need to use here is __str__. We can adjust our code accordingly:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

def __str__(self):

return 'Rectangle: width={0}, height={1}'.format(self.width, self.height)

r1 = Rectangle(10,20)

If we ran the above in a Python interactive shell, and then just entered r1, then we would see something like <__main__.Rectangle object at 0x10f926130> printed to the console (not str(r1)). If we want to change this behavior, then we need to implement another special method, namely the repr method. Just as str(object) is a built in function, repr(object) is a built in as well:

repr(object)returns the lower-level "as-code" printable string representation ofobject. The string generally takes a form potentially parseable byeval(), or gives more details thanstr().

So we could adjust our Rectangle class further to define how repr should work. Just as we modified __str__ to specify how str should work with an instance of the Rectangle class, we could modify __repr__ to specify how repr should work with an instance of the Rectangle class. As might be guessed by its name, __repr__ essentially stands for "representation" and the representation, if possible, is typically a string that shows how you would build the object up again. In some cases, there are too many variables and you can't really do it effectively, but we can in our case. So we can update our Rectangle class like so:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

def __str__(self):

return 'Rectangle: width={0}, height={1}'.format(self.width, self.height)

def __repr__(self):

return 'Rectangle({0}, {1})'.format(self.width, self.height)

r1 = Rectangle(10, 20)

So if we now entered r1 in an interactive Python shell session, we would get Rectangle(10, 20).

What about testing for equality? If we ran

r1 = Rectangle(10, 20)

r2 = Rectangle(10, 20)

print(r1 == r2)

we would get False as the output. r1 and r2 occupy different memory addresses. But as a user of the Rectangle class, you would really like to consider r1 and r2 as defined above to be equal. How can we make this happen? We can do this by means of another special nethod, namely __eq__. This allows us to specify and define how we compare objects to each other:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self.width, self.height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self.width, self.height)

def __eq__(self, other):

return self.width == other.width and self.height == other.height

# return (self.width, self.height) == (other.width, other.height)

r1 = Rectangle(10, 20)

r2 = Rectangle(10, 20)

print(r1 == r2)

Now the output would be True. The last line

return (self.width, self.height) == (other.width, other.height)

is simply another way of making the comparison. If we ran r1 is not r2 then we would get True, and this makes sense because different memory addresses are being occupied, but r1 == r2 now returns True as desired. What is not as desired is if we did something like r1 == 100. We would get an AttributeError since __eq__ for the Rectangle class is trying to find the height and width attributes for the other argument, which is provided as 100 in this case. And of course integers don't have such properties! So we need to make sure other is actually a Rectangle class instance:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self.width, self.height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self.width, self.height)

def __eq__(self, other):

if isinstance(other, Rectangle):

return self.width == other.width and self.height == other.height

else:

return False

This is now a bit cleaner. We can write all sorts of different kinds of comparison checks for our class (e.g., less than, great than, etc.). In fact, let's write a "less than" one which we define as one rectangle being less than another when its area has a smaller quantity:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def area(self):

return self.width * self.height

def perimeter(self):

return 2 * (self.width + self.height)

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self.width, self.height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self.width, self.height)

def __eq__(self, other):

if isinstance(other, Rectangle):

return self.width == other.width and self.height == other.height

else:

return False

def __lt__(self, other):

if isinstance(other, Rectangle):

return self.area() < other.area()

else:

return NotImplemented

What would then be the output from running

r1 = Rectangle(10, 20)

r2 = Rectangle(100, 200)

print(r1 == r2)

print(r1 < r2)

print(r1 > r2)

We would get

False

True

False

Why does the comparison r1 > r2 not throw an error? What happens is Python calls the __gt__ method for r1. It wasn't implemented. So what it does for us is it basically flips the comparison around and asks what r2 < r1 might be. Since __lt__ is implemented, we get False per the implementation of __lt__ in our Rectangle class.

Let's now consider a somewhat pared down version of the Rectangle class:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self.width, self.height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self.width, self.height)

def __eq__(self, other):

if isinstance(other, Rectangle):

return self.width == other.width and self.height == other.height

else:

return False

r1 = Rectangle(10, 20)

print(r1.width)

r1.width = -100

print(r1.width)

What would we get from running the above code? We would get

10

-100

The point is that we are allowing direct access to the width property as well as the height property; users can get as well as set the width and height for any given rectangle. But we may want to put logic in our code to prevent people from being able to give a rectangle a non-positive width or height. The way we prevent behavior likes this in Python (and in many other languages) is we define so-called getter and setter methods.

Our updated code with getter and setter methods for width and height might look like the following:

class Rectangle:

def __init__(self, width, height):

self._width = width

self._height = height

def get_width(self):

return self._width

def set_width(self,width):

if width <= 0:

raise ValueError('Width must be positive.')

else:

self._width = width

def get_height(self):

return self._height

def set_height(self,height):

if height <= 0:

raise ValueError('Height must be positive.')

else:

self._height = height

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self._width, self._height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self._width, self._height)

def __eq__(self, other):

if isinstance(other, Rectangle):

return self._width == other._width and self._height == other._height

else:

return False

Note the general change from self.width and self.height to self._width and self._height, respectively. This is to indicate that width and height should be considered as private variables. Of course, if we made such a change as we did above when our application was in production and many of our team members might be using the Rectangle class, then that would break a ton of code. As such, it is not a bad idea to generally start by defining private variables and using setters and getters from the beginning. The setters and getters will often be super basic such as

def get_width(self):

return self._width

def set_width(self, width):

self._width = width

You simply make modifications as you move along in your project. This is the typical of way doing this if you come from something like a Java background. In Python, this whole business of starting like that (i.e., by writing getters and setters from the beginning) does not really matter. Let's look at the Pythonic way of doing this. Let's go back to our original class:

class Rectangle:

def __init__(self, width, height):

self.width = width

self.height = height

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self.width, self.height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self.width, self.height)

def __eq__(self, other):

if isinstance(other, Rectangle):

return self.width == other.width and self.height == other.height

else:

return False

So we can read and set width and/or height however we please (just as before). Let's say this is what we released into production. But now we want to change this behavior. What's the solution? The solution is as expressed before in terms of writing a setter and getter, but we want to do this without breaking our backward compatibility. Python allows us to do this. That is why we do not have to start by writing getters and setters right away. In Python, unless you know you have a specific reason to implement a specific getter or setter that has extra logic, then you do not implement them. You just leave the properties bare. Remember that no variable is truly private in Python. And don't force people to use a getter or setter unless they have to. In Python, what happens is they're not really even aware that they're using a getter or setter.

How do we do this? We can use decorators to our advantage (to be discussed more later). Our new class might look like the following:

class Rectangle:

def __init__(self, width, height):

self._width = width

self._height = height

@property

def width(self):

return self._width

@width.setter

def width(self, width):

if width < 0:

raise ValueError('Width must be positive.')

else:

self._width = width

@property

def height(self):

return self._height

@height.setter

def height(self, height):

if height < 0:

raise ValueError('Height must be positive.')

else:

self._height = height

def __str__(self):

return "Rectangle: width={0}, height={1}".format(self.width, self.height)

def __repr__(self):

return "Rectangle({0}, {1})".format(self.width, self.height)

def __eq__(self, other):

if isinstance(other, Rectangle):

return self.width == other.width and self.height == other.height

else:

return False

r1 = Rectangle(10, 20)

print(r1.width)

r1.width = -100

print(r1.width)

Note how backward compatibility is preserved even if we change what is going on in the initializer. The reason the other methods still work is because when we call something like self.width from within __str__ we are actually calling the getter we just defined.

Even within our new __init__ method we could have self.height = height which would call the height setter to implement the restrictive logic we might want; otherwise, we could have something like r1 = Rectange(-100,10) happening for the class defined above. We don't want to allow that. So our __init__ method could go back to what we had before where the assignments would use the setter methods:

def __init__(self, width, height):

self.width = width

self.height = height

This results in now calling the setter methods for both width and height.

Variables and memory

Variables are memory references

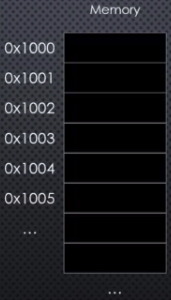

Variables are memory references. We can think of memory as a series of slots or boxes that exist in our computer from which we can store and retrieve data:

We can also compare it to the mail system where you always have to write an address on your letter so it can be effectively delivered. It's pretty much the same in computers where we need addresses atached to our memory slots. We need memory addresses and they can be numbered rather arbitrarily:

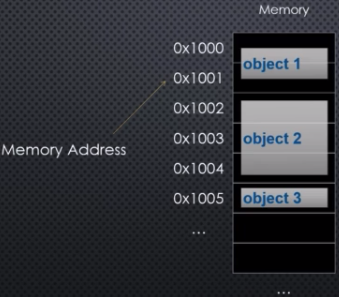

When we actually store data in memory addresses, we may need more than one shelf or address in which to store an object (everything in Python is an object):

As long as we know where an object starts in memory, that is good enough. And this is called the heap. Storing and retrieving objects from the heap is taken care of for us by something called the Python memory manager.

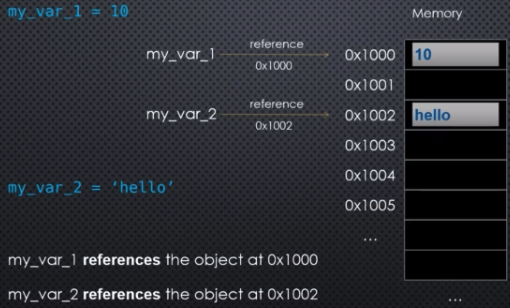

To see how this works, consider what happens when you declare a variable as simple as the following:

my_var_1 = 10

When you execute this statement, the first thing Python does is create an object somewhere in memory, at some address, say 0x1000, and it stores the value 10 inside that object. And my_var_1 is simply a name, an alias if you will, for the memory address where that object is stored (or the starting address if it overflows into multiple slots). So my_var_1 is said to be a reference to the object at 0x1000. Note that my_var_1 is not equal to 10. In fact, my_var_1 is equal to 0x1000 in this case. But 0x1000 actually represents the memory address of the data we are interested in. So, for all practical purposes, my_var_1 is equal to 10. This is how we think about it.

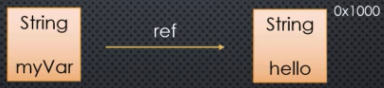

Similarly, if we write my_var_2 = 'hello', then my_var_2 gets stored somewhere in memory, say at address 0x1002. If it overflows that's fine. Now my_var_2 now becomes an alias or reference to the memory address 0x1002 that represents the data we are interested in, namely the object which is the string 'hello'.

This can be largely summed up in a picture:

It's rather important to understand that variables in Python are always references. And they're references to objects in memory.

In Python, we can find the memory address referenced by a variable by using the id() function. This will return a base-10 number. We can convert this base-10 number to hexadecimal by using the hex() function.

The id function is not something you will typically be using as you write Python programs. But we will use it quite a bit in order to understand what's happening in the Python programs we run and especially what happens to variables as we pass them along in our code throughout their lifetime.

If we run something like

my_var = 10

print(my_var)

then we will get what we expect: 10. What actually happened is that Python looked at my_var, it then looked at what memory address my_var was referencing, it found that memory address, it went to the memory address, retrieved the data from memory, and then brought it back so we can display it in our console.

Bottom line: Variables are just references to memory addresses. They're not actually equal to the value we think they are. Logically, we can think of it that way. But in real terms they're memory references.

Reference counting

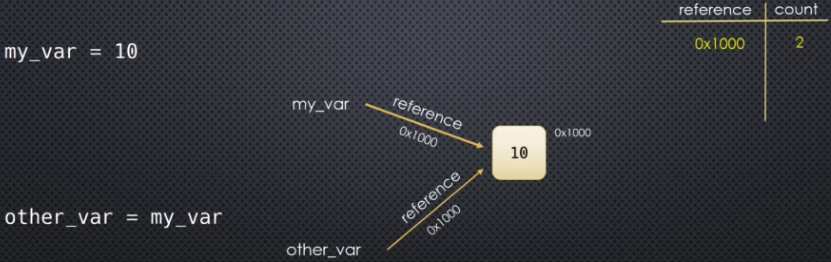

If we write the line of code my_var = 10 remember what is actually happening: Python is creating an object with an integer value of 10 at some memory address, say 0x1000 in this case. my_var is a pointer to that object--it is the reference or memory address of that object. So we can start keeping track of these objects that are created in memory. We can keep track of them by keeping track of their memory address and how many variables are pointing to them. How many other variables in our code are pointing to that some object?

Right now it's pretty clear when we declare my_var = 10 that our reference count is 1 for the memory address holding the integer value 10 referenced by my_var. But we could then write something like other_var = my_var. Remember that we are dealing with memory addresses and references. When we say other_var = my_var, we are not taking the value of 10 and assigning it to other_var--we're actually taking the reference of my_var and assigning that reference, 0x1000 in this case, to other_var. In other words, other_var is also pointing to that same object in memory (i.e., the integer value 10 being stored at memory address 0x1000).

It's important to understood the distinction outlined above. We do not have two separate objects of value 10, where my_var points to one and other_var points to another. No, when we say other_var = my_var, we're actually sharing the reference. So the reference count will go up to 2 in this case:

Let's now say my_var goes away. Maybe it falls out of scope or we assign it to a different object in memory (maybe even None). Then that reference goes away and the reference count goes down to 1. Let's now say other_var goes away. Maybe it too falls out of scope. Now there is nothing left and the reference count falls down to 0. At that point, the Python memory manager recognizes this and throws away the object if there are no references left. Now the previously occupied space in memory can be reused.

The whole process outlined above is called "reference counting" and is something the Python memory manager does for us. We don't have to worry about this since Python is doing it for us automatically. But it is valuable to know how Python is managing things behind the scenes. Garbage collection is related to this.

Before going into the code, we'll look at how we can find the reference count of a variable in Python. The sys module has a getrefcount function that we can use:

sys.getrefcount(my_var)

What's happening here is we are passing to sys.getrefcount a reference to my_var. So when we pass my_var to getrefcount we are actually creating another reference to that same object in memory. So there's kind of a downside to using this method because it always increases the reference count by 1 because simply the act of passing my_var to the function sys.getrefcount creates another reference to that same variable because variables are passed by reference in Python.

There is another function we can use that does not have this drawback:

ctypes.c_long.from_address(address).value

The difference here is that we are passing the memory address or id(my_var) (an integer, not a reference). Because we are passing the memory address, not the reference, the actual integer value, say 0x1000 in the previous example, this does not impact the reference count. This is a truer, more exact count of the number of references.

Consider this example:

import sys

a = [1,2,3]

print(sys.getrefcount(a)) # 2

As noted above, sys.getrefcount expects a variable name, a in our case. What this means is that we are passing a to getrefcount and its argument is taking the same reference that a is telling it--so it's going to point to the same object in memory. So really our reference count is going to become 2 when sys.getrefcount runs. Using this function is not an issue--just remember to subtract 1 because the simple act of passing a into sys.getrefcount has increased the reference count by 1.

To avoid this, we can import the ctypes module:

import ctypes

a = [1,2,3]

def ref_count(address: int):

return ctypes.c_long.from_address(address).value

print(ref_count(id(a))) # 1

Note how we have created a wrapper function ref_count to make our lives easier. This might seem odd at first given the problem we encountered when passing sys.getrefcount the variable a as an argument. Do we not encounter the same problem here when passing id the variable a as its argument? Clearly not. But why not? What's happening is id(a) is getting evaluated first. So, indeed, when we call id(a), when id is running, then the reference count of a is 2; but then id finishes running and returns the memory address of what variable a is pointing to. So by the time we call ref_count the id function has finished running and has released its pointer to the memory addressed referenced by its argument a. So that pointer reference is gone and the reference count is back down to 1 now.

Let's now make the assignment b = a and see what ref_count gives us:

import ctypes

a = [1,2,3]

b = a

def ref_count(address: int):

return ctypes.c_long.from_address(address).value

print(ref_count(id(a))) # 2

We can continue in this fashion, assigning other things to a and then changing the assignments:

import ctypes

def ref_count(address: int):

return ctypes.c_long.from_address(address).value

a = [1,2,3]

print(ref_count(id(a))) # 1

b = a

print(ref_count(id(a))) # 2

c = b

print(ref_count(id(a))) # 3

b = 10

print(ref_count(id(a))) # 2

You need to be very mindful when dealing directly with memory references in Python. Why? Take an example where you set a variable to None. The Python memory manager will free up that address which will become available for something else. Working with memory addresses may come up when debugging or dealing with memory leaks, but you will often not do this. It is helpful to know how Python really works behind the scenes though.

Garbage collection is related to reference counting, but it is not the same. It is a different process that Python utilizes to keep up with cleaning up the memory and keeping things freed up so we don't have memory leaks.

Garbage collection

Recall what reference counting is: As we create objects in memory, Python keeps track of the number of references we have to those objects. It could any number of references, but no matter what number of references we have, as soon as that reference count hits 0, the Python memory manager then destroys that object and reclaims the memory. So this is called reference counting. But sometimes that is not enough--it just doesn't work. In particular, we have to look at situations that can arise from so-called circular references. So what are circular references? Let's take a look at an example.

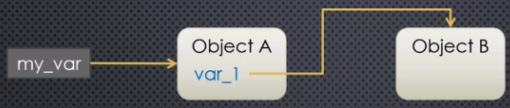

Suppose we have some variable my_var, and let's say my_var points to object A. So far so good--it's exactly what we've seen and the way in which Python normally operates. If my_var goes away or we point it to another object or None, then the reference count of object A goes from 1 to 0, and the Python memory manager at that point destroys object A and reclaims the space in memory. But let's suppose that object A has an instance variable, say var_1, that points to another object, say object B:

At this point, what happens if we get rid of the my_var pointer? Let's say we point it to None. Then the reference count of object A goes down to 0. The reference count of object B is still 1 since object A is pointing to object B. But the reference count for object A has just gone down to 0. Due to how reference counting works, object A will get destroyed. Once object A gets destroyed, object B's reference count also goes down to 0, and then it gets destroyed as well. So by destroying my_var, by removing that reference, the Python memory manager, through reference counting, will get rid of object A and B.

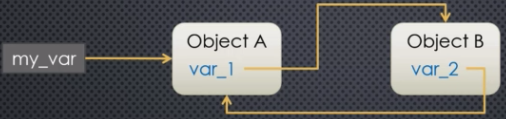

But now let's suppose object B also has an instance variable, say var_2, where var_2 points back to object A:

Now we have a circular reference. So what happens if we get rid of the my_var reference? If that goes away then the reference count for object A goes from 2 to 1. And the reference count for B stays at 1 since A is pointint to B. So in this case reference counting is not going to destroy either A or B. It can't. Because they both have references. They both have a reference count that is non-zero. And that is called a circular reference. And because the Python memory manager cannot clean that up, if we were to leave things as is, we would have a memory leak. This is where the garbage collector comes in.

The garbage collector will look and will be able to identify these circular references and clean them up. And so the memory leak goes away. The garbage collector can be controlled programmatically using the gc module. By default, the garbage collector is turned on. You can turn it off, but you really shouldn't unless you truly know what you are doing. Why would you want to turn it off? Mostly to free up some memory--the garbage collector obviously has to run (i.e., take up some space in memory) in order to collect and destroy the garbage. You can also call the garbage collector manually and interact with it and do your own cleanup. For now, just know the garbage collector is really there to clean up weak references and you should really leave it on unless you have very good reason to turn it off.

In general, the garbage collector works just fine, but as always there's a caveat. It doesn't always work, but this really applies to Python versions of less than 3.4. So if you're using Python 3.4+ then you should be fine. What was happening before is that if even one of the objects in the circular references had a destructor (e.g., __del__ ) then the order of the destructors might be important even though the garbage collector does not know what the order should be. So the obejct(s) would be marked as uncollectable. When you have that situation with a circular reference, where at least one of the objects had a destructor, then they weren't getting cleaned up by either the garbage collector or by reference counting. So you'd end up with a memory leak. This particular problem no longer exists for Python 3.4+.

Let's now look at some code and put the garbage collector through its paces and look at some circular references. We're going to look at a method, object_by_id, that's going to dig into the garbage collector and it will tell us whether or not that object exists in the garbage collector, and we'll do that by means of memory addresses.

Our object_by_id method above will basically look at all objects being tracked by the garbage collector (by means of gc.get_objects()) and it will try to find the object we are interested in by comparing the ids. There's another way we can compare memory addresses and we'll get to that later.

The commented code below illustrates all of the important points:

import ctypes

import gc

def ref_count(address: int):

return ctypes.c_long.from_address(address).value

def object_by_id(object_id):

for obj in gc.get_objects():

if id(obj) == object_id:

return "Object exists"

return "Not found"

class A:

def __init__(self):

self.b = B(self)

print('A: self: {0}, b: {1}'.format(hex(id(self)), hex(id(self.b))))

class B:

def __init__(self, a):

self.a = a

print('B: self: {0}, a: {1}'.format(hex(id(self)), hex(id(self.a))))

# prevent circular references from being cleaned up

gc.disable()

# create circular references

# two references to A:

# my_var -- direct reference to A

# my_var.b.a -- reference from B to A (created upon instantation of B)

# one reference to B: my_var.b

my_var = A()

# two references to A with same location in memory

print('Direct reference from my_var to A: ', hex(id(my_var)))

print('Reference from B to A: ', hex(id(my_var.b.a)))

# one reference to B

print('Reference from A to B: ', hex(id(my_var.b)))

# assign memory locations to new variables and get count

a_id = id(my_var.b.a)

print('Count of references to A (my_var -> A): ', ref_count(a_id)) # 2 (two references to object A)

b_id = id(my_var.b)

print('Count of references to B (my_var -> A): ', ref_count(b_id)) # 1 (one reference to object B)

# remove direct reference to object A

my_var = None

# reference from object B to A still exists but is now inaccessible: my_var.b.a

print('Count of references to A (my_var -> None): ', ref_count(a_id)) # 1

# reference from object A to B exists but is now inaccessible: my_var.b

print('Count of references to B (my_var -> None): ', ref_count(b_id)) # 1

# check to see if references are in the garbage collector

print('Reference to A in garbage collector: ', object_by_id(a_id))

print('Reference to B in garbage collector: ', object_by_id(b_id))

print('>>>> garbage being collected')

gc.collect()

print('garbage collected <<<<<')

# check to see if references are in the garbage collector

print('Reference to A in garbage collector: ', object_by_id(a_id))

print('Reference to B in garbage collector: ', object_by_id(b_id))

Here's the output (with added newline spaces for clarity):

B: self: 0x108fc9fa0, a: 0x108fc9fd0

A: self: 0x108fc9fd0, b: 0x108fc9fa0

Direct reference from my_var to A: 0x108fc9fd0

Reference from B to A: 0x108fc9fd0

Reference from A to B: 0x108fc9fa0

Count of references to A (my_var -> A): 2

Count of references to B (my_var -> A): 1

Count of references to A (my_var -> None): 1

Count of references to B (my_var -> None): 1

Reference to A in garbage collector: Object exists

Reference to B in garbage collector: Object exists

>>>> garbage being collected

garbage collected <<<<<

Reference to A in garbage collector: Not found

Reference to B in garbage collector: Not found

The takeaway from all of this: It's helpful to know that the garbage collector is largely responsible for destroying circular references. You can turn the garbage collector off, but you should almost always have it turned on.

Dynamic vs static typing

Some languages are statically typed (e.g., Java, Swift, C++, etc.). Here's some Java for example:

String myVar = "hello";

We have the variable name myVar which is to be expected, but we also have a data type at the beginning of the variable declaration (i.e., String in this case). Finally, we have a value, the string literal "hello" in this case, that we're assigning to myVar. What happens under the hood is very similar to what happens in Python:

The main thing to note here is that we are associating a data type with the variable name. So later if we try to do something like

myVar = 10;

this will not work because myVar has been declared as a String and cannot be assigned the integer value 10. Only values of type String can be assigned to myVar. For example, later on in our code, we could do something like myVar = "abc";.

Python, in contrast, is dynamically typed. So the main difference between what we saw above and the following code in Python

my_var = "hello"

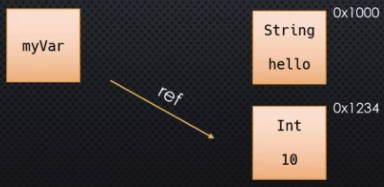

is that we are not specifying that my_var is of type string. The name my_var is just a reference, nothing more. It is a reference to an object, where that object currently happens to be a string object with a value of "hello", but the type is not attached to my_var. Late on, if we say my_var = 10, that is perfectly legal. All we are doing is creating an object in memory, an integer object with value 10, and we are changing the reference of my_var to point to that integer:

In effect, we may think that the type of my_var has changed. But not really. In Python, my_var never had a type to start off with. my_var was just a reference. What has changed is the type of the object that my_var was pointing to. We can use the built-in type() function to determine the type of the object currently referenced by a variable. But remember: variables in Python do not have an inherent static type or any type for that matter. Instead, when we call type(my_var), what really happens is Python looks up the object my_var is referencing and returns the type of the object at that memory location.

Variable re-assignment

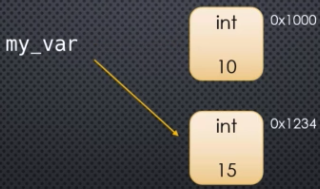

Suppose we have my_var = 10. We already know what's happening. We have an object in memory that gets created at some memory address where this object has a type of integer a value of 10 and my_var is simply a reference to that object:

If we later write a line of code like my_var = 15, then we are not changing the value inside the object located at the memory address previously taken up with an object of type integer and value of 10. Instead, what's happening is that a new object, also of type integer but with a value of 15, is created at some different memory address. And the reference of my_var changes to this new object:

But note that the value of the object at memory address 0x1000, namely 10, does not change. The my_var reference simply no longer points to this value.

To further clarify how Python handles things, consider the following lines of code:

my_var = 10

my_var = my_var + 5

The same thing happens here. Don't be fooled. Don't think that because we have my_var on both sides of the expression that somehow something changes and now we're changing the value of the original object that my_var points to. We are not. Python first evaluates the right-hand side. First, Python looks at my_var and realizes it needs to add 5 to my_var. Okay, what's my_var? my_var is an integer with a value of 10. Well, 10 plus 5 is 15, and Python then creates a new object in memory at a different memory address with a value of 15. And then the my_var pointer is updated to point at or reference the new object and its value at this new memory address.

But we did not change the value of the contents or the state of the object at the original address my_var pointed to. In fact, the value inside the integer objects can never be changed.

Some of this is easier to see by means of code:

a = 10

print(hex(id(a)))

a = 15

print(hex(id(a)))

a = a + 0

print(hex(id(a)))

a = a + 1

print(hex(id(a)))

b = 16

print(hex(id(b)))

Here's the output:

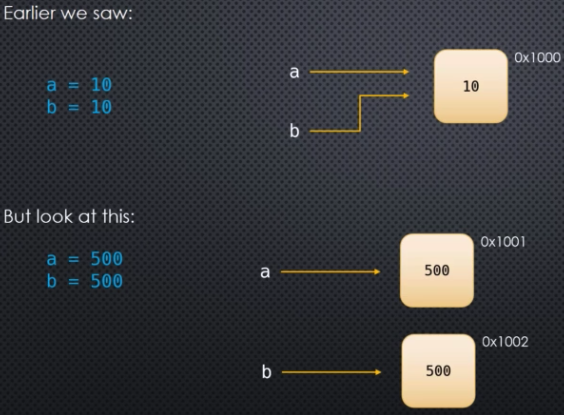

0x108989a50 # a = 10

0x108989af0 # a = 15 (different address than above)

0x108989af0 # a = 15 (same address as above despite addition of 0)

0x108989b10 # a = 16 (different address than above)

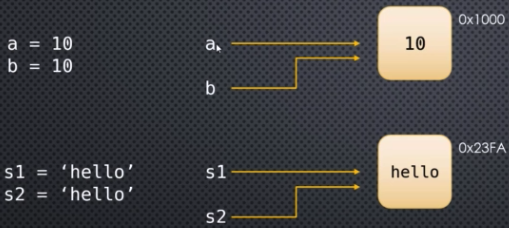

0x108989b10 # b = 16 (same address as above)

Note how the memory address is the same for a and b when a = 16 and b = 16. So they both point to the same object in memory. There's a reason for this--that object's value can never change. That will be looked at in more detail later.

Object mutability

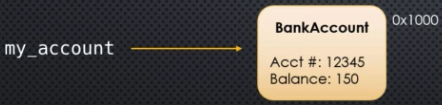

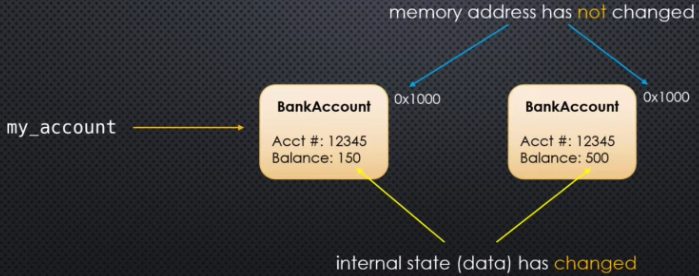

Consider some object in memory. Remember that an object is something that has a type and it also has some internal data--it has state. And it's located at some memory address. Changing the data inside the object is called modifying the internal state of the object. What's an example of this? Suppose we have a variable my_account that references some object in memory at some memory address, say 0x1000:

So the data type of our object is BankAccount and the object has two instance properties, the account number and the balance. Suppose we modify the balance in some way (payment or deposit):

So the internal state (i.e., data) has changed. But the memory address has not changed. Previously, when we had my_var = 10 and then later changed this to my_var = 15, we ended up changing the reference; that is, the memory address that my_var pointed to changed but the object originally pointed to did not change. But now we are actually modifying the internal state of the same object. Integer objects are immutable and nothing about them can change--their internal state or data cannot be modified. An integer cannot change from, say, 7 to 8. But the reference we are using to point to an integer object can change. And that's usually what happens. But now we are looking at cases where the object we are pointing to can actually change or mutate. The memory address we are pointing to will not change, but the internal state of the object being pointed to can change.

A change as described above, where the object being referenced or pointed to has its internal state changed, is called a mutation. To say an object was mutated is just a fancy way of saying that the internal data of that object has changed. An object whose internal state can be changed is called mutable. An object whose internal state cannot be changed is called immutable (the object gets created in memory but we can never change the state of that object). These are different definitions we should keep in mind because they impact how we program. Let's look at some examples of immutables and mutables in Python:

| Immutable | Mutable |

|---|---|

Numbers (int, float, bool, complex, etc.) | Lists |

| Strings | Sets |

| Tuples | Dictionaries |

| Frozen sets | User-defined classes |

| User-defined classes (when no methods allow modification) |

Let's take a look at some finer points worth bearing in mind, namely how mutability and immutability can sometimes intermingle in potentially unexpected ways. Let's say we have a tuple:

t = (1, 2, 3)

Remember that tuples are immutable. Elements cannot be deleted, inserted, or replaced. In the case above, both the container (the tuple) and all its elements (ints) are immutable. So not much can be done in the way of intermingling mutability with immutability. But what if we now define mutable elements a and b that comprise a tuple t:

a = [1, 2]

b = [3, 4]

t = (a, b)

Can we change a and b and see those changes reflected in t?

Sure:

a = [1, 2]

b = [3, 4]

t = (a, b)

print(t)

# modify the elements of a and b in place

a.append(3)

a.extend([4,5,6])

b.append('lions')

b.extend(['bears', 'tigers'])

print(t)

Here's the output:

([1, 2], [3, 4])

([1, 2, 3, 4, 5, 6], [3, 4, 'lions', 'bears', 'tigers'])

The very important thing is that we modify a and b in place; that is, in order to see changes to lists a and b reflected in the tuple t, the memory addresses to which a and b point must remain the same. In fact, it may be helpful to view t as simply consisting of the memory addresses originally pointed to by a and b--the memory address of a is 0x123 and the memory address of b is 0x124, then t is basically a collection of just 0x123 and 0x124 and always will be (even if we modify the internal state or data of the objects residing at 0x123 and 0x124).

If we do something like a = a + a, then the new a formed by concatenating a with a will not point to the same memory address that the original a pointed to. The + operation for lists is not an in-place operation--this operation will concatenate two lists and return the newly concatenated list. The newly created list by concatenation will reside somewhere in memory different from the original a value.

This example should make all of this clear:

a = [1, 2, 3]

a_id = a

t = (a)

print('Memory address of a: ', hex(id(a)))

print('Memory address of a_id: ', hex(id(a_id)))

print('Tuple t: ', t)

a.append(4)

print('Memory address of a: ', hex(id(a)))

print('Memory address of a_id: ', hex(id(a_id)))

print('Tuple t: ', t)

a = a + [-2, -1]

a_id.extend([5, 6])

print('Memory address of a: ', hex(id(a)))

print('Memory address of a_id: ', hex(id(a_id)))

print('Tuple t: ', t)

Here's the output:

Memory address of a: 0x1088425c0

Memory address of a_id: 0x1088425c0

Tuple t: [1, 2, 3]

Memory address of a: 0x1088425c0

Memory address of a_id: 0x1088425c0

Tuple t: [1, 2, 3, 4]

Memory address of a: 0x1088ccb40

Memory address of a_id: 0x1088425c0

Tuple t: [1, 2, 3, 4, 5, 6]

Note how the memory address of a changes; that is, a = a + [-2, -1] results in a pointing to a different memory address than that which it originally pointed to. The memory address that a originally pointed to has been saved as a_id, and this reference never changes throughout the block of code above.

In summary, although the tuple t is immutable, the element residing within it (i.e., a) is not. The object reference in the tuple did not change (i.e., the original memory address pointed to by a), but the referenced object did mutate (not by a = a + [-2, -1] but by a_id.extend([5, 6])).

Function arguments and mutability

We are going to look at how our variables may or may not be effected by functions when we call them and pass our variables to them. Mutability and immutability are really important in this context.

Recall from before that, for example, strings (str) are immutable objects in Python. Once a string has been created, the contents of the object can never be changed. If we write my_var = 'hello', then the only way to modify the "value" of my_var is to re-assign my_var to another object.

In general, immutable objects are safe from unintended side-effects. And by side-effects we mean that when we call a function with our variable, then that function may or may not alter the value of that variable. If we have immutable objects, then we have a general amount of safety.

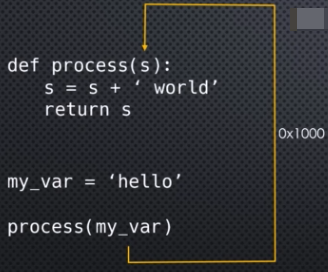

Suppose we have a function process that takes in a parameter s:

def process(s):

s = s + " world"

return s

And let's say in our main code we have my_var = "hello". In this example, we basically have two scopes:

modulescopeprocessscope

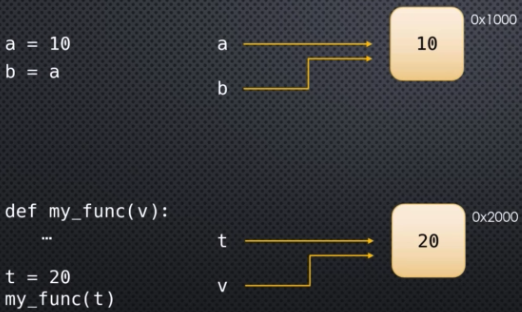

When we run my_var = "hello" the module scope has a variable called my_var and it points to some object in memory, namely the immutable string object "hello" in this case. When we run process(my_var), we are calling the process function and we are passing my_var as the argument. This next part is critically important to understand: my_var's reference is passed to process():