Fundamentals of System Design (Try Exponent)

1 - Introduction to system design

Great coding skills will get you far in a software engineering career, but strong system design skill will get you further. Senior software engineers' (SWE) performance in system design interviews is often more important than coding rounds, and for engineering managers and up, system design replaces coding entirely.

Why? Because system design interviews are as real as it gets in terms of assessing true on-the-job performance. Great engineers must understand how distributed systems work — how each component processes information, communicates, and scales up or down — because this high-level view is critical to strategic decision-making. Anything else is reactive.

Goals of the course

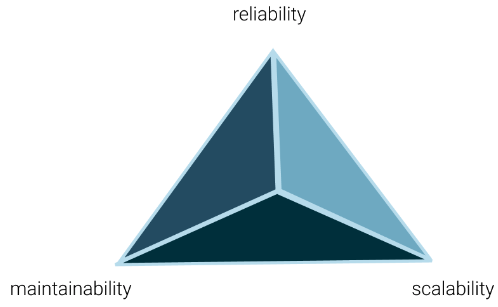

- Discuss the necessary tradeoffs between reliability, scalability, and maintainability in real-world systems.

- Review common components of distributed systems and design strategies and describe what they are, why they're important, and when to use them.

- Apply a framework to system design questions to arrive at a high-quality system design quickly.

- Identify and avoid common pitfalls in system design interviewing.

The purpose of this course is to give you the resources you need to feel confident in system design interviews. You'll orient yourself to your interviewers' perspective and practice a framework for working through vague problems, review fundamental concepts and must-know components, learn common design strategies and when to use them, and, of course, practice.

How to get the most out of your system design prep

We recommend following the below study plan.

- Read through the next lesson on the system design interview to get a sense of what interviewers are looking for to orient yourself to the course. System design and system design interviews are a bit different. You'll want to practice your communication skills as thoroughly as your design fundamentals.

- Next, read through the framework for acing system design questions and note the timing. We've designed the framework such that you can cover everything important in your 45-minute round. Keep the cadence in mind, and begin to practice as soon as you can.

- Watch a few system design mock interviews to get a sense of how SWEs and EMs answer these questions in interviews. Try to get a feel for where your own weak areas are conceptually - this will help you prioritize your fundamentals practice. Once you know what to focus on, we recommend you start by browsing through the system design glossary to re-familiarize yourself with important terms before beginning the system design fundamentals course.

- Read about the architecture(s) in place at your target company to get in the right mindset. After you're familiar with the format, choose a practice question asked recently at your target company. When you're ready for live practice, sign up for a peer mock interview. System design mocks run twice daily at 8:00am and 6:00pm PST. Sign up now!

2 - The system design interview

System design is a squarely technical interview, right? ...Not quite.

System design rounds are categorized as technical interviews, much like coding rounds, but they differ in a few significant ways. For example:

- Most prompts are intentionally vague. Coding challenges are clear, system design prompts are not. You choose which direction to take — which is why asking clarifying questions and following a framework are so important. You're time-bound, so staying on track is key.

- There is no "right" answer. It's true that there are good designs and bad designs, but as long as your choices are justifiable and you articulate tradeoffs, you can let your creativity shine.

- You're engaged in a two-way dialogue. This is critical. In a real-world scenario, you would never go off on your own with only a vague idea of what to build, right? We hope not. You want to work with your interviewer every step of the way. Spend a good amount of time clarifying requirements in the beginning, check-in throughout, and make sure to spend time summarizing and justifying your choices at the end.

Even the most brilliant engineer can tank the system design interview if they forget that communication skills are being assessed too. Unlike most coding rounds, the system design interview mimics real-world conditions. It's not often that you're given clear requests with features neatly outlined. Mostly, you'll have to tease out specifics yourself. The best engineers are able to take fuzzy customer or business requests and build a working system, but not before asking a lot of questions, thinking through tradeoffs, and justifying their choices. Keep this in mind as we walk you through the basics.

Which roles will encounter system design questions?

Nowadays, we're all working hard to build scalable applications. System design skills are applicable to more roles than ever before, but it should make sense that the rubric for an engineering manager with 15 years of experience will be very different from a junior software engineer, which will differ from a technical program manager. Whatever your role, we believe that it's worth spending a significant amount of your interview prep time studying system design because it will help you perform better at your job.

What is the format?

Typically, you'll be given 45 minutes to an hour to design a system given a vague prompt like "Design a global file storage and sharing service." Companies like to ask you to design systems that will solve problems they're actually facing, so it's good practice to orient your prep to the types of questions you're likely to be asked.

For example, if you interview at Google, focus on search-related questions. At Facebook, practice designing systems that handle large file storage. At Stripe, practice API design or authentication-oriented questions.

You'll likely use the whiteboard (if you're in person) or an online tool such as Google Drawings to outline your design.

If you have an interview scheduled, ask your recruiter how you'll present and practice answering questions within that medium. If you're told you can choose, we recommend picking one tool and practicing consistently. This will help alleviate anxiety on the big day.

In the next lesson, we'll show you a framework for working through these open-ended questions. You have a lot of freedom to adjust the framework to suit you, but we strongly recommend sticking to some sort of framework as it's easy to get off track.

How will I be assessed?

The business of technology is complex. Every problem is custom and every organization wants to accomplish the most with the least; minimizing waste and error and maximizing output and performance. To stay competitive in this environment, employers are looking for self-starting problem solvers who can anticipate obstacles and choose the best options at every turn. But how do you know what's best? The first step is understanding the landscape. To understand the problem space, you need to do two things:

- Basic knowledge of the fundamentals of good software design. That is, efficiency in terms of reliability, scalability, maintainability, and cost.

- Communication skills that allow you to scope out the problem, collect feature and non-feature requirements, justify your choices, and pivot when necessary.

Let's take a look at how this maps to a sample FAANG company interview rubric.

This rubric is mostly applicable for junior or mid-level engineers. The expectations for an advanced candidate will be higher, especially around identifying bottlenecks, discussing tradeoffs, and mitigating risk.

Bad Answer (No Hire)

- The candidate wasn't able to take the lead in the discussion.

- The candidate didn't ask clarifying questions and/or failed to outline requirements before beginning the design.

- The candidate failed to grasp the problem statement even after hints.

- The candidate missed critical components of the architecture, and/or couldn't speak to the relationship between components.

- Design flow was vague and unclear and the candidate couldn't give a detailed explanation even after hints.

- The design failed to meet specs.

- The candidate failed to discuss tradeoffs and couldn't justify decisions.

Fair Answer (On-the-Fence Hire)

- The candidate attempted to take the lead but needed significant guidance/hints to stay on track, or didn't interact much with their interviewer.

- The candidate defined some features, but the user journey/use case narrative was vague or incomplete.

- During requirements definition, important features were left out (but were hit upon later), or the candidate had to be given a few hints before a fair set of features was defined.

- The candidate identified most of the core components and understood the general connection between them (possibly with a hint or two.)

- The architecture may have had some issues but the candidate addressed them when they were pointed out, or the design flow, in general, could be improved.

- The candidate identified bottlenecks, but had trouble discussing tradeoffs and missed important risks.

Great Answer (Hire/Strong Hire)

- The candidate took the lead immediately and "checked in" regularly.

- If/when the interviewer asked to dive deeper into one or more areas, the candidate switched gears gracefully.

- The candidate identified appropriate, realistic non-functional requirements (e.g. business considerations like cost, serviceability) as well as functional requirements, and used both to define design constraints and guide decision-making.

- The high-level design was complete, component relationships were explained, and appropriate alternatives were discussed given realistic shifts in priorities.

- Bottlenecks were identified, alternatives were offered, and a quick summary of the pros and cons of each was given. Notice how there is both a knowledge and a communication component to each. For example:

- A candidate who creates a reasonable architecture but who rushes through a design likely be an on-the-fence candidate.

- A candidate who fails to take the lead on the discussion will likely be a no-hire.

- Consistently — great answers are both technically complete and are communicated well, with tradeoffs and bottlenecks identified and continuously tied back to requirements defined early on.

As you can imagine, arriving at great system designs in an interview scenario takes a lot of practice. It also takes a structured approach. We'll walk you through a framework in the next lesson.

3 - How to answer system design questions

What's the purpose of the system design interview?

The system design interview evaluates your ability to design a system or architecture to solve a complex problem in a semi-real-world setting.

It doesn't aim to test your ability to create a 100% perfect solution; instead, the interview assesses your ability to:

- Design the blueprint of the architecture

- Analyze a complex problem

- Discuss multiple solutions

- Weigh the pros and cons to reach a workable solution

This interview and the manager or behavioral interview are often used to determine the level at which you will be hired.

This lesson focuses on the framework for the system design interview.

Why use a system design interview framework?

Unlike a coding interview, a system design interview usually involves open-ended design problems.

If it takes a team of engineers years to build a solution to a problem, how can one design a complicated system within 45 minutes?

We need to allocate our time wisely and focus on the essential aspects of the design under tight time pressure. We must define the proper scope by clarifying the use cases.

An experienced candidate would not only show how they envision the design at a higher level but also demonstrate the ability to dive deep to address realistic constraints and tricky operational scenarios.

We also aim to maintain a clear communication style so that our interviewers understand why we want to spend time in certain areas and have a clear picture of our path forward.

Establishing a system design interview framework helps us:

- Better manage our time

- Reinforce our communication with the interviewer

- Lead the discussion toward a productive outcome

Once you're familiar with the framework, you can apply it every time you encounter a system design interview.

Anatomy of a system design interview

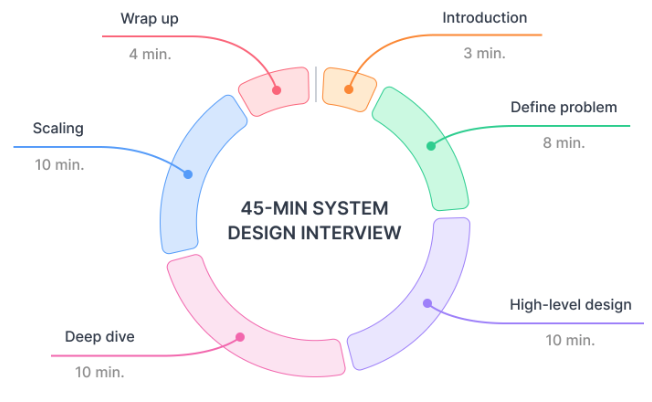

A system design interview typically consists of 5 steps:

- Step 1: Define the problem space. Here, we understand the problem and define the scope of the design.

- Step 2: Design the system at a high level. We lay out the most fundamental pieces of the system and illustrate how they work together to achieve the desired functionality.

- Step 3: Deep dive into the design. Either you or your interviewer will pick an interesting component, and you will discuss its details.

- Step 4: Identify bottlenecks and scaling opportunities. Think about the current design's bottlenecks and how we can change the design to mitigate them and support more users.

- Step 5: Review and wrap up. Check that the design satisfies all the requirements and potentially identify directions for further improvement.

A typical system design interview lasts about 45 minutes. A good interviewer leaves a few minutes in the beginning for self-introductions and a couple of minutes at the end for you to ask questions.

Therefore, we usually only have about 45 minutes for technical discussion. Here's an example of how we can allocate the time for each of the steps:

- Step 1: 8 minutes

- Step 2: 10 minutes

- Step 3: 10 minutes

- Step 4: 10 minutes

- Step 5: 4 minutes

The time estimate provided is approximate, so feel free to adjust it based on your interview style and the problem you're trying to solve. Integrating all the steps into a structured interview framework is important.

Step 1: Define your problem space

Time estimate: 8 minutes

It's common for issues to be unclear at this stage, so it's your job to ask lots of questions and discuss the problem space with your interviewer to understand all the system constraints.

One mistake to avoid is jumping into the design without first clarifying the problem.

It's important to capture both functional and non-functional requirements. What are the functional requirements of the system design? What's in and out of scope?

For instance, if you're designing a Twitter timeline, you should focus on tweet posting and timeline generation services instead of user registration or how to follow another user.

Also, consider whether you're creating the system from scratch. Who are our clients/consumers? Do we need to talk to pieces of the existing system?

Non-functional requirements

Once you've agreed with your interviewer on the functional requirements, think about the non-functional requirements of the system design. These might be linked to business objectives or user experience.

Non-functional requirements include: availability, consistency, speed, security, reliability, maintainability, cost.

- Availability

- Consistency

- Speed

- Security

- Reliability

- Maintainability

- Cost

Some questions you might ask your interviewer to understand non-functional requirements are:

- What scale is this system?

- How many users should our app support?

- How many requests should our server handle? A low query-per-second (QPS) number may mean a single-server design, while higher QPS numbers may require a distributed system with different database options.

- Are most use cases read-only? If so, that could suggest a caching layer to speed up reading.

- Do users typically read the data shortly after someone else overwrites it? That may indicate a strongly consistent system, and the CAP theorem may be a good topic to discuss.

- Are most of our users on mobile devices? If so, we must deal with unreliable networks and offline operations.

If you've identified many design constraints and feel that some are more important than others, focus on the most critical ones.

Make sure to explain your reasoning to your interviewer and check in with them. They may be interested in a particular aspect of your system, so listen to their hints if they nudge you in one direction.

Estimate the amount of data

You can do some quick calculations to estimate the amount of data you're dealing with.

For example, you can show your interviewer the queries per second (QPS) number, storage size, and bandwidth requirements. This helps you choose components and give you an idea of what scaling might look like later.

You can make some assumptions about user volume and typical user behavior, but check with your interviewer if these assumptions match their expectations.

Keep in mind that these estimates might not be exact, but they should be in the right range.

Step 2: Design your system at a high level

Time estimate: 10 minutes

Based on the constraints and features outlined in Step 1, explain how each piece of the system will work together.

Don't get into the details too soon, or you might run out of time or design something that doesn't work with the rest of the system.

You can start by designing APIs (Application Programming Interfaces), which are like a contract that defines how a client can access our system's resources or functionality using requests and responses. Think about how a client interacts with our system.

Maybe a client wants to create/delete resources, or maybe they want to read/update an existing resource.

Each requirement should translate to one or more APIs. You can choose what type of APIs you want to use Representational State Transfer, [REST], Simple Object Access Protocol [SOAP], Remote Procedure Call [RPC], or GraphQL, and explain why. You should also consider the request's parameters and the response type.

Once the APIs are established, they should not be easily changed and become the foundation of our system's architecture.

How will the web server and client communicate?

After designing the APIs, think about how the client and web server will communicate. Some popular choices are:

- Ajax Polling

- Long Polling

- WebSockets

- Server-Sent Events

Each has different communication directions and performance pros and cons, so make sure you discuss and explain your choice with your interviewer.

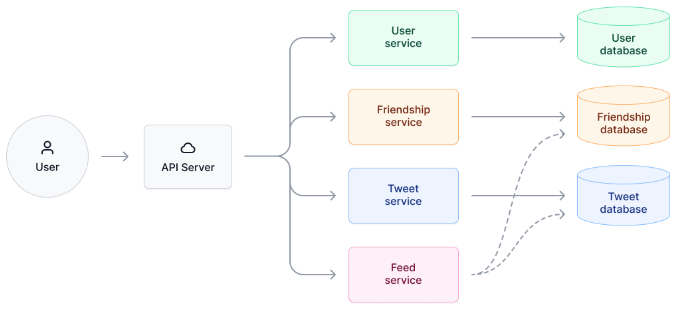

Create a high-level system design diagram

After designing the API and establishing a communication protocol, the next step is to create a high-level design diagram. The diagram should act as a blueprint of our design and highlight the most critical pieces to fulfill the functional requirements.

To illustrate the data and control flow in a system design question for a "Design Twitter" project, draw a high-level diagram. In this diagram, we've abstracted the design into an API server, several services we want to support, and the core databases.

At this stage, we should not dive deep into the details of each service yet. Instead, we should review whether our design satisfies all the functional requirements. We should demonstrate to the interviewer how the data and control flow look in each functional requirement.

In the Twitter design example above, we might want to explain to our interviewer how the following flows work:

- How a Twitter user registers or logs in to their account

- How a Twitter user follows or unfollows another user

- How a Twitter user posts a tweet

- How a Twitter user gets their news feed

If the interviewer explicitly asks us to design one of the functionalities, we should omit the rest in the diagram and only focus on the service of interest.

We should be mindful not to dive into scaling topics such as database sharding, replications, and caching yet.

We should leave those to the scaling section.

Step 3: Deep-dive

Time estimate: 10 minutes

Once you have a high-level diagram, it's time to examine system components and relationships in more detail.

The interviewer may prompt you to focus on a particular area but don't rely on them to drive the conversation. Check in regularly with your interviewer to see if they have questions or concerns in a specific area.

How do your non-functional requirements impact your design?

Consider how non-functional requirements impact design choices.

For example, if our system requires transactions, consider using a database that provides the ACID (Atomicity, Consistency, isolation, and Durability) property.

If an online system requires fresh data, think about how to speed up the data ingestion, processing, and query process.

If the data size fits into memory (up to hundreds of GBs), consider putting the data into memory. However, RAM is prone to data loss, so if we can't afford to lose data, we must find a way to make it persistent.

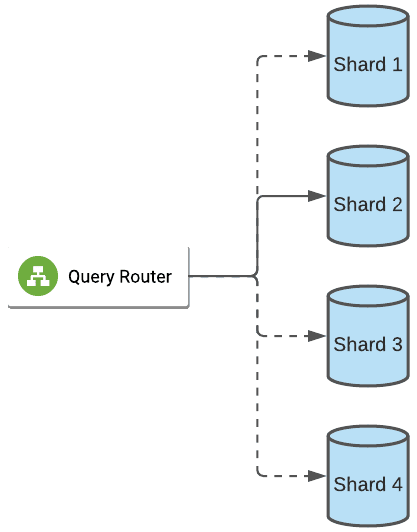

If the amount of data we need to store is large, we might want to partition the database to balance storage and query traffic.

Remember to revisit the data access pattern, QPS number, and read/write ratio discussed in Step 1 and consider how they impact our choices for different databases, database schemas, and indexing options.

We might need to add some load balancer layers to distribute the read/write traffic.

Keep in mind that we are expected to present different design choices along with their pros and cons, and explain why we prefer one approach over the other.

Remember that the system design question usually has no unique "correct" answer.

Therefore, weighing the trade-offs between different choices to satisfy our system's functional and non-functional requirements is considered one of the most critical skill sets in a system design interview.

Step 4: Identify bottlenecks and scale

Time estimate: 10 minutes

After completing a deep dive into the system components, it's time to zoom out and consider if the system can operate under various conditions and has room to support further growth.

Some important topics to consider during this step include:

- Is there a single point of failure? If so, how can we improve the robustness and enhance the system's availability?

- Is the data valuable enough to require replication? How important is it to keep all versions consistent if we replicate our data?

- Do we support a global service? If so, must we deploy multi-geo data centers to improve data locality?

- Are there any edge cases, such as peak time usage or hot users, that create a particular usage pattern that could deteriorate performance or even break the system?

- How do we scale the system to support 10 times more users? As we scale the system, we may want to upgrade each component or migrate to another architecture gradually.

Concepts such as horizontal sharding, CDN (content delivery network), caching, rate-limiting, and SQL/NoSQL databases should be considered in the follow-up lessons.

Step 5: Wrap up

Time estimate: 4 minutes

This is the end of the interview where you can summarize: review requirements, justify decisions, suggest alternatives, and answer questions. Walk through your major decisions, providing justification for each and discussing any tradeoffs in terms of space, time, and complexity.

Throughout the discussion, we recommend to refer back to requirements periodically.

4 - System design principles

Purpose of System Design

How do we architect a system that supports the functionality and requirements of a system in the best way possible? The system can be "best" across several different dimensions in system-level design. These dimensions include:

- Scalability: a system is scalable if it is designed so that it can handle additional load and will still operate efficiently.

- Reliability: a system is reliable if it can perform the function as expected, it can tolerate user mistakes, is good enough for the required use case, and it also prevents unauthorized access or abuse.

- Availability: a system is available if it is able to perform its functionality (uptime/total time). Note reliability and availability are related but not the same. Reliability implies availability but availability does not imply reliability.

- Efficiency: a system is efficient if it is able to perform its functionality quickly. Latency, response time and bandwidth are all relevant metrics to measuring system efficiency.

- Maintainability: a system is maintainable if it easy to make operate smoothly, simple for new engineers to understand, and easy to modify for unanticipated use cases.

What do these things mean in the context of system-level design? Let's take a look at a simple example of a distributed system to see what we mean.

Simple Distributed System Example

Let us consider a simple write system. The web service is designed to allow users to write and save information, and get an associated URL with the associated text that they have written (like PasteBin).

- Scalable. The use of load-balancers and caches and the choice of databases (object storage S3 and noSQL) will help facilitate additional load on the system from additional users.

- Reliable. The system will be able to reliably handle the functionality (i.e. where some number of clients are able to write text and get an associated URL with the text they have written) and by how the APIs are defined (not shown), should be able to handle user mistakes.

- Efficient. The introduction of the caching allows for greater efficiency for read requests by the user. The load-balancing also distributes the load more evenly across servers to make the system more efficient.

- Available. The load-balancers are distributing the load across multiple servers, meaning there is less likelihood of failure that will cause the system to crash. Additional databases can be used for redundancy in the case of error or failures.

- Maintainability. The APIs (not pictured here) are designed in a way that allows for modularity (i.e. separation of read and write calls). This allows for easy maintainability of the system.

You should always have the above distributed system properties (scalability, reliability, efficiency, availability and maintainability) in the back of your mind as you walk through each of the steps in the system-design interviews!

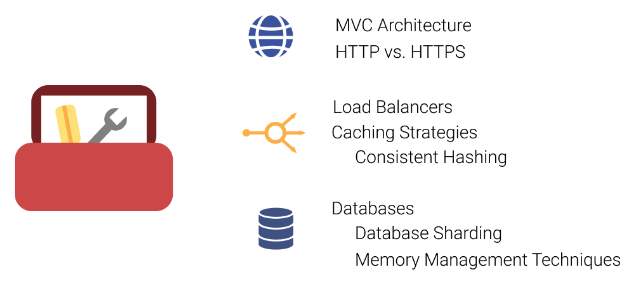

System Design Toolbox

There are so many components, algorithms, and architectures that support optimality in the above dimensions of distributed system design.

You will quickly find that each element in the system design toolbox may be great with respect to one dimension but at the cost of being low-performing in another (i.e. a component might be extremely efficient but not as reliable, or a certain design choice might be really effective at supporting one functionality like providing read access but not as efficient with write access).

In other words, there are tradeoffs that you must consider as you make informed system design choices. The best way to understand these tradeoffs is to understand how each of these components, algorithms and architectural designs work. In particular it is helpful to know how each element (tool):

- Works independently.

- Compares to other tools that perform similarly.

- Fits together in the bigger picture of system-level design.

5 - How to answer web protocol questions

How do networked computers actually talk to each other? Distributed systems would be completely impossible without the networks' physical infrastructure including client machines and servers, and rules and standards for sending and receiving data over that infrastructure. Those rules and standards are called network protocols.

Why are network protocols important?

It's easy to imagine the chaos that would ensue if communication between networked machines wasn't standardized. Network protocols have been around since the beginning, but the modern internet has converged on two models to describe how systems interact.

Two models for networked computer systems

The easiest way to think of the following networking models is as a stack of layers (hence the term "tech stack."). Generally, lower layers package data according to certain protocols which transmit upward where it is received as input by the next layer. This happens again and again until the data reaches its intended destination.

Data is transferred first through physical means like ethernet, then between computers through IP networks, and finally from user to user via the internet over the top, or "application" layer.

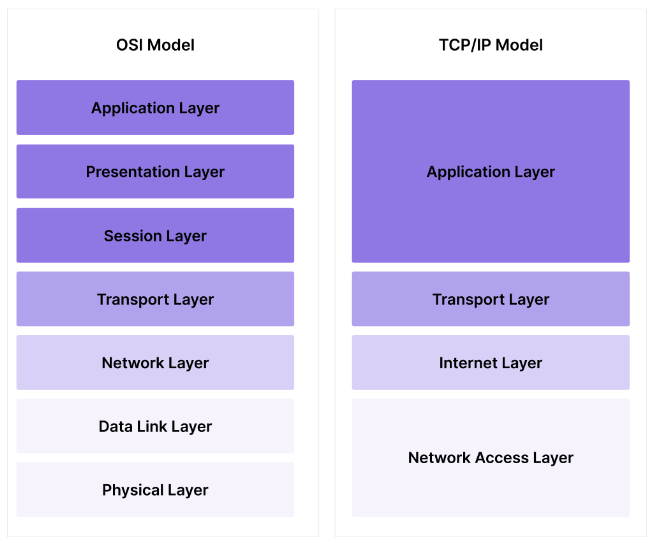

There are two models to be aware of. The TCP/IP model which maps more directly to protocols, and the more conceptual 7-layer OSI model.

TCP/IP Model

The TCP/IP model, named after its two main protocols which we will describe in more detail below, has four layers, starting with the base:

- The Network Access Layer or Link Layer, which represents a local network of machines; the "hardware" layer.

- The Internet Layer, which describes the much larger network of devices interconnected by IP addresses according to IP protocols (IPv4 or IPv6.)

- The Transport Layer which includes protocols for sending and receiving data via packets, e.g. TCP and UDP.

- The Application Layer, which describes how data is sent to and from users over the internet, e.g. HTTP and HTTPS.

OSI Model

The OSI Model or Open Systems Interface model is useful at a conceptual level. It's protocol-agnostic and more detailed. Instead of 4 layers, it breaks the Link Layer and Application Layer of the TCP/IP model into a few more pieces.

TCP (Transmission Control Protocol) and IP (Internet Protocol)

TCP/IP is the most commonly used protocol suite used today. It includes both the IP (via the internet layer) and TCP (via the transport layer) protocols. How does it work? Data packets are transmitted across network nodes. The Internet Layer connects these nodes through IP addresses and TCP protocol operating the transport Layer provides flow-control, establishes connections, and reliable transmission. TCP is a necessary "intermediary" because data packets sent over IP are often lost, dropped, or arrive out-of-order. TCP protocol was built with an emphasis on accuracy, so it's best used in applications where accuracy is more important than speed of delivery.

IPv4 vs. IPv6: You may have come across two different IP protocols — version 4 and version 6. IPv4 addresses include 4 numbers from 0 to 255 separated by periods. This standard was created in the 1980s when the number of available addresses, over 4 billion, seemed like plenty. Obviously, this isn't the case today. IPv6 allows for effectively unlimited IP addresses and includes useful new features (like the ability to stay connected to multiple networks at the same time) and better security, but the two protocols coexist today.

UDP (User Datagram Protocol)

UDP is a simpler alternative to TCP that also works with the IP protocol to transmit data. It's connectionless, making it much faster than TCP, but because it has none of the error-handling capabilities of TCP, it's error-prone. UDP is mainly used for streaming applications such as Skype, where users accept occasional delays in exchange for real-time service.

Together, TCP and UDP make up most internet traffic at the Transport Layer.

TCP vs. UDP

TCP emphasizes accurate delivery rather than speed and enforces the "rules of the road", similar to a traffic cop. How? It's connection-oriented, which means that the server must be "listening" for connection requests from clients, and the client and server must be connected before any data is sent. Because it's a stateful protocol, context is embedded into the TCP segment ("packaged" segments of the data stream including a TCP header), meaning that TCP can detect errors (either lost or out-of-order data packets) and request re-transmission.

HTTP and HTTPS

HTTP (Hypertext Transport Protocol) is the original request-response application layer protocol designed to connect web traffic through hyperlinks. It's the main protocol used by everything connected to the Internet. HTTP defines:

- A set of request methods (GET, POST, PUT, etc. - the same methods RESTful APIs use)

- Addresses (known as URLs)

- Default TCP/IP ports (port 80 for HTTP, port 443 for HTTPS).

Every time you visit a site with a http:// link, your browser makes a HTTP GET request for that URL.

HTTP is still in use, but it's been largely replaced by HTTPS (Hypertext Transport Protocol Secure), which serves the same purpose but with much better security features. In 2014, Google announced that it would give HTTPS sites a bump in rankings. That, combined with the increasing need for encrypted data transmission, resulted in much of the web over migrating to HTTPS.

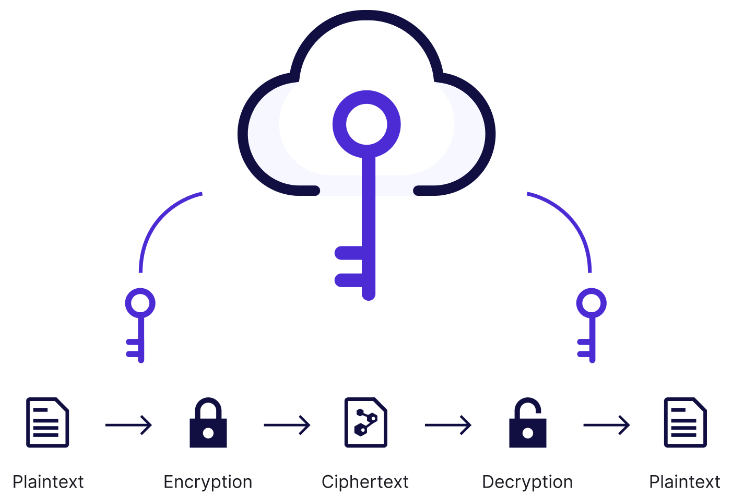

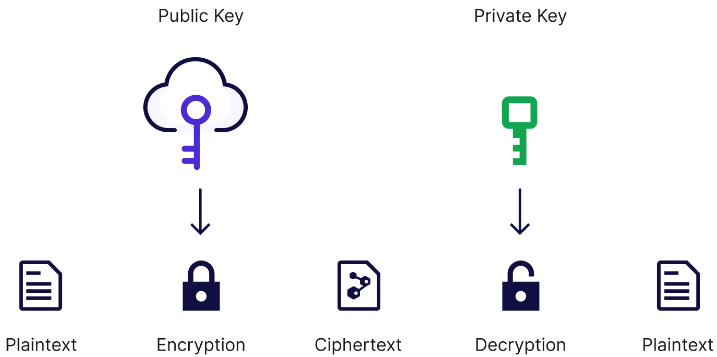

TLS Handshake Procedure

HTTPS works on top of TLS (Transport Layer Security) by default. TLS is a protocol used to encrypt communications in the transport layer, preventing unauthorized parties from listening in on communications. The process for initiating a secure session through TLS is called a TLS handshake. Here's what happens.

- The client requests to establish a secure connection with a server, usually by using port 443 which is reserved for TLS connections.

- The client and server agree to use a particular cipher suite (ciphers and hash functions.)

- The server submits a digital certificate which serves as proof of identity. Digital certificates are issued by 3rd party Certificate Authorities (CAs) and effectively vouch for the server.

- If the certificate is accepted by the client, the client will generate a session key which is used to encrypt any information transmitted during the session.

Once the session key is created, the handshake is finished and the session begins. All data transmitted will now be encrypted.

WebSocket vs HTTP

WebSocket is a newer communications protocol designed as an alternative which helps solve some key issues. HTTP was designed to be strictly unidirectional; the client must always request data from the server, and only one HTTP request can be sent per session. Lots of modern applications require longer session times and/or continuous updates from the server. Long-polling, a technique that keeps client-server connections open longer, helps, but it doesn't solve the problem — and it's very resource-intensive.

The WebSocket protocol works similarly to HTTP, but with some improvements (and tradeoffs.) It allows servers to send data to clients in a standardized way without first receiving a request, and it allows for multiple messages to be passed back and forth over the same connection. It's fully compatible with HTTP (and HTTPS), and it's much less computationally demanding than polling.

There are some drawbacks to WebSocket as compared to HTTP, namely:

- WebSocket has no built-in, standardized API semantics like HTTP's status codes or request methods.

- Keeping communications open between each client and server is more resource-intensive and adds complexity.

- It's less widespread, so development can take longer.

Most WebSocket use cases require real-time data. In a system design interview, consider WebSocket vs. HTTP for applications where updates are frequent, and up-to-date information is critical. Think messaging services, gaming, and trading platforms.

When to bring this up in an interview

Web protocols might not be an area you're likely to deep-dive or discuss explicitly in your interview, but having a thorough understanding of the architecture underpinning the internet will be extremely helpful in designing anything built to transmit data over the web. If you are asked specific follow-ups, remember:

- At the transport layer, you're likely to choose either the TCP or UDP protocol to send data. Choose TCP if you're more concerned with data accuracy, and UDP if quick transmission is needed (with tolerance for some errors — like in a video streaming application.)

- At the application layer, you have some choices to make as well. You'll probably choose HTTPS over HTTP for security reasons. If you need to maintain open client-server communications (for example, if you're building a fast-paced two-player game and you need to maintain up-to-date scores) you may choose WebSocket over HTTP.

- If you're designing a service with an API, consider HTTP (HTTPS) over WebSocket as you'll be able to make use of HTTPs standardized request methods and status codes; important if you're designing a RESTful API.

Further reading

- Check out this article on Slack's engineering blog to learn about how Websockets works at scale within Slack.

- Google's security blog has covered the migration from HTTP to HTTPS migration from the beginning.

6 - How to cover load balancing

A load balancer is a type of server that distributes incoming web traffic across multiple backend servers. Load balancers are an important component of scalable Internet applications: they allow your application(s) to scale up or down with demand, achieve higher availability, and efficiently utilize server capacity.

Why we need load balancers

In order to understand why and how load balancers are used, it's important to remember a few concepts about distributed computing.

First, web applications are deployed and hosted on servers, which ultimately live on hardware machines with finite resources such as memory (RAM), processor (CPU), and network connections. As the traffic to an application increases, these resources can become limiting factors and prevent the machine from serving requests; this limit is known as the system's capacity. At first, some of these scaling problems can be solved by simply increasing the memory or CPU of the server or by using the available resources more efficiently, such as multithreading.

At a certain point, though, increased traffic will cause any application to exceed the capacity that a single server can provide. The only solution to this problem is to add more servers to the system, also known as horizontal scaling. When more than one server can be used to serve a request, it becomes necessary to decide which server to send the request to. That's where load balancers come into the picture.

How load balancers work

A good load balancer will efficiently distribute incoming traffic to maximize the system's capacity utilization and minimize the queueing time. Load balancers can distribute traffic using several different strategies:

- Round robin: Servers are assigned in a repeating sequence, so that the next server assigned is guaranteed to be the least recently used.

- Least connections: Assigns the server currently handling the fewest number of requests.

- Consistent hashing: Similar to database sharding, the server can be assigned consistently based on IP address or URL.

Since load balancers must handle the traffic for the entire server pool, they need to be efficient and highly available. Depending on the chosen strategy and performance requirements, load balancers can operate at higher or lower network layers (HTTP vs. TCP/IP) or even be implemented in hardware. Engineering teams typically don't implement their own load balancers and instead use an industry-standard reverse proxy (like HAProxy or Nginx) to perform load balancing and other functions such as SSL termination and health checks. Most cloud providers also offer out-of-the-box load balancers, such as Amazon's Elastic Load Balancer (ELB).

When to use a load balancer

You should use a load balancer whenever you think the system you're designing would benefit from increased capacity or redundancy. Often load balancers sit right between external traffic and the application servers. In a microservice architecture, it's common to use load balancers in front of each internal service so that every part of the system can be scaled independently.

Be aware, load balancing cannot solve many scaling problems in system design. For example, an application can also succumb to database performance, algorithmic complexity, and other types of resource contention. Adding more web servers won't compensate for inefficient calculations, slow database queries, or unreliable third-party APIs. In these cases, it may be necessary to design a system that can process tasks asynchronously, such as a job queue (see the Web Crawler question for an example).

Load balancing is notably distinct from rate limiting, which is when traffic is intentionally throttled or dropped in order to prevent abuse by a particular user or organization.

Advantages of Load Balancers

- Scalability. Load balancers make it easier to scale up and down with demand by adding or removing backend servers.

- Reliability. Load balancers provide redundancy and can minimize downtime by automatically detecting and replacing unhealthy servers.

- Performance. By distributing the workload evenly across servers, load balancers can improve the average response time.

Considerations

- Bottlenecks. As scale increases, load balancers can themselves become a bottleneck or single point of failure, so multiple load balancers must be used to guarantee availability. DNS round robin can be used to balance traffic across different load balancers.

- User sessions. The same user's requests can be served from different backends unless the load balancer is configured otherwise. This could be problematic for applications that rely on session data that isn't shared across servers.

- Longer deploys. Deploying new server versions can take longer and require more machines since the load balancer needs to roll over traffic to the new servers and drain requests from the old machines.

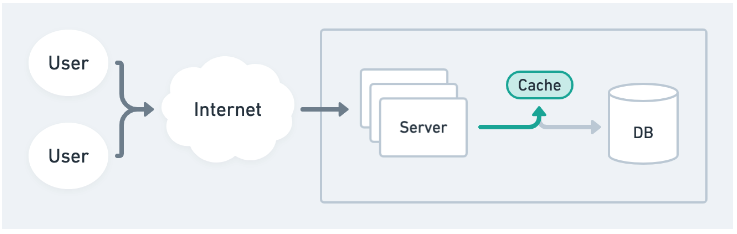

7 - How to answer questions about CDNs

Problem Background

When a client sends a request to an external server, that request often has to hop through many different routers before it can finally reach its destination (and then the response has to hop through many routers back). The number of these hops typically increases with the geographic distance between the client and the server, as well as the latency of the request. If a company hosting a website on a server, in an AWS datacenter in California (us-west-1), it may take ~100 ms to load for users in the US, but take 3-4 seconds to load for users in China. The good thing is that there are strategies to minimize this request latency for geographically far away users, and we should think about them when designing/building systems on a global scale.

What are CDNs?

CDNs (Content Distribution/Delivery Networks) are a modern and popular solution for minimizing request latency when fetching static assets from a server. An ideal CDN is composed of a group of servers that are spread out globally, such that no matter how far away a user is from your server (also called an origin server), they'll always be close to a CDN server. Then, instead of having to fetch static assets (images, videos, HTML/CSS/Javascript) from the origin server, users can fetch cached copies of these files from the CDN more quickly.

Note: Static assets can be pretty large in size (think of an HD wallpaper image), so by fetching that file from a nearby CDN server, we actually end up saving a lot of network bandwidth too.

Popular CDNs

Cloud providers typically offer their own CDN solutions, since it's so popular and easy to integrate with their other service offerings. Some popular CDNs include Cloudflare CDN, AWS Cloudfront, GCP Cloud CDN, Azure CDN, and Oracle CDN.

How do CDNs Work?

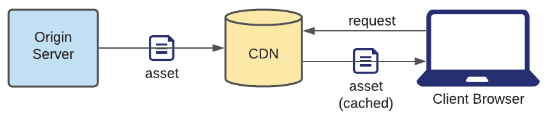

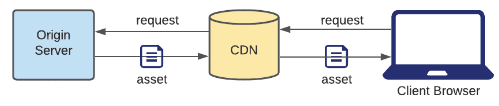

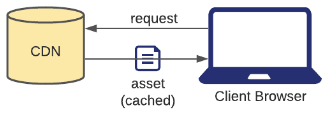

Like mentioned above, a CDN can be thought of as a globally distributed group of servers that cache static assets for your origin server. Every CDN server has its own local cache and they should all be in sync. There are two primary ways for a CDN cache to be populated, which creates the distinction between Push and Pull CDNs. In a Push CDN, it's the responsibility of the engineers to push new/updated files to the CDN, which would then propagate them to all of the CDN server caches. In a Pull CDN, the server cache is lazily updated: when a user sends a static asset request to the CDN server and it doesn't have it, it'll fetch the asset from the origin server, populate its cache with the asset, and then send the asset to the user.

Push CDN

The origin server sends the asset to the CDN, which stores it in its cache. The CDN never makes any requests to the origin server.

Pull CDN

If the CDN doesn't have the static asset in its cache, then it forwards the request to the origin server and then caches the new asset.

If the CDN has the asset in its cache, it returns the cached asset.

There are advantages and disadvantages to both approaches. In a Push CDN, it's more engineering work for the developers to make sure that CDN assets are up to date. Whenever an asset is updated/created, developers have to make sure to push it to the CDN, otherwise the client won't be able to fetch it. On the other hand, Pull CDNs require less maintenance, since the CDN will automatically fetch assets from the origin server that are not in its cache. The downside of Pull CDNs is that if they already have your asset cached, they won't know if you decide to update it or not, and to fetch this updated asset. So for some period of time, a Pull CDN's cache will become stale after assets are updated on the origin server. Another downside is that the first request to a Pull CDN will always take a while since it has to make a trip to the origin server.

Even with its disadvantages, Pull CDNs are still a lot more popular than Push CDNs, because they are much easier to maintain. There are also several ways to reduce the time that a static asset is stale for. Pull CDNs usually attach a timestamp to an asset when cached, and typically only cache the asset for up to 24 hours by default. If a user makes a request for an asset that's expired in the CDN cache, the CDN will re-fetch the asset from the origin server, and get an updated asset if there is one. Pull CDNs also usually support Cache-Control response headers, which offers more flexibility with regards to caching policy, so that cached assets can be re-fetched every five minutes, whenever there's a new release version, etc. Another solution is "cache busting", where you cache assets with a hash or etag that is unique compared to previous asset versions.

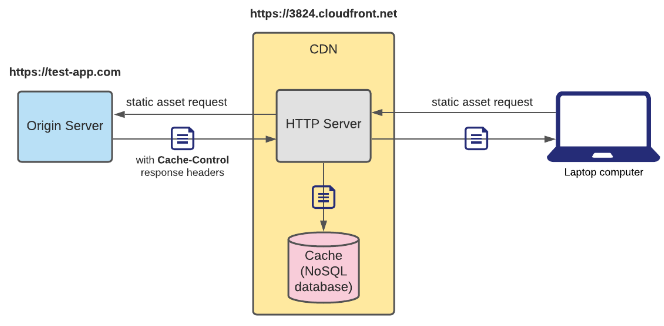

If a user is fetching the static asset image.png, they would fetch it at https://3284.cloudfront.net/image.png. If the CDN doesn't have it, then the CDN would fetch the asset from the origin server at https://test-app.com/image.png.

When not to use CDNs?

CDNs are generally a good service to add your system for reducing request latency (note, this is only for static files and not most API requests). However, there are some situations where you do not want to use CDNs. If your service's target users are in a specific region, then there won't be any benefit of using a CDN, as you can just host your origin servers there instead. CDNs are also not a good idea if the assets being served are dynamic and sensitive. You don't want to serve stale data for sensitive situations, such as when working with financial/government services.

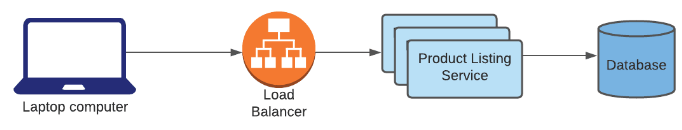

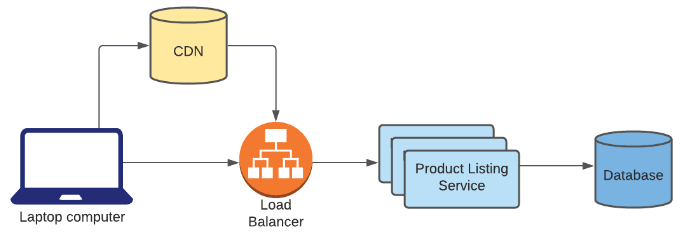

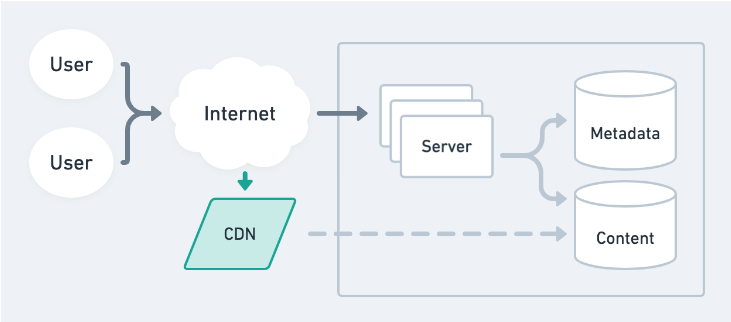

Exercise You're building Amazon's product listing service, which serves a collection of product metadata and images to online shoppers' browsers. Where would a CDN fit in the following design?

Answer

8 - APIs

An Application Programming Interface (API) is a way for different systems to talk to each other.

It's like a contract that says how one system can use another system's resources or functionality by sending requests and receiving responses.

A web API is a type of API that works between web servers and web browsers and typically uses a Hypertext Transfer Protocol (HTTP) to send and receive data.

Why do we need APIs?

Imagine that you own an international flight booking management company that maintains several databases, including flight information, ticket inventory, and ticket prices.

In a world without APIs, you would need to develop an application that allows individual customers and travel agencies to check flight availability and book tickets within your application.

However, developing such an application might be expensive and challenging to maintain globally. Furthermore, customers in many parts of the world might not even have access to your application.

Now, consider the world with the assistance of APIs to perform this business logic.

There are several benefits:

- Travel agencies can access provided APIs to aggregate data for their use, such as adjusting commissions, sending price change alerts, and tracking ticket prices.

- The booking management company can change internal services without affecting clients if the API interface remains unchanged.

- Other third parties can use the available public APIs to develop their business logic, which could increase ticket sales and revenue.

- The booking management company has precise permission controls and authentication methods provided by API gateways and/or the API itself using tokens or encryption.

Generally, an API is a contract between developers detailing the service or library's functionality.

When a client sends a request in a particular format, the API defines how the server should respond.

API developers must consider carefully what public API they offer before releasing it:

- APIs for third-party developers — APIs allow third-party developers to build applications that can securely interact with your application with your permission. Without APIs, developers would have to deal with the entire infrastructure of a service every time they wanted to access it.

- APIs for users — As a user, APIs save you from dealing with all of that infrastructure.

This simplification creates business opportunities and innovative ideas; for example, a third-party tool that uses public APIs to access Google Maps data for advertising, recommendations, and more.

In summary, APIs provide access to a server's resources while maintaining security and permission control. They simplify software development by providing a layer of abstraction between the server and the client.

API design is essential to any system architecture and is a common topic in system design interviews.

How APIs work

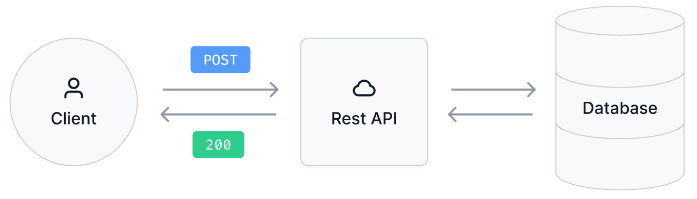

APIs deliver client requests and return responses via JavaScript Object Notation (JSON) or Extensible Markup Language (XML), usually over the Internet (web APIs). Each request-and-response cycle is an API call.

A request typically consists of a server endpoint Uniform Resource Locator (URL) and a request method, usually through HTTP. The request method indicates the desired API action. An HTTP response contains a status code, a header, and a response body.

Common status codes include:

200(OK)401(unauthorized user)404(URL not found)500(internal server error)

The response body varies depending on the HTTP request, which could be the server resource a client needs to access or any application-specific messages.

To streamline communication, APIs often converge on a few popular specifications to standardize information exchange. By far, the most common is REpresentational State Transfer (REST).

REST APIs

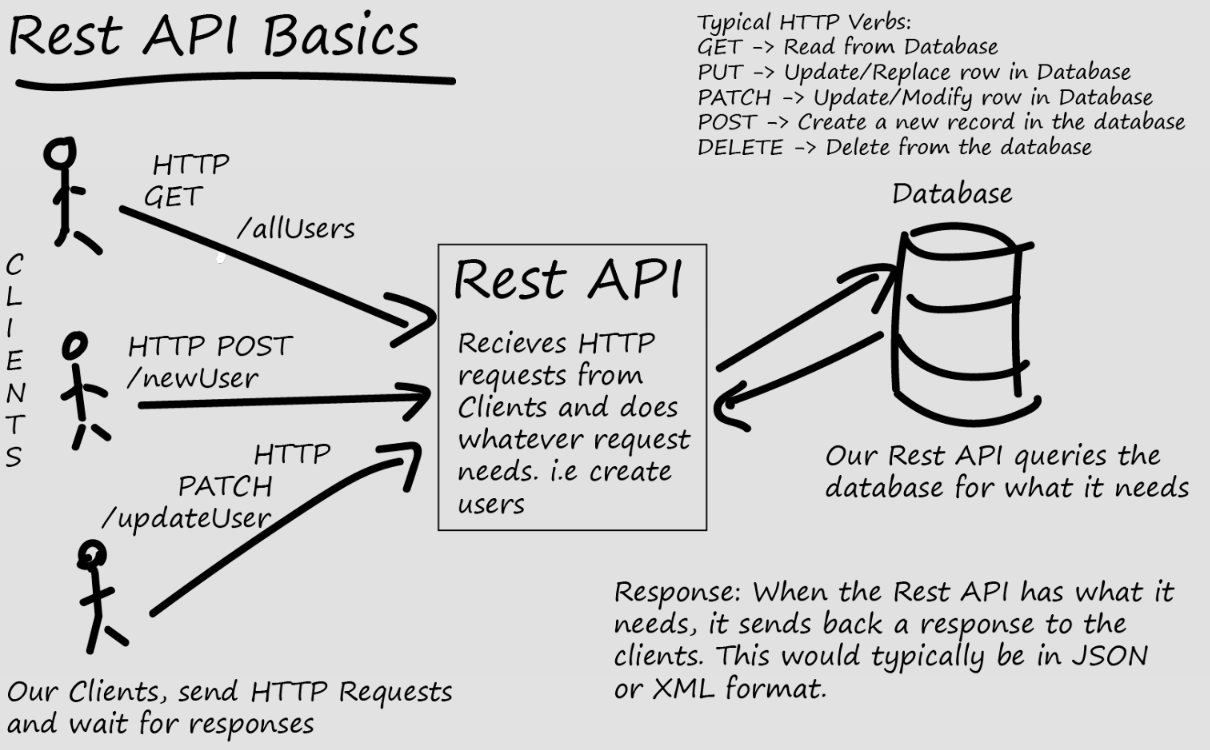

REpresentational State Transfer (REST) APIs focus on server resources and limit the set of methods to those based on HTTP methods that access resources.

The most common request methods are:

GET(to retrieve)POST(to create)PUT(to update)DELETE(to delete/remove)

REST APIs access resources through URLs, just like the URLs in your browser. The URLs are accompanied by a method that specifies how you want to interact with the resource.

REST APIs focus on resources rather than database entries. In many cases, these two are not identical.

For instance, creating a calendar event (a resource) can involve sending email invitations to attendees, creating events for each attendee, checking for conflicts, reserving meeting places, updating video conference schedules, and granting permissions to related documents.

A single calendar event might trigger updates from multiple services and databases.

Although REST APIs are among the most popular choices, a downside is that a client might have to deal with redundant data when making REST API calls.

For example, to fetch the name and members of a musical band, requesting the dedicated resources for that band would retrieve the name and its members (possibly from multiple endpoints) and also other information (such as its albums, founding year, and so on), depending on how the resources are organized by the server.

Learn more about REST API Basics:

Other popular APIs

Other than REST APIs, there are other popular APIs, such as Remote Procedure Call (RPC), GraphQL, and Simple Access Protocol (SOAP):

RPC

A requester selects a remote procedure to execute, serializes the parameters, and then sends the lightweight binary data to the server. The server decodes the message and executes the operation, sending the result back to the requester.

Its simplicity and light payloads have made it a de facto standard for many inter-service data exchanges on the backend.

GraphQL

GraphQL was developed to allow clients to execute precise queries and retrieve only the data they are interested in, typically from a graph database.

To achieve this process, servers need to predefine a schema that describes all possible queries and their return types. This reduces the server payload and offers the client a great amount of flexibility during query time.

However, performance can suffer when the client has too many nested fields in one request. Additionally, there is a steep learning curve that requires extensive knowledge.

Therefore, GraphQL users need to find a balance between its benefits and implementation costs.

SOAP

A precursor to REST. SOAP works like an envelope that contains a bulky, text-based message. It is much slower than binary messaging protocols, such as RPC.

However, the standardized text format and security features make enforcing legal contracts and authentication easy throughout the API's processing.

SOAP is a legacy protocol and is still heavily used in high-security data transmission scenarios, such as billing operations, booking, and payment systems.

API design patterns

When a Google search query returns thousands of URLs, how can we gracefully render the results to a client? API design patterns provide a set of design principles for building internal and public-facing APIs.

Pagination

One user experience design pattern uses pagination, where users can navigate between different search results pages using links, such as "next," "previous," and a list of page numbers.

How do we implement a search query API that supports pagination?

For simplicity, let's assume that Google stores the result of the queried word "Wikipedia" at "https://www.google.com/some_storage_path/wikipedia/*".

Our API request to fetch the search result for "Wikipedia" would look like this:

GET "[https://www.google.com/some_storage_path/wikipedia](https://www.google.com/storage/wikipedia/)?limit=20"

- The

limit=20parameter means that we only want to list the first 20 results on the first page. - If everything goes well, our API request receives a response with a

200OKstatus code and a response body containing the first 20 pages that match our search word "Wikipedia". - The response also contains a link to the next page that shows the following 20 available matching pages, and an empty link to the previous page.

- Then, we can send a second API request asking for the following 20 pages by specifying the offset of the search results.

Use the following GET request to retrieve the first page of results:

- The response body could contain the results for the first page, a valid link to the next page, and an empty previous page link. If we have exhausted all the result pages, the following page link will be empty.

- It is good design practice to add pagination from the start. Otherwise, a client that is unaware of the current

GETAPI that supports pagination could mistakenly believe they obtained the full result when in fact, they only obtained the very first page.

In addition to pagination as a solution to navigate between a list of pages, other design patterns include load more and infinite scrolling. Learn more about pagination vs. load more vs. infinite scrolling design patterns.

Long-running operations

When using the DELETE API, an appropriate return value depends on whether or not the DELETE method immediately removes the resource. If it does, the server should return an empty response body with an appropriate status code, such as 200 (OK) or 204 (No Content).

For example:

- If the object the client tries to delete is large and takes time to delete, the server can embed a long-running operation in the response body so that the client can later track the progress and receive the deletion result.

- When the

POSTAPI creates a large resource, the server could return a202status code forAcceptedand a response body indicating that the resource is not yet ready for use.

API Idempotency

A client may experience failures, such as timeouts, server crashes, or network interruptions, while trying to pay for a service. If the client retries the payment and is double-charged, it could be due to any of the following scenarios:

There are 3 potential scenarios when a payment request is made:

- The initial handshake between the client and server could fail, preventing the payment request from being sent. In this case, the client can retry the request and hope for success without risking double charging.

- The server receives the payment request but has not yet begun processing it when a failure occurs. The server sends an error code back to the client, and there is no change to the client's account.

- The server receives the payment request and successfully processes it or is processing it when a failure occurs. However, the failure happens before the server can return the

200status code to the client. In this scenario, the client may not realize that their account has already been charged and may initiate another payment, leading to double charging.

Overall, the first two scenarios are acceptable, but the last scenario is problematic and could result in unintentional double charging.

Possible solution

To address this problem, we need to develop an idempotent payment API. An idempotent API ensures that only the first call generates the expected result and subsequent calls are no-ops.

- Require clients to generate a unique key in the request header when initiating a request to the payment server.

- The key is sent to the server along with other payment-related information.

- The server stores the key and process status.

- If the client receives a failure, it can safely retry the request with the same key in the header, and the payment server can recognize this as a duplicate request and take appropriate action.

For example, if the previous failure interrupted an ongoing payment processing, the payment server might restore the state and continue processing.

Non-mutating APIs, such as GET, are generally idempotent.

Mutating APIs, such as PUT, update a resource's state. This means that executing the same PUT API multiple times updates the resource only once, while subsequent calls overwrite the resource with the same value. Therefore, PUT APIs are usually idempotent.

Similarly, the mutating API, DELETE, only takes effect during the first call, returning a 200 (OK) status, while subsequent calls return 404 (Not Found).

The last mutating API, POST, creates a new resource.

However, receiving multiple requests can cause servers to allocate different places for the new resource. Therefore, the POST API is usually not idempotent.

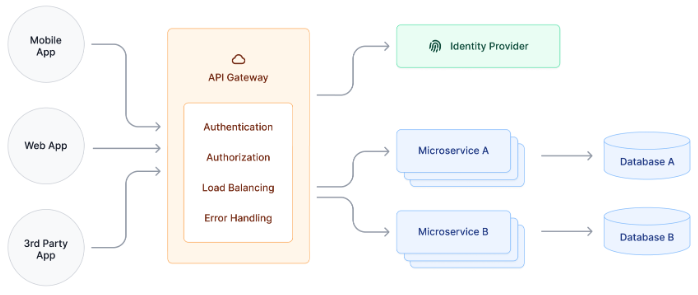

API gateways

Although APIs offer security to the systems on either end, they are susceptible to overuse or abuse. If you're concerned about this, you may want to implement an API gateway to collect requests and route them accordingly.

An API gateway is a reverse proxy that acts as a single entry point for microservices and back-end APIs.

All client requests are routed to the gateway, which directs them accordingly — to the API if it is available, or redirected if the request fails to meet security standards.

Generally, use cases for API gateways include user authentication, rate limiting, and billing if you're monetizing an API.

Learn more

- Netflix's Engineering Blog: Engineering Trade-Offs and The Netflix API Re-Architecture and Beyond REST (learn about the engineering team's experiments with microservices over the years)

- REST API response codes and error messages (IBM)

- Pagination vs. infinite scrolling vs. load more: Best practices on Oracle Help Center (Oracle)

9 - Understanding CAP theorem

The CAP theorem is an important database concept to understand in system design interviews. It's often not explicitly asked about, but understanding the theorem helps us narrow down a type of database to use based on the problem requirements. Modern databases are usually distributed and have multiple nodes over a network. Since network failures are inevitable, it's important to decide beforehand the behavior of nodes in a database, in the event that packets are dropped/lagged or a node becomes unresponsive.

Partition Tolerance and Theorem Definition

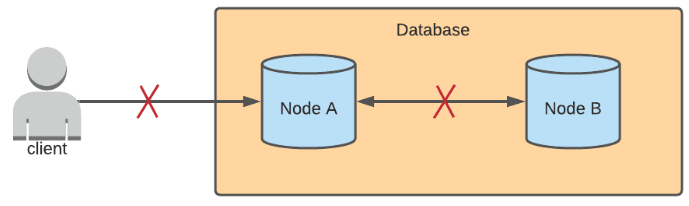

CAP stands for "Consistency", "Availability", and "Partition tolerance". A network partition is a (temporary) network failure between nodes. Partition tolerance means being able to keep the nodes in a distributed database running even when there are network partitions. The theorem states that, in a distributed database, you can only ensure consistency or availability in the case of a network partition.

Example Scenario

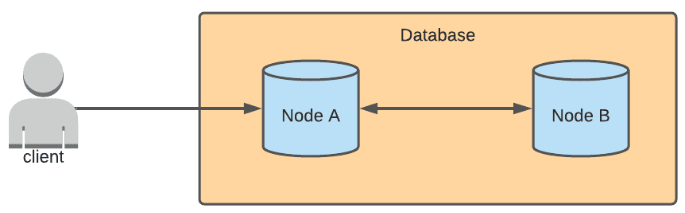

To better understand the situation with network partitions, we give an example scenario. In a multi-primary architecture, data can be written to multiple nodes. Let's pretend that there are two nodes in this example database. When a write is sent to Node A, Node A syncs the update with Node B, and any read requests sent to either of the two nodes will return the updated result.

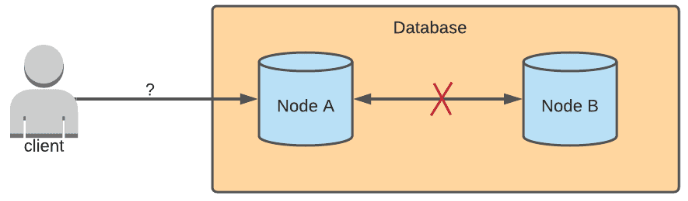

If there is a network partition between Node A and Node B, then updates cannot be synced. Node B wouldn't know that Node A received an update, and Node A wouldn't know if Node B received an update. The behavior of either node after receiving a write request depends on whether the database prioritizes consistency or availability.

Consistency

Consistency is the property that after a write is sent to a database, all read requests sent to any node should return that updated data. In the example scenario where there is a network partition, both Node A and Node B would reject any write requests sent to them. This would ensure that the state of the data on the two nodes are the same. Or else, only Node A would have the updated data, and Node B would have stale data.

Availability

In a database that prioritizes availability, it's OK to have inconsistent data across the nodes, where one node may contain stale data and another has the most updated data. Availability means that we prioritize nodes to successfully complete requests sent to them. Available databases also tend to have eventual consistency, which means that after some undetermined amount of time when the network partition is resolved, eventually, all nodes will sync with each other to have the same, updated data. In this case, Node A will receive the update first, and after some time, Node B will be updated as well.

When should Consistency or Availability be prioritized?

If you're working with data that you know needs to be up-to-date, then it may be better to store it in a database that prioritizes consistency over availability. On the other hand, if it's fine that the queried data can be slightly out-of-date, then storing it in an available database may be the better choice. This may seem kind of vague, but check out the examples at the end of this post for a better understanding.

Read Requests

Notice that only write requests were discussed above. This is because read requests don't affect the state of the data, and don't require re-syncing between nodes. Read requests are typically fine during network partitions for both consistent and available databases.

SQL Databases

SQL databases like MySQL, PostgreSQL, Microsoft SQL Server, Oracle, etc, usually prioritize consistency. Primary-secondary replication is a common distributed architecture in SQL databases, and in the event of a primary becoming unavailable, the role of primary would failover to one of the replica nodes. During this failover process and electing a new primary node, the database cannot be written to, so that consistency is preserved.

Popular Databases that Prioritize Consistency vs. Availability

| Prioritize Consistency | Prioritize Availability |

|---|---|

| SQL databases | Cassandra |

| MongoDB | AWS DynamoDB |

| Redis | CouchDB |

| Google BigTable | |

| HBase |

Does Consistency in CAP mean Strong Consistency?

In a strongly consistent database, if data is written and then immediately read after, it should always return the updated data. The problem is that in a distributed system, network communication doesn’t happen instantly, since nodes/servers are physically separated from each other and transferring data takes >0 time. This is why it’s not possible to have a perfectly, strongly consistent distributed database. In the real world, when we talk about databases that prioritize consistency, we usually refer to databases that are eventually consistent, with a very short, unnoticeable lag time between nodes.

I’ve heard the CAP Theorem defined differently as "Choose 2 of the 3, Consistency, Availability or Partition Tolerance"?

This definition is incorrect. You can only choose a database to prioritize consistency or availability in the case of a network partition. You can’t choose to forfeit the "P" in CAP, because network partitions happen all the time in the real world. A database that is not partition tolerant would mean that it’s unresponsive during network failures, and could not be available either.

Short Exercises

Question 1: You’re building a product listing app, similar to Amazon’s, where shoppers can browse a catalog of products and purchase them if they’re in-stock. You want to make sure that products are actually in-stock, or then you’ll have to refund shoppers for unavailable items and they get really angry. Should the distributed database you choose to store product information prioritize consistency or availability?

Answer: Consistency. In the case of a network partition and nodes cannot sync with each other, you’d rather not allow any shoppers to purchase any products (and write to the database) than have two or more shoppers purchase the same product (write on different nodes) when there is only one item left. An available database would allow for the latter, and at least one of the shoppers would have to have their order canceled and refunded.

Question 2: You’re still building the same product listing app, but the PMs have decided, through much analysis, that it’s more cost effective to refund shoppers for unavailable items than to show that the products are out-of-stock during a network failure. Should the distributed database you choose still prioritize consistency or availability?

Answer: Availability. Canceling and refunding the order of a shopper would be preferable to not allowing any shoppers to purchase the product at all during a network failure.

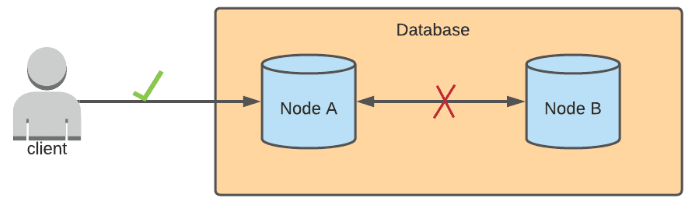

10 - Caching strategies

Caching is a data storage technique that is ubiquitous throughout computer systems and plays an important role in designing scalable Internet applications. A cache is any data store that can store and retrieve data quickly for future use, enabling faster response times and decreasing load on other parts of your system.

Why We Need Caching

Without caching, computers and the Internet would be impossibly slow due to the access time of retrieving data at every step. Caches take advantage of a principle called locality to store data closer to where it is likely to be needed. This principle is at work even within your computer: as you browse the web, your web browser caches images and data temporarily on your hard drive; data from your hard drive is cached in memory, etc.

In large-scale Internet applications, caching can similarly make data retrieval more efficient by reducing repeated calculations, database queries, or requests to other services. This frees up resources to be used for other operations and serve more traffic volume. In a looser sense, caching can also refer to the storage of pre-computed data that would otherwise be difficult to serve on demand, like personalized newsfeeds or analytics reports.

How Caching Works

Caching can be implemented in many different ways in modern systems:

In-memory application cache. Storing data directly in the application's memory is a fast and simple option, but each server must maintain its own cache, which increases overall memory demands and cost of the system.

Distributed in-memory cache. A separate caching server such as Memcached or Redis can be used to store data so that multiple servers can read and write from the same cache.

Database cache. A traditional database can still be used to cache commonly requested data or store the results of pre-computed operations for retrieval later.

File system cache. A file system can also be used to store commonly accessed files; CDNs are one example of a distributed file system that take advantage of geographic locality.

Caching Policies

One question you may be wondering is, "If caching is so great, why not cache everything?"

There are two main reasons: cost and accuracy. Since caching is meant to be fast and temporary, it is often implemented with more expensive and less resilient hardware than other types of storage. For this reason, caches are typically smaller than the primary data storage system and must selectively choose which data to keep and which to remove (or evict). This selection process, known as a caching policy, helps the cache free up space for the most relevant data that will be needed in the future.

Here are some common examples of caching policies:

- First-in first-out (FIFO). Similar to a queue, this policy evicts whichever item was added longest ago and keeps the most recently added items.

- Least recently used (LRU). This policy keeps track of when items were last retrieved and evicts whichever item has not been accessed recently. See the related programming question.

- Least frequently used (LFU). This policy keeps track of how often items are retrieved and evicts whichever item is used least frequently, regardless of when it was last accessed.

In addition, caches can quickly become out-of-date from the true state of the system. This speed/accuracy tradeoff can be reduced by implementing an eviction policy — most commonly by limiting the time-to-live (TTL) of each cache entry, and updating the cache entry before or after database writes (write-through vs. write-behind).

Cache Coherence

One final consideration is how to ensure appropriate cache consistency given our requirements.

- A write-through cache updates the cache and main memory simultaneously, meaning there's no chance either can go out of data. It also simplifies the system.

- In a write-behind cache, memory updates occur asynchronously. This may lead to inconsistency, but it speeds things up significantly.

Another option is called cache-aside or lazy loading where data is loaded into the cache on-demand. First, the application checks the cache for requested data. If it's not there (also called a "cache miss") the application fetches the data from the data store and updates the cache. This simple strategy keeps the data stored in the cache relatively "relevant" - as long as you choose a cache eviction policy and limited TTL combination that matches data access patterns.

| Cache Policy | Pros | Cons |

|---|---|---|

| Write-through | Ensures consistency. A good option if your application requires more reads than writes. | Writes are slow. |

| Write-behind | Speed (both reads and writes.) | Risky. You're more susceptible to issues with consistency and you may lose data during a crash. |

| Cache-aside or Lazy Loading | Simplicity and reliability. Also, only requested data is cached. | Cache misses cause delays. May result in stale data. |

Further Reading

- In 2013, Facebook engineers published Scaling Memcache at Facebook, a now-famous paper detailing improvements to memcached. The concept of leases, a Facebook innovation addressing the speed/accuracy problem with caching, is introduced here.

- This blog series written by Stack Overflow's Chief Architect on the company's approach to caching (and many other architectural topics) is both informative and fun.

Concept Check

There are 3 big decisions you'll have to make when designing a cache.

- How big should the cache be?

- How should I evict cached data?

- Which expiration policy should I choose?

Summarize a few general data points you'd consider when making your decisions.

Full Solution

How big? At a high level, consider how latency reduction will impact your users vs. the added cost of increased complexity and expensive hardware. Then consider anticipated request volume and the size and distribution of cached objects. From there, design your cache such that it meets your cache hit rate targets.

Eviction? This will depend on your application, but LRU is by far the most common option. It's simple to implement and works best under most circumstances.

Expiration? A common, simple approach is to set an absolute TTL based on data usage patterns. Consider the impact of stale data on your users and how quickly data changes. You likely won't get this right the first time.

11 - SQL vs. NoSQL? How to choose a database

In system design interviews, you will often have to choose what database to use, and these databases are split into SQL and NoSQL types. SQL and NoSQL databases each have their own strengths (+) and weaknesses (-), and should be chosen appropriately based on the use case.

SQL Databases

- (+) Relationships SQL databases, also known as relational databases, allows easy querying on relationships between data among multiple tables. These relationships are defined using primary and foreign key columns. Table relationships are really important for effectively organizing and structuring a lot of different data. SQL, as a query language, is also both very powerful for fetching data and easy to learn.

- (+) Structured Data Data is structured using SQL schemas that define the columns and tables in a database. The data model and format of the data must be known before storing anything, which reduces room for potential error.

- (+) ACID Another benefit of SQL databases is that they are ACID (Atomicity, Consistency, Isolation, Durability) compliant, and they ensure that by supporting transactions. SQL transactions are groups of statements that are executed atomically. This means that they are either all executed, or not executed at all if any statement in the group fails. A simple example of a SQL transaction is written below:

BEGIN TRANSACTION transfer_money_1922;

UPDATE balances SET balance = balance - 25 WHERE account_id = 10;

UPDATE balances SET balance = balance + 25 WHERE account_id = 155;

COMMIT TRANSACTION;

$25 is being transferred from one account balance to another.

- (-) Structured Data Since columns and tables have to be created ahead of time, SQL databases take more time to set up compared to NoSQL databases. SQL databases are also not effective for storing and querying unstructured data (where the format is unknown).

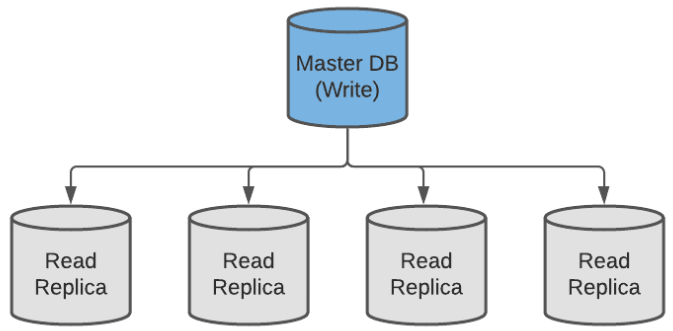

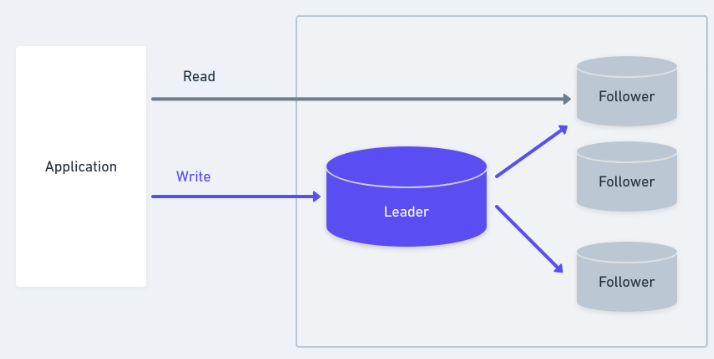

- (-) Difficult to Scale Because of the relational nature of SQL databases, they are difficult to scale horizontally. For read-heavy systems, it’s straightforward to provision multiple read-only replicas (with leader-follower replication), but for write-heavy systems, the only option oftentimes is to vertically scale the database up, which is generally more expensive than provisioning additional servers.

Leader-Follower Replication

Related Note 1: By increasing the number of read replicas, a trade-off is made between consistency and availability. Having more read servers leads to higher availability, but in turn, sacrifices data consistency (provided that the updates are asynchronous) since there is a higher chance of accessing stale data. This follows the CAP theorem, which will be discussed more as a separate topic. Related Note 2: It’s not impossible to horizontally scale write-heavy SQL databases, looking at Google Spanner and CockroachDB, but it’s a very challenging problem and makes for a highly complex database architecture. Examples of Popular SQL databases: MySQL, PostgreSQL, Microsoft SQL Server, Oracle, CockroachDB.

NoSQL Databases

- (+) Unstructured Data NoSQL databases do not support table relationships, and data is usually stored in documents or as key-value pairs. This means that NoSQL databases are more flexible, simpler to set up and a better choice for storing unstructured data.