MIT Missing Semester (2020)

1 - Course overview + the shell

Introduction to the shell

The shell is going to be one of the primary ways that you interact with your computer once you want to do more things than what the visual interfaces you might be used to allow you to do. The visual interfaces are sort of limited in what they allow you to do because you can only do the things that there are buttons for, sliders for, input fields for, etc.

Often these textual tools are built to be both composable with one another but also to have tons of different ways to combine them or ways to program or automate them. The shell is the place you will do most of this work.

Terminals are windows that allow you to display shells. And there are quite a few different types of shells but the most common of which is bash. (See "The Terminal" note following this note for more on what terminals are and what the Terminal application on a Macintosh is specifically.)

When you open your terminal, you will be greeted with a single line: the shell prompt (of whichever shell you have chosen to be your default shell). This is where you will tell the shell what you want it to do. You can customize this prompt a lot and will likely look different from one developer to the next. This article goes over how to customize your macOS terminal and has some ideas. We're not going to go over how to customize your shell right now but how to get your shell to do useful things.

The shell is really the main textual interface you have with your computer. To use your shell, first launch your terminal. You will then see a prompt that often looks something like

DWF-MBP:~$

This is the main textual interface to the shell. At this prompt, you can type a command, which will then be interpreted by the shell. As noted in [3], Mac OS X comes with over 1,000 commands for file manipulation,

text editing, printing, mathematics, computer programming,

typesetting, networking...you name it. A typical command is

run in a shell by typing its program name, followed by options

and arguments, like wc -l myfile. Commands are often relatively straightforward things by themselves. Usually a command will be used to execute a program with options and arguments as illustrated above with the wc program.

For example, one program you can execute without any arguments at all is the date program:

date

# Fri Dec 11 06:52:09 CST 2020

Unsurprisingly, it gives you the date and time. You can also execute programs with arguments to modify the behavior of the program. For example, there's a program called echo that just prints out the arguments that you give it where arguments are just whitespace-separated strings that follow the program name. So we can have something like the following:

echo hello

# hello

Probably not all that surprising but this is the very basics of arguments with programs. Since arguments are whitespace-separated, it makes sense we would need some way of representing a single argument we want to use but that has more than one word. You can do this with single or double quotes as well as escaping the space:

echo 'Two Words'

echo "Two Words"

echo Two\ Words

# Two Words

The difference between single quotes and double quotes will come up when we start talking aboutbash scripting.

The terminal

The discussion that follows is largely adapted from [2].

What exactly is "The Terminal"? In terms of Macintosh computers, the Terminal is an application that runs commands. Inside each Terminal window is a special program running called a shell, and the shell does four simple things:

- It displays a prompt in the Terminal window, waiting for you to type a command and press Enter.

- It reads your command and interprets any special symbols you typed.

- It runs or executes the command, automatically locating any necessary programs.

- It prints the output, if any, in the Terminal window.

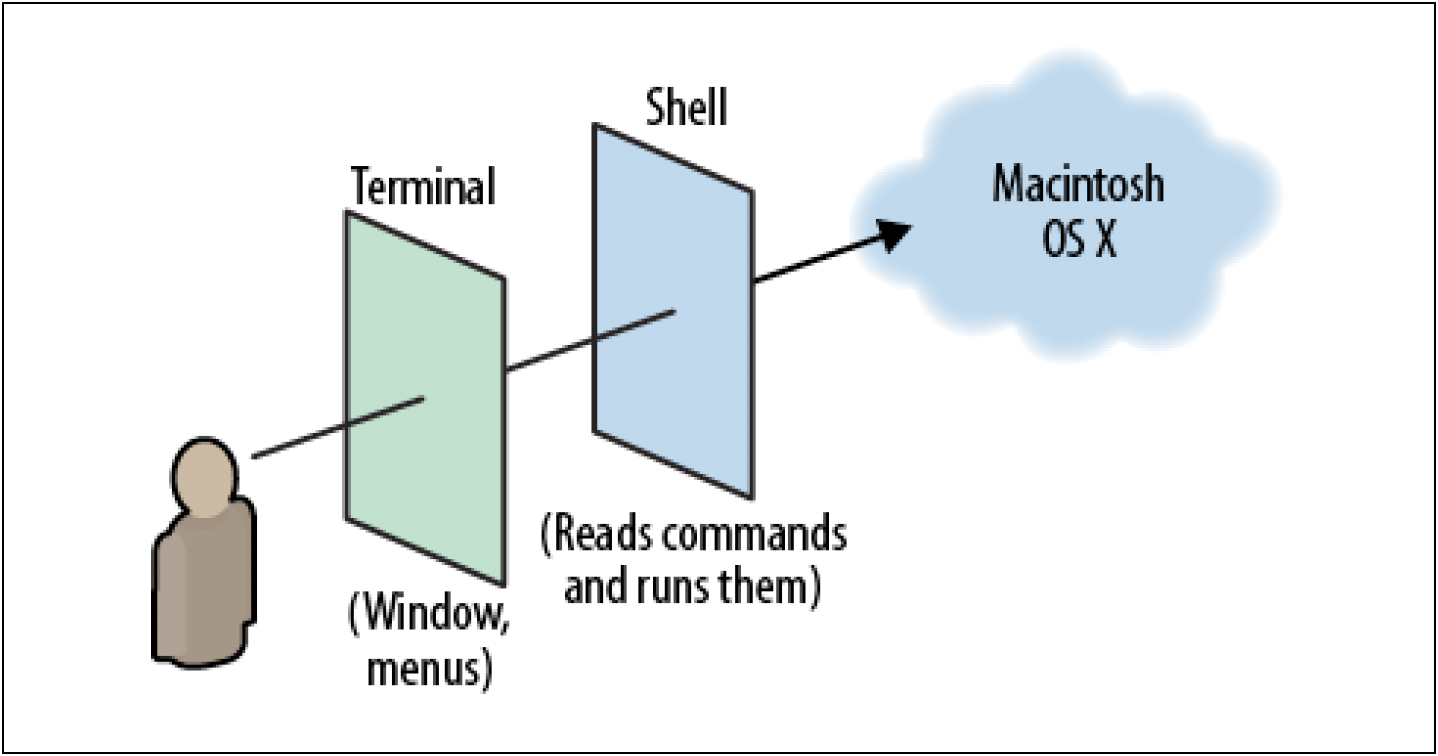

The Terminal's job is merely to open windows and manage shells. Using the Terminal, you can resize the windows, change their colors and fonts, and perform copy and paste operations. But it's the shell that is doing the real work of reading and running commands. The following figure illustrates how the Terminal and shell work together:

When you peer into a Terminal window, you are viewing a shell, which in turn interacts with your Macintosh.

In a sense, the Terminal is almost a GUI for your shell. And it is nice to note that, at least on a Macintosh, Terminal.app and bash is only the default "Terminal-Shell" pairing even though there are several alternatives for both. For example, as noted on Slant, there are several terminal emulators for the Mac such as

- Terminal.app (comes preinstalled with your Mac)

- iTerm2: iTerm2 is a replacement for Terminal and the successor to iTerm. It works on Macs with macOS 10.14 or newer. iTerm2 brings the terminal into the modern age with features you never knew you always wanted (e.g., split panes, hotkey window, search, autocomplete, copy mode, etc.). [...]

- Alacritty: Alacritty is a modern terminal emulator that comes with sensible defaults, but allows for extensive configuration. By integrating with other applications, rather than reimplementing their functionality, it manages to provide a flexible set of features with high performance (e.g., Vi mode, hints, etc.). The supported platforms currently consist of BSD, Linux, macOS and Windows. [...]

- kitty: The fast, featureful, GPU based terminal emulator. Offloads rendering to the GPU for lower system load and buttery smooth scrolling. Uses threaded rendering to minimize input latency. Supports all modern terminal features: graphics (images), unicode, true-color, OpenType ligatures, mouse protocol, hyperlinks, focus tracking, bracketed paste and several new terminal protocol extensions. [...]

- Hyper: Hyper is an Electron-based terminal. Built on HTML/CSS/JS. Fully extensible. Install themes and plugins from the command line. [...]

- ... and many many more ...

while there are also several UNIX shell options such as

-bash (default shell on virtually every UNIX system)

- zsh: Zsh is a shell designed for interactive use, although it is also a powerful scripting language. Many of the useful features ofbash, ksh, and tcsh were incorporated into zsh; many original features were added. [...]

- fish: fish is a smart and user-friendly command line shell for Linux, macOS, and the rest of the family. Some highlights: autosuggestions (fish suggests commands as you type based on history and completions, just like a web browser), glorious VGA color (fish supports 24 bit true color, the state of the art in terminal technology), sane scripting (fish is fully scriptable, and its syntax is simple, clean, and consistent), web-based configuration (for those lucky few with a graphical computer, you can set your colors and view functions, variables, and history all from a web page), man page completions (other shells support programmable completions, but only fish generates them automatically by parsing your installed man pages), and works out of the box (fish will delight you with features like tab completions and syntax highlighting that just work, with nothing new to learn or configure). [...]

- dash: DASH is a POSIX-compliant implementation of /bin/sh that aims to be as small as possible. It does this without sacrificing speed where possible. In fact, it is significantly faster thanbash (the GNU Bourne-Again SHell) for most tasks. [...]

- xonsh: Xonsh is a Python-powered, cross-platform, Unix-gazing shell language and command prompt. The language is a superset of Python 3.5+ with additional shell primitives that you are used to frombash and IPython. It works on all major systems including Linux, OSX, and Windows. Xonsh is meant for the daily use of experts and novices. [...]

- ... and many many more ...

With a Mac, Terminal.app andbash are just the defaults that get shipped to you. But you have numerous options to choose from out there for your own use on a day-to-day basis, butbash is a good choice regardless given its very wide usage (i.e., it is very portable).

Note: If you run bash --version and you get something like

GNUbash, version 3.2.57(1)-release (x86_64-apple-darwin18)

Copyright (C) 2007 Free Software Foundation, Inc.

then you should consider updating your version ofbash since v3.2 dates back to 2007 whilebash is currently (at time of writing) v5.1.4. This article can help with the update process.

The PATH

One thing you may wonder is how the shell knows what program you want it to execute. For example, when we execute the date program, how does the shell know where to find the date program? If we execute echo hello, then how does the shell know where the echo program is to feed hello as an argument to? How does the shell find these programs and know what these programs are supposed to do?

The answer is that your computer has a bunch of built in programs that come with the machine. So your computer will typically ship with some kind of terminal application (e.g., Terminal.app) and some kind of shell (e.g.,bash), but it will also ship with a bunch of terminal-centric applications ready to go. And these applications are stored on your file system. And your shell has a way to determine where a program is located. It basically has a way to search for programs. It does this through a so-called environment variable. An environment variable is a variable like you might be used to for programming languages. It turns out that the shell, and the bourne again shell in particular, is really a programming language. The shell prompt you are given when you open a terminal window is not just able to run a program with arguments--you can also do things like while loops, for loops, conditionals, define functions, etc. All these things you can do in the shell. This is the kind of thing you do when shell scripting.

For now though, let's just look at this particular environment variable: In the context of the shell, environment variables are variables that are set whenever you start your shell. They're not variables you have to set everytime you run your shell. There are a bunch of these environment variables that are set to things like: where is your home directory, what is your username, etc. There's also one that is absolutely critical for all of this: the $PATH variable (you can see all of your shell's environment variables by running printenv):

$ echo $PATH

/Users/danielfarlow/.pyenv/shims:/Users/danielfarlow/.nvm/versions/node/v14.15.0/bin:/Users/danielfarlow/opt/anaconda3/bin:/Users/danielfarlow/opt/anaconda3/condabin:/Users/danielfarlow/.local/bin:/Library/Frameworks/Python.framework/Versions/3.7/bin:/usr/local/bin:/usr/bin:/bin:/usr/sbin:/sbin:/Library/TeX/texbin:/Users/danielfarlow/.pyenv/shims:/Users/danielfarlow/.nvm/versions/node/v14.15.0/bin:/usr/local/mysql/bin:.:/Applications/Visual Studio Code.app/Contents/Resources/app/bin

The above shows that echo $PATH inbash will show us all the paths on our machine that the shell will search for programs. Worth noting is that the output is essentially a colon-separated list, which may be kind of long and hard to read. But the essential point is that whenver you type the name of a program inbash, your shell will search the list of paths in the $PATH variable. It will look through each directory for a program or file whose name matches the command you tried to run. So if you run date or echo in thebash shell, then your shell will search every directory in $PATH until it finds a program named date or echo. And then it's going to run it if it finds a match. If we want to know which one it actually runs (e.g., maybe you have two echo programs), then you can run the which program. For example:

$ which echo

/bin/echo

This tells us that if we were to try to run a program named echo, then our shell would run the echo program from the /bin/echo directory. It's worth pausing for a moment to talk about what paths are. Paths are a way to name the location of a file on your computer. On Linux and macOS, these paths are separated by forward slashes: /. When you see something like /bin/echo, the first / indicates that we are at the root; that is, we are at the top of the file system. On Windows, paths like this are typically separated by backslashes instead. And while on Linux and macOS everything lives under the root namespace (so all absolute paths start with a slash) in Windows there is one root for every partition. So you may see things like C:\ or D:\. So Windows has sort of separate file system hierarchies for each drive that you have. But in Linux and macOS everything is mounted under one namespace (i.e., the root namespace).

On the topic of paths, what might one mean by the term absolute path? Absolute paths are paths that fully determine the location of a file. So something like /bin/echo is talking about a specific echo file and we are getting the full path to this file since the path begins with only a slash. But there are also things known as relative paths. A relative path, as its name implies, is relative to where you are. And the way you find out where you currently are is by typing pwd.

pwd

/Volumes/DEVFARLOW/development-and-engineering/webdev-courses/mit/missing-semester

This will print out the current path you are in. Notice that the path printed out by pwd is itself an absolute path. But we can change the current working directory to something else in relation to the directory we are presently working in. That is, something like cd .. will take us up one level in relation to whatever directory was printed out with pwd.

There are also a couple of "special directories" that exist, namely . and .., where . means the current directory (the directory printed out by pwd) while .. means the parent directory of the current directory. So this is a way you can easily navigate around the system. We can use . to navigate into folders relative to where we currently are: cd ./somesubfolder.

In general, when you run a program, that program will run on the current working directory. At least by default. This is really handy because this means we do not have to give full paths for things. We can just use the names for the files that are in the directory we are currently in. One thing useful for finding out which files exist in the directory you are in is the ls command--this will list the files in the current directory.

It is useful to know that ~ always expands to your home directory. So doing something like cd ~ will take you to your home directory. And you can easily change into directories relative to your home directory like so: cd ~/Desktop. Another useful thing to know is cd -. This effectively lets you toggle between two directories--it takes you to the directory you were previously in.

In the case of many programs, such as ls or cd, there may be arguments you do not know about. So far we have not done much other than provide paths. But how do you even discover that you can give a path to ls in the first place? Well, most programs take what are known as "arguments" like flags and options. These are things that usually start with a dash. One of the handiest of these is --help (see this link for why ls --help results in an error on macOS; short version: try man ls for something similar).

Something helpful is the -l flag when using ls (to "use a long listing format") which is maybe not helpful in and of itself. But let's see what the output might look like:

ls -l

-rw-r--r-- 1 danielfarlow staff 15 Nov 22 22:09 README.md

-rwxrwxrwx@ 1 danielfarlow staff 9478242 Nov 14 10:38 Structure and Interpretation of Computer Programs - Abelson and Sussman (1996) [2nd Ed.].pdf

drwxr-xr-x 11 danielfarlow staff 374 Nov 27 17:05 book-work

drwxr-xr-x 23 danielfarlow staff 782 Nov 5 11:45 code-from-book

drwxr-xr-x 5 danielfarlow staff 170 Nov 17 13:14 official-course-archive

drwxr-xr-x 6 danielfarlow staff 204 Nov 17 13:07 official-exams

drwxr-xr-x@ 33 danielfarlow staff 1122 Nov 14 11:17 official-lecture-notes

drwxr-xr-x 9 danielfarlow staff 306 Nov 17 13:12 official-projects

drwxr-xr-x 3 danielfarlow staff 102 Nov 16 10:42 official-video-recordings

drwxr-xr-x 20 danielfarlow staff 680 Nov 5 11:25 programming-assignments

-rw-r--r-- 1 danielfarlow staff 19 Dec 3 22:56 scheme-sandbox.scm

drwxr-xr-x 4 danielfarlow staff 136 Nov 5 11:47 sicp-js

-rw-r--r-- 1 danielfarlow staff 2267 Dec 10 17:34 temp.html

drwxr-xr-x 3 danielfarlow staff 102 Nov 29 10:22 uc-berkeley-resources

You can see the beginning d in the leftmost column indicates the item is a directory while those with - are not. The following letters after that indicate the permissions that are set for that file. So in some cases you may not be able to change into a directory or read a file or any other number of permission-related tasks. This all has to do with permissions!

The way to read this information is to note that the first group of three character indicates the permissions that are set for the owner of the file (i.e., danielfarlow as shown above). The second group of three characters displays the permissions for the group that owns the file or directory--above, we see all the files are owned by the staff group. The final group of three characters displays the list of permissions for everyone else. So anyone who is not a user owner or a group owner. You will get a different list of permissions and the like if, for example, you visit the root directory:

ls -l

drwxrwxr-x+ 115 root admin 3680 Nov 29 15:24 Applications

drwxr-xr-x+ 67 root wheel 2144 Oct 29 17:05 Library

drwxr-xr-x 2 root wheel 64 Aug 17 2018 Network

drwxr-xr-x@ 5 root wheel 160 Jan 15 2019 System

drwxr-xr-x 5 root admin 160 Oct 7 2019 Users

drwxr-xr-x+ 6 root wheel 192 Dec 11 11:41 Volumes

drwxr-xr-x@ 37 root wheel 1184 Nov 12 15:40 bin

drwxrwxr-t 2 root admin 64 Aug 17 2018 cores

dr-xr-xr-x 3 root wheel 4614 Dec 11 05:34 dev

lrwxr-xr-x@ 1 root wheel 11 Jan 15 2019 etc -> private/etc

dr-xr-xr-x 2 root wheel 1 Dec 11 09:20 home

-rw-r--r-- 1 root wheel 313 Aug 17 2018 installer.failurerequests

dr-xr-xr-x 2 root wheel 1 Dec 11 09:20 net

drwxr-xr-x 6 root wheel 192 Jan 15 2019 private

drwxr-xr-x@ 64 root wheel 2048 Nov 12 15:40 sbin

lrwxr-xr-x@ 1 root wheel 11 Jan 15 2019 tmp -> private/tmp

drwxr-xr-x@ 9 root wheel 288 Jan 15 2019 usr

lrwxr-xr-x@ 1 root wheel 11 Jan 15 2019 var -> private/var

The permissions here are a bit more interesting. You'll first notice everything is owned by the user root. We'll get back to what the root user is before long, but everything is a bit more interesting here. You'll notice groups like admin and wheel.

The character group rwx means (r)ead, (w)rite, and e(x)ecute; that is, directory and file access rights for user, group, and everyone else are indicated by groups of three characters each where something like rwx means the accessor has read, write, and execute permissions, whereas something like r-x means the accessor has read and execute permission but not write permission.

It's worth noting that what these things really mean differs for files and for directories. For files it's pretty straightforward:

r: If you have read permissions on a file, then you can read its contents.w: If you have write permissions on a file, then you can save the file, add more to it, replace it entirely, etc.x: If you have execute permissons on a file, then you are allowed to execute that file.

For directories, these permissions are a bit different.

r: Are you allowed to see which files are inside the directory? So think ofrfor a directory as "list"; that is, you will not be able to list off the contents of a directory unless you have the read permission.w: Are you allowed to rename, create, or remove files within a directory? If so, then you must have the write permission. So this is kind of intuitive but notice that this means that if you have write permissions on a file but you do not have write permissions on its directory, then you cannot delete the file. You can empty it. But you cannot delete it. Because that would require writing to the directory itself.x: Execute on directories is something that trips people up a lot. Execute on a directory is what's known as "search". While this is not a terribly useful name, what this means is: Are you allowed to enter this directory? If you want to get to a file, whether to open it or read it or write it or whatever you want to do (basically tocdinto a directory), then you must have the execute permission on all parent directories of the directory as well as the directory itself. For example, for me to access a file inside/usr/bin, such as/usr/bin/echo, then we must have the execute permission on/,usr, andbin. If we do not have all those execute permissions, then we will not be allowed access to theechofile in/usr/binbecause we won't be able to enter the directories along the way.

There are other bits you might come across such as s, t, l, @, etc.

Another command that is helpful to know about is the mv command which takes two arguments, the path to the file you want to move and the path to where you want the file to be moved. There's also the cp command which is very similar to the mv command except with cp you copy a file.

You can also remove files with rm. By default, removal is not recursive; hence, you cannot remove all files from a directory just using rm. You can pass the -r flag which lets you do a recursive remove and then pass a path where the effect will be the removal of everything below the specified path. There's also the rmdir command which lets you remove a directory but only if the directory is empty. Finally, there's mkdir which lets you make a directory.

If you ever want more information about how any of these commands work, then there's a really handy command for that as well: man. This stands for "manual pages". This program takes as an argument the name of another program and gives you its manual page. So we can do something like man ls to see more about the ls program and its usage. We can quit the manual page program by simply entering q.

Combining programs

So far we have largely talked about programs in isolation. But where much of the power of the shell really comes through is when you start combining different programs. So instead of just running cd or running ls, we may want to chain multiple programs together. The way we can do this is by means of the notion of streams that the shell gives us.

Every program by default has essentially two primary streams, the input stream (i.e., stdin) and the output stream (i.e., stdout). By default, the input stream is your keyboard (essentially the window you type into opened by your terminal). Whatever you type into your terminal is going to end up in your program. And it has a default output stream which is, whenever the program prints something, it is going to print to that stream. And by default the standard output stream is your terminal. This is why when we type echo hello it gets printed back to our terminal.

But the shell gives you a way to rewire these streams (i.e., to change where the input and output of a program are pointed). The most straightforward way you do this is using the angle bracket signs (i.e. < and >). An example will clearly demonstrate their uses.

echo hello > hello.txt

The above will effectively take the output of echo hello and store the content in the file hello.txt. And because we implicitly gave a relative path, this will construct the file hello.txt in the current directory called hello.txt. In theory, the contents of the hello.txt file after invoking this command should just be the string hello. If you execute this, then notice that nothing gets printed to the output. Previously, when we just ran echo hello, we saw hello printed to the terminal. Now, that hello has gone into a file called hello.txt. And we can verify this using the cat program (which prints the contents of a file) like so:

cat hello.txt

hello

But cat is also a program that supports this kind of rewiring. So cat, whose default behavior is to duplicate its input to its output, can be used like so:

cat < hello.txt

hello

We are communicating that we want the cat program to take its input from hello.txt. What will happen in this case is that the shell will open hello.txt, take its contents and set those contents to be the input of cat, and then cat is just going to print those contents to its output, which, since we haven't rewired it, is just going to be the terminal.

And we can use both angle brackets at the same time if we so desire. For example, if we want to copy a file without using the cp command for some reason, then we can run the following:

cat < hello.txt > hello2.txt

In this case, we're telling the cat program to just do its normal thing--it knows nothing about the redirection. But we are telling the shell to use hello.txt as the input for cat and write anything that cat prints to hello2.txt.

It's important to be aware of >> instead of just >. The default behavior of > is to overwrite whereas >> is used to append content to a file.

These commands are pretty straightforward--they're usually just ways to interact with files, but where it gets really interesting is when you use another operator the shell gives you: the pipe character |. What "pipe" means is "take the output of the program to the left and make it the input to the program on the right." What does this look like?

Let's consider ls -l /. This prints, in long listing format, the contents of the root directory /. Let's say we only wanted the last line of the output. Well, there's a command called tail where tail prints the last n lines of its input. So we can do something like tail -n1 which tells us to print the last line. And we can wire the ls program and the tail program together by means of |:

ls -l / | tail -n1

lrwxr-xr-x@ 1 root wheel 11 Jan 15 2019 var -> private/var

Notice here that ls does not know about tail at all. And tail does not know about ls either. They're completely different programs and have never been programmed to be compatible with one another. All they know how to do is read from input and write to output. But the pipe | is what wires them together. In this particular case, what we're saying is that we want the output of ls -l / to be the input to tail -n1 and we want the output of tail -n1 to go to our terminal because we haven't rewired the output of tail -n1. We could, on the other hand, rewire the output of tail -n1 to go to something like ls.txt:

ls -l / | tail -n1 > ls.txt

This will result in

lrwxr-xr-x@ 1 root wheel 11 Jan 15 2019 var -> private/var

being written to ls.txt instead of the terminal window. It turns out you can do some really neat things with this, namely building robust pipelines to accomplish all sorts of work. To give you one example of something not all that practical but mildly interesting nonetheless:

curl --head --silent google.com | grep -i content-length | cut -d " " -f 2

219

The curl --head --silent google.com gives us all the HTTP headers for accessing google.com:

HTTP/1.1 301 Moved Permanently

Location: http://www.google.com/

Content-Type: text/html; charset=UTF-8

Date: Sat, 12 Dec 2020 01:54:15 GMT

Expires: Mon, 11 Jan 2021 01:54:15 GMT

Cache-Control: public, max-age=2592000

Server: gws

Content-Length: 219

X-XSS-Protection: 0

X-Frame-Options: SAMEORIGIN

The cool thing is that we are taking the output stream of curl --head --silent google.com, which usually goes to the Terminal window, and we are redirecting it to be the input stream to grep -i content-length, where this command uses a case-insensitive regular expression to give us Content-Length: 219. We can yet again pipe this to cut with cut -d " " -f 2 to give us the second field where we are using a space " " as the delimiter. The net result is 219.

This example is not the most useful of examples but you can already see by the composability how we could do some really cool things (maybe piping things to different functions, etc.). Furthermore, it turns out that pipes are not just for textual data. You can do this for things like images as well. You can have a program that manipulates a binary image on its input and writes a binary image to its output.

The root user

We need to discuss the notion of a "root user". The root user is sort of like the administrator user on Windows and has user ID 0. The root user is special because it is allowed to do whatever it wants on your system. Even if a file is not readable by anyone or not writable by anyone, etc. Root can still do all this stuff. Root is sort of a "super user" that can do whatever it wants. Most of the time you will not be operating as the super user--you will not be root but someone like danielfarlow or whatever your default username is. This is the user you will typically act with. This is because if you were operating your computer as the root user at all times then if you ran the wrong program by accident you could completely destroy your computer. This is to protect you in most cases. But sometimes you explicitly want to do something that you would otherwise often be protected against. Usually for these cases you would use a program called sudo or "do as su" where su in this case is "super user". So sudo effectively means "do the following as the super user". Usually, the way sudo works is you write sudo and then a command like you would normally appear on your terminal like sudo echo bob, and then the shell would run that command is if you were the root user instead of your normal user.

Where might you need something like this? There are many special file systems on your computer and often modifying them in any way requires you do so as the root user. On some systems, for example, we might be able to do something like the following:

$ cd /sys/class/backlight/intel_backlight

$ cat brightness

1060

You might think you could do something like echo 500 > brightness to change the brightness, but we get an error: bash: brightness: Permission denied. The fix seems simple: sudo echo 500 > brightness. But we get the same error. Why? This is because redirections of input and output do not know about each other. This is not something the programs themselves know about. When we piped ls into tail, tail did not know about ls and ls did not know about tail. The pipe and redirection was set up by the shell. So in this case what we are doing is telling the shell to run the echo program as the super user and to send the output to the brightness file. But the shell is what is opening the brightness file--it is not the sudo program. So in this case, the shell tries to open the brightness file for writing as the default user (i.e., not the root/super user). But it's not allowed to do that and therefore we get a permission denied error.

How do we get around this? We could switch into a "root terminal"--one way to do this is to run sudo su which effectively says, "Run the following command as root and su is a complicated command that effectively gets you a shell as the super user." Then everything you execute is done so as the super user. With most shell setups, the shown username will change from your default to root and the $ will change to #. If we run sudo su, drop into a root shell, and execute echo 500 > brightness, then we do not get any permission denied errors, but can we accomplish this same thing without resorting to dropping into the root shell? Yes. Here is one way:

echo 1060 | sudo tee brightness

How is this different? Here we are telling the shell to run the echo 1060 command and then to run the sudo tee brightness command, where the standard output of echo 1060 is redirected to be the standard input of sudo tee brightness. In order to understand this, we must know what the tee command does. The tee command takes its input and writes it to a file but also to standard output. So tee is a convenient way if, say, you have a log file and you want to send to a file to store for later but you also want to see it yourself. So you can pipe your content through tee to the name of a file but also see the redirected output show up as standard output as well (i.e., printed to the Terminal window). We are taking advantage of that program here: We are telling the shell to run tee as the super user with input 1060 coming from echo--this will effectively write 1060 to the brightness file as well as show 1060 as standard output.

All shells are roughly the same at their core

While they may vary in the details, at their core they are all roughly the same: they allow you to run programs, give them input, and inspect their output in a semi-structured way.

Standard input and output streams for programs are both the terminal

Normally, a program's input and output are both your terminal (i.e., your keyboard as input and your screen as output). You can gain a great deal of power by effectively rewiring these streams (typically in the form of using | to pipe the output stream of one command/program as the input stream of another command/program).

The simplest form of redirection is < file and > file, where < and > let you rewire the input and output streams of a program to a file, respectively.

Operations like |, >, and < are done by the shell, not the individual program

On a Linux system, sysfs exposes a number of kernel parameters as files (so you can easily reconfigure the kernel on the fly without specialized tools), and you need to be root when writing to the sysfs file system mounted under/sys.

For example, the brightness of your laptop's screen is exposed through a file called brightness under /sys/class/backlight. By writing a value into that file, we can change the screen brightness. Your first instinct might be to do something like:

$ sudo find -L /sys/class/backlight -maxdepth 2 -name '*brightness*'

/sys/class/backlight/thinkpad_screen/brightness

$ cd /sys/class/backlight/thinkpad_screen

$ sudo echo 3 > brightness

An error occurred while redirecting file 'brightness'

open: Permission denied

This error may come as a surprise. After all, we ran the command with sudo! This is an important thing to know about the shell. Operations like |, >, and < are done by the shell, not by the individual program (hence you cannot "run" something like |, <, or > with sudo--these symbols aren't programs; these are operations managed by the shell so your use of sudo should always apply to the program you really need to be using as a super user). echo and friends do not "know" about |. They just read from their input and write to their output, whatever it may be. In the case above, the shell (which is authenticated just as your user) tries to open the brightness file for writing, before setting that as sudo echo's output, but is prevented from doing so since the shell does not run as root. Using this knowledge, we can work around this:

echo 3 | sudo tee brightness

Since the tee program is the one to open the /sys file for writing, and it is running as root, the permissions all work out.

Exercises

1.1

- Question

- Answer

- Additional Details

For this course, you need to be using a Unix shell likebash or ZSH. If you are on Linux or macOS, you don't have to do anything special. If you are on Windows, you need to make sure you are not running cmd.exe or PowerShell; you can use Windows Subsystem for Linux or a Linux virtual machine to use Unix-style command-line tools. To make sure you're running an appropriate shell, you can try the command echo $SHELL. If it says something like /bin/bash or /usr/bin/zsh, that means you're running the right program.

echo $SHELL

# /usr/local/bin/fish

Daily using Mac OS X with a Unix-style shell in the totally awesome fish shell!

But running everything in this course using the upgradedbash shell in v5.1.4.

1.2

- Question

- Answer

- Additional Details

Create a new directory called missing under /tmp.

mkdir /tmp/missing

Now we can simply cd into this new directory since we will be working inside of it for a bit:

cd /tmp/missing

1.3

- Question

- Answer

- Additional Details

Look up the touch program. The man program is your friend.

man touch

The command above gives us the following (any stylings such as underlining and the like have been removed):

TOUCH(1) BSD General Commands Manual TOUCH(1)

NAME

touch -- change file access and modification times

SYNOPSIS

touch [-A [-][[hh]mm]SS] [-acfhm] [-r file] [-t [[CC]YY]MMDDhhmm[.SS]] file ...

DESCRIPTION

The touch utility sets the modification and access times of files. If any file does not exist, it is created with default

permissions.

By default, touch changes both modification and access times. The -a and -m flags may be used to select the access time or

the modification time individually. Selecting both is equivalent to the default. By default, the timestamps are set to the

current time. The -t flag explicitly specifies a different time, and the -r flag specifies to set the times those of the

specified file. The -A flag adjusts the values by a specified amount.

The following options are available:

-A Adjust the access and modification time stamps for the file by the specified value. This flag is intended for use

in modifying files with incorrectly set time stamps.

The argument is of the form ``[-][[hh]mm]SS'' where each pair of letters represents the following:

- Make the adjustment negative: the new time stamp is set to be before the old one.

hh The number of hours, from 00 to 99.

mm The number of minutes, from 00 to 59.

SS The number of seconds, from 00 to 59.

The -A flag implies the -c flag: if any file specified does not exist, it will be silently ignored.

-a Change the access time of the file. The modification time of the file is not changed unless the -m flag is also

specified.

-c Do not create the file if it does not exist. The touch utility does not treat this as an error. No error messages

are displayed and the exit value is not affected.

-f Attempt to force the update, even if the file permissions do not currently permit it.

-h If the file is a symbolic link, change the times of the link itself rather than the file that the link points to.

Note that -h implies -c and thus will not create any new files.

-m Change the modification time of the file. The access time of the file is not changed unless the -a flag is also

specified.

-r Use the access and modifications times from the specified file instead of the current time of day.

-t Change the access and modification times to the specified time instead of the current time of day. The argument is

of the form ``[[CC]YY]MMDDhhmm[.SS]'' where each pair of letters represents the following:

CC The first two digits of the year (the century).

YY The second two digits of the year. If ``YY'' is specified, but ``CC'' is not, a value for ``YY''

between 69 and 99 results in a ``CC'' value of 19. Otherwise, a ``CC'' value of 20 is used.

MM The month of the year, from 01 to 12.

DD the day of the month, from 01 to 31.

hh The hour of the day, from 00 to 23.

mm The minute of the hour, from 00 to 59.

SS The second of the minute, from 00 to 61.

If the ``CC'' and ``YY'' letter pairs are not specified, the values default to the current year. If the ``SS'' let-

ter pair is not specified, the value defaults to 0.

EXIT STATUS

The touch utility exits 0 on success, and >0 if an error occurs.

COMPATIBILITY

The obsolescent form of touch, where a time format is specified as the first argument, is supported. When no -r or -t

option is specified, there are at least two arguments, and the first argument is a string of digits either eight or ten

characters in length, the first argument is interpreted as a time specification of the form ``MMDDhhmm[YY]''.

The ``MM'', ``DD'', ``hh'' and ``mm'' letter pairs are treated as their counterparts specified to the -t option. If the

``YY'' letter pair is in the range 39 to 99, the year is set to 1939 to 1999, otherwise, the year is set in the 21st cen-

tury.

SEE ALSO

utimes(2)

STANDARDS

The touch utility is expected to be a superset of the IEEE Std 1003.2 (``POSIX.2'') specification.

HISTORY

A touch utility appeared in Version 7 AT&T UNIX.

BSD April 28, 1995 BSD

1.4

- Question

- Answer

- Additional Details

Use touch to create a new file called semester in missing.

touch semester

None

1.5

- Question

- Answer

- Additional Details

Write the following into that file, one line at a time:

#!/bin/sh

curl --head --silent https://missing.csail.mit.edu

The first line might be tricky to get working. It's helpful to know that

# starts a comment in Bash, and ! has a special meaning even within

double-quoted (") strings. Bash treats single-quoted strings (')

differently: they will do the trick in this case. See the Bash

quoting

manual page for more information.

echo '#!/bin/sh' > semester

echo 'curl --head --silent https://missing.csail.mit.edu' >> semester

The linked manual page's entries on the escape character, single quotes, and double quotes are all very helpful in general and thus reproduced below for ease of reference (even though the entry on single quotes is the only relevant one presently)

- Escape Character (how to remove the special meaning from a single character): A non-quoted backslash

\is the Bash escape character. It preserves the literal value of the next character that follows, with the exception ofnewline. If a\newlinepair appears, and the backslash itself is not quoted, the\newlineis treated as a line continuation (that is, it is removed from the input stream and effectively ignored). - Single Quotes (how to inhibit all interpretation of a sequence of characters): Enclosing characters in single quotes (

') preserves the literal value of each character within the quotes. A single quote may not occur between single quotes, even when preceded by a backslash. - Double Quotes (how to suppress most of the interpretation of a sequence of characters): Enclosing characters in double quotes (

") preserves the literal value of all characters within the quotes, with the exception of$,`,/, and, when history expansion is enabled,!. When the shell is in POSIX mode (see Bash POSIX Mode), the!has no special meaning within double quotes, even when history expansion is enabled. The characters$and`retain their special meaning within double quotes (see Shell Expansions). The backslash retains its special meaning only when followed by one of the following characters:$,`,",/, ornewline. Within double quotes, backslashes that are followed by one of these characters are removed. Backslashes preceding characters without a special meaning are left unmodified. A double quote may be quoted within double quotes by preceding it with a backslash. If enabled, history expansion will be performed unless an!appearing in double quotes is escaped using a backslash. The backslash preceding the!is not removed. The special parameters*and@have special meaning when in double quotes (see Shell Parameter Expansion).

The upshot of all of this in the context of the current exercise is that we should use single quotes as indicated in the provided answer:

echo '#!/bin/sh' > semester

echo 'curl --head --silent https://missing.csail.mit.edu' >> semester

Note: Use of > in the first line above would overwrite anything that might exist in the semester file while >> used in the second line appends to the semester file. It's not a bad idea to generally use >> to avoid accidentally overwriting things.

1.6

- Question

- Answer

- Additional Details

Try to execute the file; that is, type the path to the script (./semester)

into your shell and press enter. Understand why it doesn't work by

consulting the output of ls (hint: look at the permission bits of the

file).

./semester

# bash: ./semester: Permission denied

ls -l

# -rw-r--r-- 1 danielfarlow wheel 61 Apr 25 18:26 semester

The execution bit x is not set for the owner (only the read and write bits are set).

From the code sample above and the hint given in the exercise statement, it seems clear that the problem lies in not having x (i.e., ability to execute) for the "owner", "group", or "world" permission sets.

It is interesting to note that

./semesterrequires execution and readable bits (right now we only have the readable bit for the group and world permission sets and readable and writable for the owner set), butbash semesterorsh semesteronly requires the readable bit (which means we can runbash semesterorsh semesterjust fine since each accessor set has the readable bit set).

The short of it is that ./semester results in making your shell run the file as if it were a regular executable (this is obviously a problem if you do not have the execution bit x on the file set for the appropriate accessing group) while sh semester makes your shell run sh and passes semester as the command-line argument (semester is only read and interpreted by sh as a regular file and thus the execution bit x is not required).

A more detailed explanation behind the behavior described above can be found in this answer and this answer. The first linked answer is reproduced below:

For your specific script

#!/bin/bash

echo "Hello World!"either way will work, except that

./script.shrequires execution and readable bits, whilebash script.shonly requires readable bit.The reason of the permissions requirement difference lies in how the program that interprets your script is loaded:

./script.shmakes your shell run the file as if it was a regular executable.The shell forks itself and uses a system call (e.g.

execve) to make the operating system execute the file in the forked process. The operating system will check the file's permissions (hence the execution bit needs to be set) and forward the request to the program loader, which looks at the file and determines how to execute it. In Linux compiled executables start with an ELF magic number, while scripts start with a#!(hashbang). A hashbang header means that the file is a script and needs to be interpreted by the program that is specified after the hashbang. This allows a script itself to tell the system how to interpret the script.With your script, the program loader will execute

/bin/bashand pass./script.shas the command-line argument.

bash script.shmakes your shell runbashand passscript.shas the command-line argumentSo the operating system will load

bash(not even looking atscript.sh, because it's just a command-line argument). The createdbashprocess will then interpret thescript.shbecause it's passed as the command-line argument. Becausescript.shis only read bybashas a regular file, the execution bit is not required.I recommend using

./script.shthough, because you might not know which interpreter the script is requiring. So let the program loader determine that for you.

1.7

- Question

- Answer

- Additional Details

Run the command by explicitly starting the sh interpreter, and giving it

the file semester as the first argument (i.e. sh semester). Why does

this work, while ./semester didn't?

Running

sh semester

The command above results in the following output (at the time of running this command):

HTTP/2 200

server: GitHub.com

content-type: text/html; charset=utf-8

last-modified: Tue, 20 Apr 2021 14:15:31 GMT

access-control-allow-origin: *

etag: "607ee203-1f31"

expires: Mon, 26 Apr 2021 04:11:01 GMT

cache-control: max-age=600

x-proxy-cache: MISS

x-github-request-id: C174:0523:8C4CFF:E81E50:60863AFD

accept-ranges: bytes

date: Mon, 26 Apr 2021 04:01:01 GMT

via: 1.1 varnish

age: 0

x-served-by: cache-fty21340-FTY

x-cache: MISS

x-cache-hits: 0

x-timer: S1619409661.254991,VS0,VE22

vary: Accept-Encoding

x-fastly-request-id: a01a72609209bd71a49fbeac08956529a4e7ff14

content-length: 7985

The reason sh semester worked while ./semester did not is because with sh semester we only need the read bit for permissions while ./semester requires the execution bit. (This is described more thoroughly in the response for the previous exercise.)

None

1.8

- Question

- Answer

- Additional Details

Look up the chmod program (e.g., use man chmod).

man chmod

The command above results in the following (stylings such as underlining and the like have been removed):

CHMOD(1) BSD General Commands Manual CHMOD(1)

NAME

chmod -- change file modes or Access Control Lists

SYNOPSIS

chmod [-fv] [-R [-H | -L | -P]] mode file ...

chmod [-fv] [-R [-H | -L | -P]] [-a | +a | =a] ACE file ...

chmod [-fhv] [-R [-H | -L | -P]] [-E] file ...

chmod [-fhv] [-R [-H | -L | -P]] [-C] file ...

chmod [-fhv] [-R [-H | -L | -P]] [-N] file ...

DESCRIPTION

The chmod utility modifies the file mode bits of the listed files as

specified by the mode operand. It may also be used to modify the Access

Control Lists (ACLs) associated with the listed files.

The generic options are as follows:

-f Do not display a diagnostic message if chmod could not modify the

mode for file.

-H If the -R option is specified, symbolic links on the command line

are followed. (Symbolic links encountered in the tree traversal

are not followed by default.)

-h If the file is a symbolic link, change the mode of the link

itself rather than the file that the link points to.

-L If the -R option is specified, all symbolic links are followed.

-P If the -R option is specified, no symbolic links are followed.

This is the default.

-R Change the modes of the file hierarchies rooted in the files

instead of just the files themselves.

-v Cause chmod to be verbose, showing filenames as the mode is modi-

fied. If the -v flag is specified more than once, the old and

new modes of the file will also be printed, in both octal and

symbolic notation.

The -H, -L and -P options are ignored unless the -R option is specified.

In addition, these options override each other and the command's actions

are determined by the last one specified.

Only the owner of a file or the super-user is permitted to change the

mode of a file.

DIAGNOSTICS

The chmod utility exits 0 on success, and >0 if an error occurs.

MODES

Modes may be absolute or symbolic. An absolute mode is an octal number

constructed from the sum of one or more of the following values:

4000 (the set-user-ID-on-execution bit) Executable files with

this bit set will run with effective uid set to the uid of

the file owner. Directories with the set-user-id bit set

will force all files and sub-directories created in them to

be owned by the directory owner and not by the uid of the

creating process, if the underlying file system supports

this feature: see chmod(2) and the suiddir option to

mount(8).

2000 (the set-group-ID-on-execution bit) Executable files with

this bit set will run with effective gid set to the gid of

the file owner.

1000 (the sticky bit) See chmod(2) and sticky(8).

0400 Allow read by owner.

0200 Allow write by owner.

0100 For files, allow execution by owner. For directories,

allow the owner to search in the directory.

0040 Allow read by group members.

0020 Allow write by group members.

0010 For files, allow execution by group members. For directo-

ries, allow group members to search in the directory.

0004 Allow read by others.

0002 Allow write by others.

0001 For files, allow execution by others. For directories

allow others to search in the directory.

For example, the absolute mode that permits read, write and execute by

the owner, read and execute by group members, read and execute by others,

and no set-uid or set-gid behaviour is 755 (400+200+100+040+010+004+001).

The symbolic mode is described by the following grammar:

mode ::= clause [, clause ...]

clause ::= [who ...] [action ...] action

action ::= op [perm ...]

who ::= a | u | g | o

op ::= + | - | =

perm ::= r | s | t | w | x | X | u | g | o

The who symbols ``u'', ``g'', and ``o'' specify the user, group, and

other parts of the mode bits, respectively. The who symbol ``a'' is

equivalent to ``ugo''.

The perm symbols represent the portions of the mode bits as follows:

r The read bits.

s The set-user-ID-on-execution and set-group-ID-on-execution

bits.

t The sticky bit.

w The write bits.

x The execute/search bits.

X The execute/search bits if the file is a directory or any

of the execute/search bits are set in the original (unmodi-

fied) mode. Operations with the perm symbol ``X'' are only

meaningful in conjunction with the op symbol ``+'', and are

ignored in all other cases.

u The user permission bits in the original mode of the file.

g The group permission bits in the original mode of the file.

o The other permission bits in the original mode of the file.

The op symbols represent the operation performed, as follows:

+ If no value is supplied for perm, the ``+'' operation has no

effect. If no value is supplied for who, each permission bit spec-

ified in perm, for which the corresponding bit in the file mode

creation mask is clear, is set. Otherwise, the mode bits repre-

sented by the specified who and perm values are set.

- If no value is supplied for perm, the ``-'' operation has no

effect. If no value is supplied for who, each permission bit spec-

ified in perm, for which the corresponding bit in the file mode

creation mask is clear, is cleared. Otherwise, the mode bits rep-

resented by the specified who and perm values are cleared.

= The mode bits specified by the who value are cleared, or, if no who

value is specified, the owner, group and other mode bits are

cleared. Then, if no value is supplied for who, each permission

bit specified in perm, for which the corresponding bit in the file

mode creation mask is clear, is set. Otherwise, the mode bits rep-

resented by the specified who and perm values are set.

Each clause specifies one or more operations to be performed on the mode

bits, and each operation is applied to the mode bits in the order speci-

fied.

Operations upon the other permissions only (specified by the symbol ``o''

by itself), in combination with the perm symbols ``s'' or ``t'', are

ignored.

EXAMPLES OF VALID MODES

644 make a file readable by anyone and writable by the owner

only.

go-w deny write permission to group and others.

=rw,+X set the read and write permissions to the usual defaults,

but retain any execute permissions that are currently set.

+X make a directory or file searchable/executable by everyone

if it is already searchable/executable by anyone.

755

u=rwx,go=rx

u=rwx,go=u-w make a file readable/executable by everyone and writable by

the owner only.

go= clear all mode bits for group and others.

g=u-w set the group bits equal to the user bits, but clear the

group write bit.

ACL MANIPULATION OPTIONS

ACLs are manipulated using extensions to the symbolic mode grammar. Each

file has one ACL, containing an ordered list of entries. Each entry

refers to a user or group, and grants or denies a set of permissions. In

cases where a user and a group exist with the same name, the user/group

name can be prefixed with "user:" or "group:" in order to specify the

type of name.

If the user or group name contains spaces you can use ':' as the delim-

iter between name and permission.

The following permissions are applicable to all filesystem objects:

delete Delete the item. Deletion may be granted by either this

permission on an object or the delete_child right on the

containing directory.

readattr

Read an objects basic attributes. This is implicitly

granted if the object can be looked up and not explicitly

denied.

writeattr

Write an object's basic attributes.

readextattr

Read extended attributes.

writeextattr

Write extended attributes.

readsecurity

Read an object's extended security information (ACL).

writesecurity

Write an object's security information (ownership, mode,

ACL).

chown Change an object's ownership.

The following permissions are applicable to directories:

list List entries.

search Look up files by name.

add_file

Add a file.

add_subdirectory

Add a subdirectory.

delete_child

Delete a contained object. See the file delete permission

above.

The following permissions are applicable to non-directory filesystem

objects:

read Open for reading.

write Open for writing.

append Open for writing, but in a fashion that only allows writes

into areas of the file not previously written.

execute

Execute the file as a script or program.

ACL inheritance is controlled with the following permissions words, which

may only be applied to directories:

file_inherit

Inherit to files.

directory_inherit

Inherit to directories.

limit_inherit

This flag is only relevant to entries inherited by subdi-

rectories; it causes the directory_inherit flag to be

cleared in the entry that is inherited, preventing further

nested subdirectories from also inheriting the entry.

only_inherit

The entry is inherited by created items but not considered

when processing the ACL.

The ACL manipulation options are as follows:

+a The +a mode parses a new ACL entry from the next argument on the

commandline and inserts it into the canonical location in the

ACL. If the supplied entry refers to an identity already listed,

the two entries are combined.

Examples

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

# chmod +a "admin allow write" file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: admin allow write

# chmod +a "guest deny read" file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: admin allow write

# chmod +a "admin allow delete" file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: admin allow write,delete

# chmod +a "User 1:allow:read" file

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: User 1 allow read

3: admin allow write,delete

The +a mode strives to maintain correct canonical form for the

ACL.

local deny

local allow

inherited deny

inherited allow

By default, chmod adds entries to the top of the local deny and

local allow lists. Inherited entries are added by using the +ai

mode.

Examples

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: admin allow write,delete

3: juser inherited deny delete

4: admin inherited allow delete

5: backup inherited deny read

6: admin inherited allow write-security

# chmod +ai "others allow read" file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: admin allow write,delete

3: juser inherited deny delete

4: others inherited allow read

5: admin inherited allow delete

6: backup inherited deny read

7: admin inherited allow write-security

+a# When a specific ordering is required, the exact location at which

an entry will be inserted is specified with the +a# mode.

Examples

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: admin allow write

# chmod +a# 2 "others deny read" file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: others deny read

3: admin allow write

The +ai# mode may be used to insert inherited entries at a spe-

cific location. Note that these modes allow non-canonical ACL

ordering to be constructed.

-a The -a mode is used to delete ACL entries. All entries exactly

matching the supplied entry will be deleted. If the entry lists a

subset of rights granted by an entry, only the rights listed are

removed. Entries may also be deleted by index using the -a# mode.

Examples

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: guest deny read

2: admin allow write,delete

# chmod -a# 1 file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: admin allow write,delete

# chmod -a "admin allow write" file1

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: admin allow delete

Inheritance is not considered when processing the -a mode; rights

and entries will be removed regardless of their inherited state.

If the user or group name contains spaces you can use ':' as the

delimiter

Example

# chmod +a "User 1:allow:read" file

=a# Individual entries are rewritten using the =a# mode.

Examples

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: admin allow delete

# chmod =a# 1 "admin allow write,chown"

# ls -le

-rw-r--r--+ 1 juser wheel 0 Apr 28 14:06 file1

owner: juser

1: admin allow write,chown

This mode may not be used to add new entries.

-E Reads the ACL information from stdin, as a sequential list of

ACEs, separated by newlines. If the information parses cor-

rectly, the existing information is replaced.

-C Returns false if any of the named files have ACLs in non-canoni-

cal order.

-i Removes the 'inherited' bit from all entries in the named file(s)

ACLs.

-I Removes all inherited entries from the named file(s) ACL(s).

-N Removes the ACL from the named file(s).

COMPATIBILITY

The -v option is non-standard and its use in scripts is not recommended.

SEE ALSO

chflags(1), fsaclctl(1), install(1), chmod(2), stat(2), umask(2), fts(3),

setmode(3), symlink(7), chown(8), mount(8), sticky(8)

STANDARDS

The chmod utility is expected to be IEEE Std 1003.2 (``POSIX.2'') compat-

ible with the exception of the perm symbol ``t'' which is not included in

that standard.

HISTORY

A chmod command appeared in Version 1 AT&T UNIX.

BSD July 08, 2004 BSD

1.9

- Question

- Answer

- Additional Details

Use chmod to make it possible to run the command ./semester rather than

having to type sh semester. How does your shell know that the file is

supposed to be interpreted using sh? See this page on the

shebang line for more

information.

ls -l

# -rw-r--r-- 1 danielfarlow wheel 61 Apr 25 18:26 semester

chmod u+x semester

ls -l

# -rwxr--r-- 1 danielfarlow wheel 61 Apr 25 18:26 semester

The hashbang link tells us (in the second paragraph) why the semester file with its contents as

#!/bin/sh

curl --head --silent https://missing.csail.mit.edu

is supposed to be interpreted using sh:

When a text file with a shebang is used as if it is an executable in a Unix-like operating system, the program loader mechanism parses the rest of the file's initial line as an interpreter directive. The loader executes the specified interpreter program, passing to it as an argument the path that was initially used when attempting to run the script, so that the program may use the file as input data. For example, if a script is named with the path

path/to/script, and it starts with the following line,#!/bin/sh, then the program loader is instructed to run the program/bin/sh, passingpath/to/scriptas the first argument.

Hence, we have the following if we use our exercise as the context for the last sentence reproduced above: If a script is named with the path ./semester, and it starts with #!/bin/sh (which it does), then the program loader is instructed to run the program /bin/sh (i.e., the Bourne shell or sh instead of the Bourne-Again shell or bash), passing ./semester as the first argument.

Running chmod u+x semester allows us to execute the semester file by running ./semester from the command line, whereupon the program loader recognizes from #!/bin/sh that the Bourse shell (sh) is to be used in interpreting the contents of semester.

Relevant/useful portions from the linked articles in the reproduced section from the article on the hashbang above (i.e., Unix-like, program loader, interpreter directive, and interpreter) are provided below:

Unix-like operating systems

A Unix-like (sometimes referred to as UNX or nix) operating system is one that behaves in a manner similar to a Unix system, while not necessarily conforming to or being certified to any version of the Single UNIX Specification. A Unix-like application is one that behaves like the corresponding Unix command or shell. There is no standard for defining the term, and some difference of opinion is possible as to the degree to which a given operating system or application is "Unix-like".

[...]

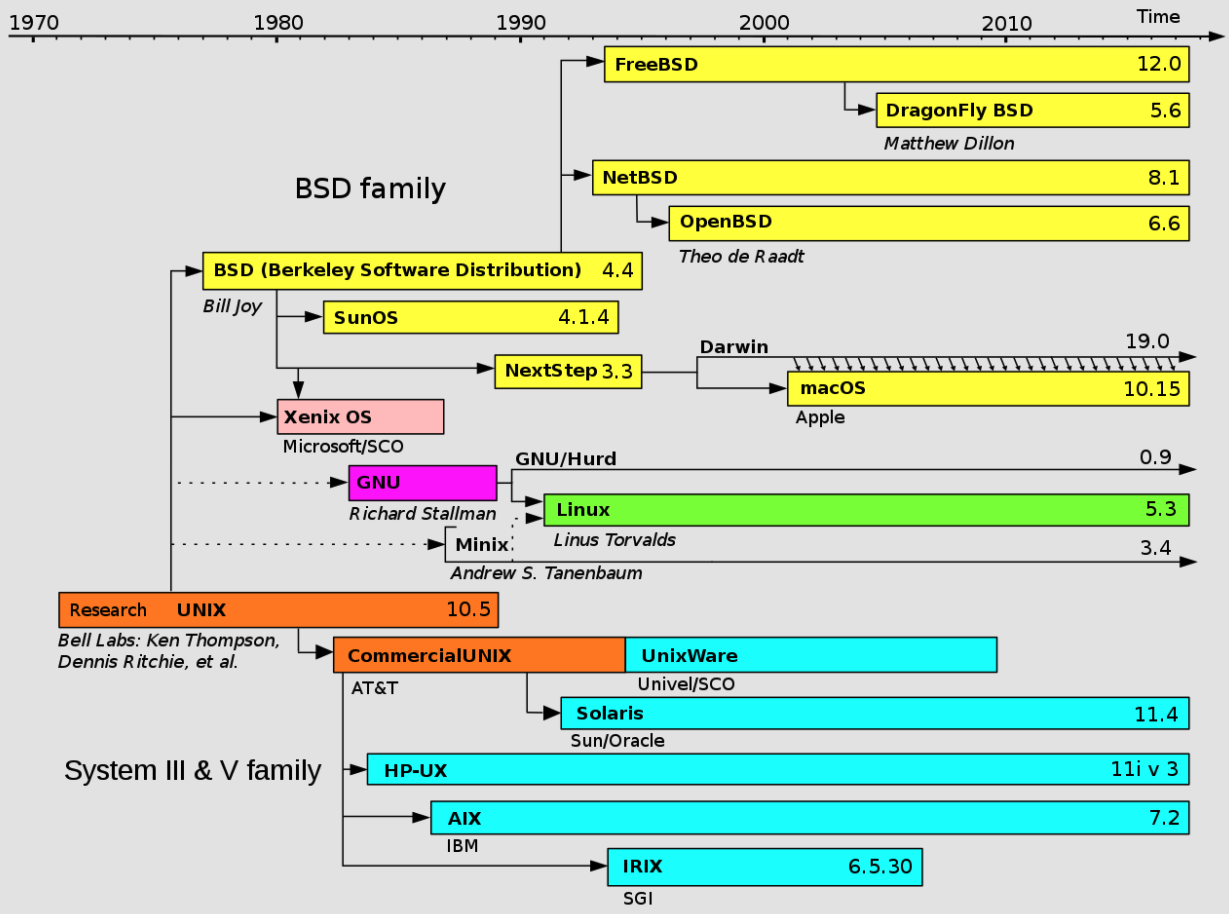

Various free, low-cost, and unrestricted substitutes for UNIX emerged in the 1980s and 1990s, including 4.4BSD, Linux, and Minix. Some of these have in turn been the basis for commercial "Unix-like" systems, such as BSD/OS and macOS. Several versions of (Mac) OS X/macOS running on Intel-based Mac computers have been certified under the Single UNIX Specification. The BSD variants are descendants of UNIX developed by the University of California at Berkeley with UNIX source code from Bell Labs. However, the BSD code base has evolved since then, replacing all of the AT&T code. Since the BSD variants are not certified as compliant with the Single UNIX Specification, they are referred to as "UNIX-like" rather than "UNIX".

Simplified history of Unix-like operating systems:

As can be seen from above, macOS appears on the scene shortly after 2000 in implementing a Unix-like OS.

Program loader

In computer systems a loader is the part of an operating system that is responsible for loading programs and libraries. It is one of the essential stages in the process of starting a program, as it places programs into memory and prepares them for execution. Loading a program involves reading the contents of the executable file containing the program instructions into memory, and then carrying out other required preparatory tasks to prepare the executable for running. Once loading is complete, the operating system starts the program by passing control to the loaded program code.

[...]

In Unix, the loader is the handler for the system call execve(). The Unix loader's tasks include:

- validation (permissions, memory requirements etc.);

- copying the program image from the disk into main memory;

- copying the command-line arguments on the stack;

- initializing registers (e.g., the stack pointer);

- jumping to the program entry point (

_start).

Interpreter directive

In Unix, Linux and other Unix-like operating systems (e.g., macOS), the first two bytes in a file can be the characters # and !, which constitute a magic number (hexadecimal 23 and 21, the ASCII values of # and !, respectively) often referred to as shebang, prefix the first line in a script, with the remainder of the line being a command usually limited to a max of 14 (when introduced) up to usually about 80 characters in 2016.

If the file system permissions on the script (a file) include an execute permission bit for the user invoking it by its filename (often found through the command search path), it is used to tell the operating system what interpreter (usually a program that implements a scripting language) to use to execute the script's contents, which may be batch commands or might be intended for interactive use. An example would be #!/bin/bash, meaning run this script with the bash shell found in the /bin directory.

Interpreter

In computer science, an interpreter is a computer program that directly executes instructions written in a programming or scripting language, without requiring them previously to have been compiled into a machine language program.

Notes on chmod: As noted in [1], chmod recognizes numeric format as well as symbolic format, but symbolic format will likely be your best bet in making changes easily. The file permissions as denoted by -rw-r--r-- have 644 as its numeric format:

Since we want the x bit set for the owner, we could issue the command chmod 744 semester to update the semester file accordingly. Or we could go the easier-to-remember symbolic route: chmod u+x semester. This effectively adds (+) the execution bit (x) for the user (u) while retaining all other permissions--this symbolic reasoning is explained in more detail below.

Observations worth noting when using chmod symbolically instead of numerically: You can set a file's permissions using a string of letters (like r for read permission) and symbols (like = to set permissions), where the so-called permission string has three parts:

- Whose permission?

ufor usergfor groupofor other users not in the groupafor all users. The default isa.

- Add, remove, or set?

+to add permissions-to remove permissions=to set absolute permissions, overwriting existing ones

- Which permissions?

rfor readwfor write/modifyxfor execute (for directories, this is permission tocdinto the directory)

You can use the shorthand u to duplicate the owner permissions, g to duplicate the group permissions, or o to duplicate the (world) permissions. You can also combine permission strings by separating them with commas, such as ug+rw,a-x (this will add read and write permissions for the user and group while also removing the execution permission for all users).

Here are some minor examples:

# add read and write permissions for the user and the group

chmod ug+rw myfile

# remove execute permissions for all users (they're equivalent)

chmod a-x myfile

chmod -x myfile

# create entirely new permissions (deleting the old ones) and

# make a file readable only by its owner

chmod u=r myfile

# add read and write permissions to user and group

# and remove execution permission for everyone

chmod ug+rw,a-x myfile

1.10

- Question

- Answer

- Additional Details

Use | and > to write the "last modified" date output by semester into a file called last-modified.txt in your home directory.

./semester | grep last-modified | cut -d " " -f 2- > $HOME/last-modified.txt

See this link for some helpful examples concerning the cut program.

1.11

- Question

- Answer

- Additional Details

Write a command that reads out your laptop battery's power level or your desktop machine's CPU temperature from /sys. Note: if you're a macOS user, your OS doesn't have sysfs, so you can skip this exercise.

Not relevant since I'm a macOS user, but here's one response from another user:

cat /sys/class/power_supply/BAT0/capacity

None

2 - Shell tools and scripting

Shell scripting vs. scripting in another programming language

Most shells have their own scripting language with variables, control flow, and their own programming syntax. What makes shell scripting different from other scripting programming languages is that shell scripting is optimized for performing shell-related tasks (i.e., as opposed to importing file system modules and things like that). Thus, creating command pipelines, saving results into files, and reading from standard input are primitives in shell scripting, which makes it easier to use than general purpose scripting languages.

Everything we will cover is centric to the bash shell, but many of the concepts (and features) extend to other Unix-like shells such as fish, zsh, etc.

Defining and assigning variables and observing the differences between single and double quotes

Suppose you drop into a shell and you want to define a variable. This is typically one of the first things you learn how to do when learning a new programming language (i.e., you learn how to declare variables). With bash this is pretty simple:

foo=bar

echo $foo

bar

One quirk you have to be aware of is that spaces are very imporant when dealing with bash (mainly because whitespace is typically reserved for splitting or separating arguments provided to different programs, functions, etc.):

foo = bar

bash: foo: command not found

Why did we get an error? The reason for this is because we are not actually assigning foo to be bar in the above code snippet. What we are actually doing is instructing bash to execute the program foo with first argument = and second argument bar.

Another thing to be aware of is how bash treats strings when you use single or double quotes. When using literal strings, single and double quotes are treated the same. For example:

echo "Hello"

# prints Hello

echo 'Hello'

# prints Hello

As can be seen above, it does not matter whether or not we use single or double quotes when dealing with literal strings. But that is basically the only time it doesn't matter! Otherwise, as noted here, enclosing characters in single quotes preserves the literal value of each character within the quotes while enclosing characters within double quotes preserves the literal value of all characters within the quotes with the exception of $, `, /, and ! when history expansion is enabled.

Simply put, strings in bash can be defined with ' and " delimiters, but they are not equivalent--strings delimited with ' are literal strings and will not substitute variable values whereas " delimited strings will.

The following small example illustrates these important differences:

foo=bar

echo "Value is $foo"

# Value is bar

echo 'Value is $foo'

# Value is $foo

As can be seen above, there is variable replacement when using double quote strings in bash ($foo is basically expanded to be its variable assignment of bar) but not when using single quotes.

Going beyond piping and into functions

Previously, we discussed how piping can be used in bash with the | character. But sometimes you want to create a function with a series of discrete, sequential steps, and sometimes what you really want is not so much to be piping things around but to have a dedicated function assigned to your chosen task(s). As with most programming languages, bash supports control flow techniques including if, case, while and for. Similarly, bash has functions that take arguments and can operate with them. For example, the following function lets you create a directory and then change into it (a pretty common navigation pattern):

# make a directory and then cd into it

mcd () {

mkdir -p "$1"

cd "$1"

}

Hence, instead of doing something like

mkdir myFolder

cd myFolder

we can simply use mcd, as defined above, to accomplish the same task:

mcd myFolder

In the definition of mcd, $1 is the first argument to the script/function. Unlike other scripting languages, bash uses a variety of special variables to refer to arguments, error codes, and other relevant variables. Below is a list of some of them:

| Variable | Description |

|---|---|

$0 | Name of the script |

$1 to $9 | Arguments to the script. $1 is the first argument and so on. |

$@ | All the arguments |

$# | Number of arguments |

$? | Return code of the previous command |

$$ | Process identification number (PID) for the current script |

!! | Entire last command, including arguments. A common pattern is to execute a command only for it to fail due to missing permissions; you can quickly re-execute the command with sudo by doing sudo !! |

$_ | Last argument from the last command. If you are in an interactive shell, you can also quickly get this value by typing Esc followed by . |

A more comprehensive list of these kinds of variables can be found in the advanced bash-scripting guide.

To recap, implicit in the description of mcd function above was the use of $1 as the "first" argument. As [1] notes, arguments are assigned to the positional parameters $1, $2, etc. If the first argument is a script, commands are read from it, and the remaining arguments are assigned to $1, $2, and so on. The name of the script is available as $0. The script file itself need not be executable, but it must be readable.

Exit status codes (the details)

Previously we've seen operators like <, >, and | to redirect standard input or standard output (i.e., stdin or stdout, respectively). We can also interact with the standard error or stderr (e.g., maybe you want to log errors when running programs and do not want to pollute the standard output). There's also the error code or exit status in general.