JSNSD Course Notes

- 1 - Introduction

- 2 - Setting up

- 3 - Creating a web server

- 4 - Serving Web Content

- 5 - Creating RESTful JSON Services

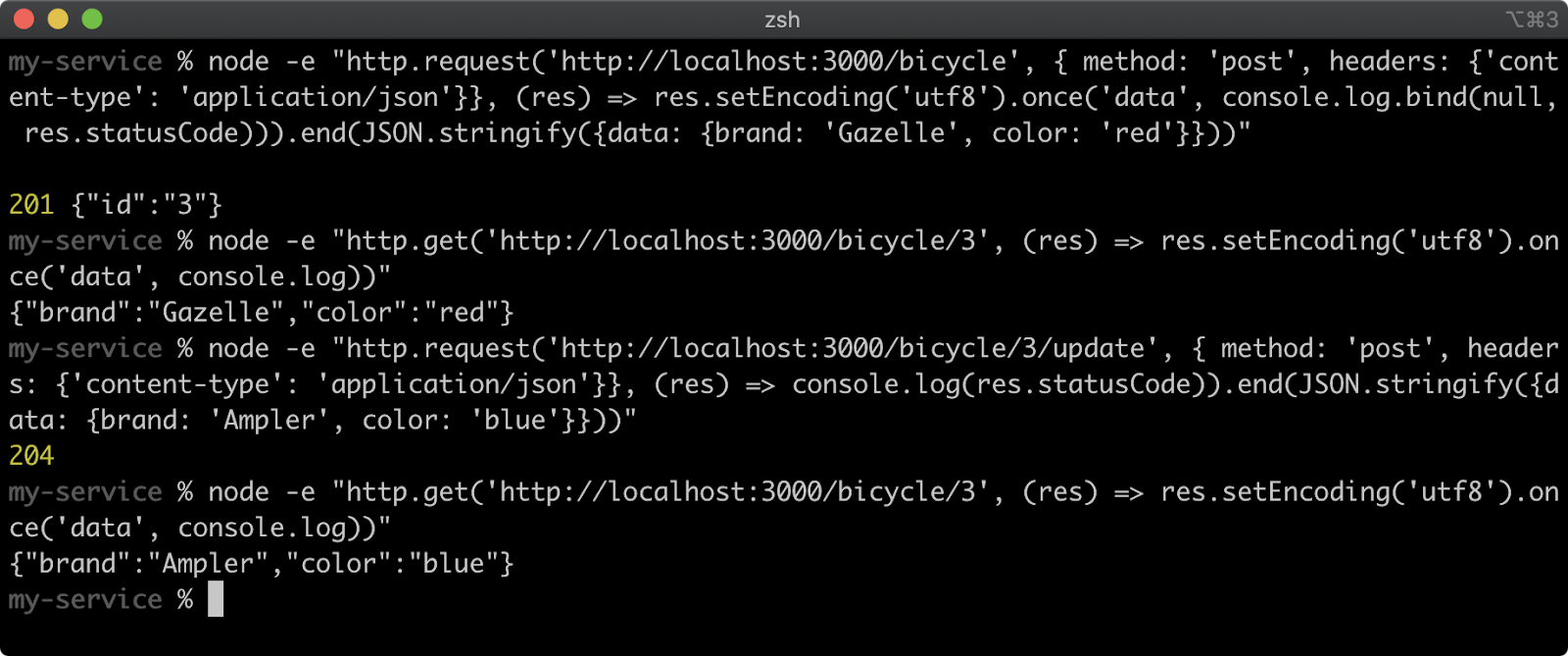

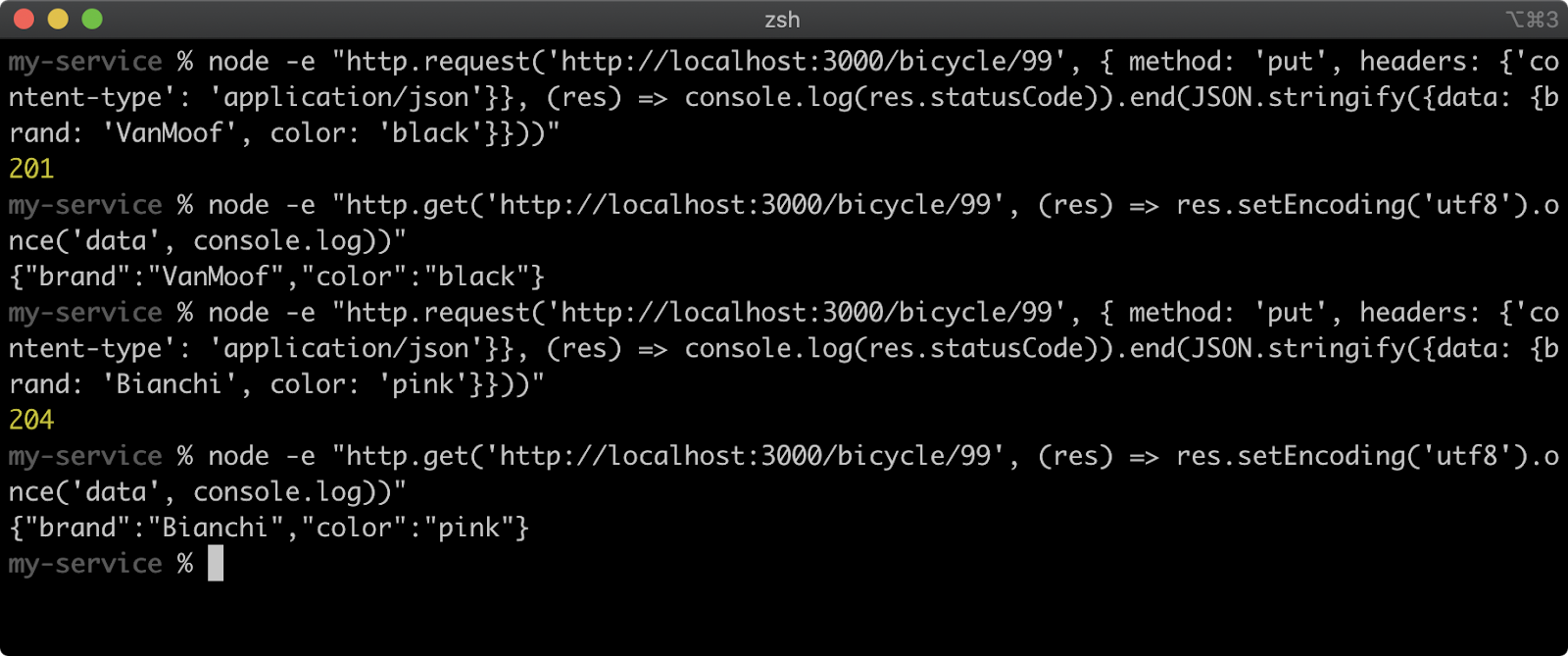

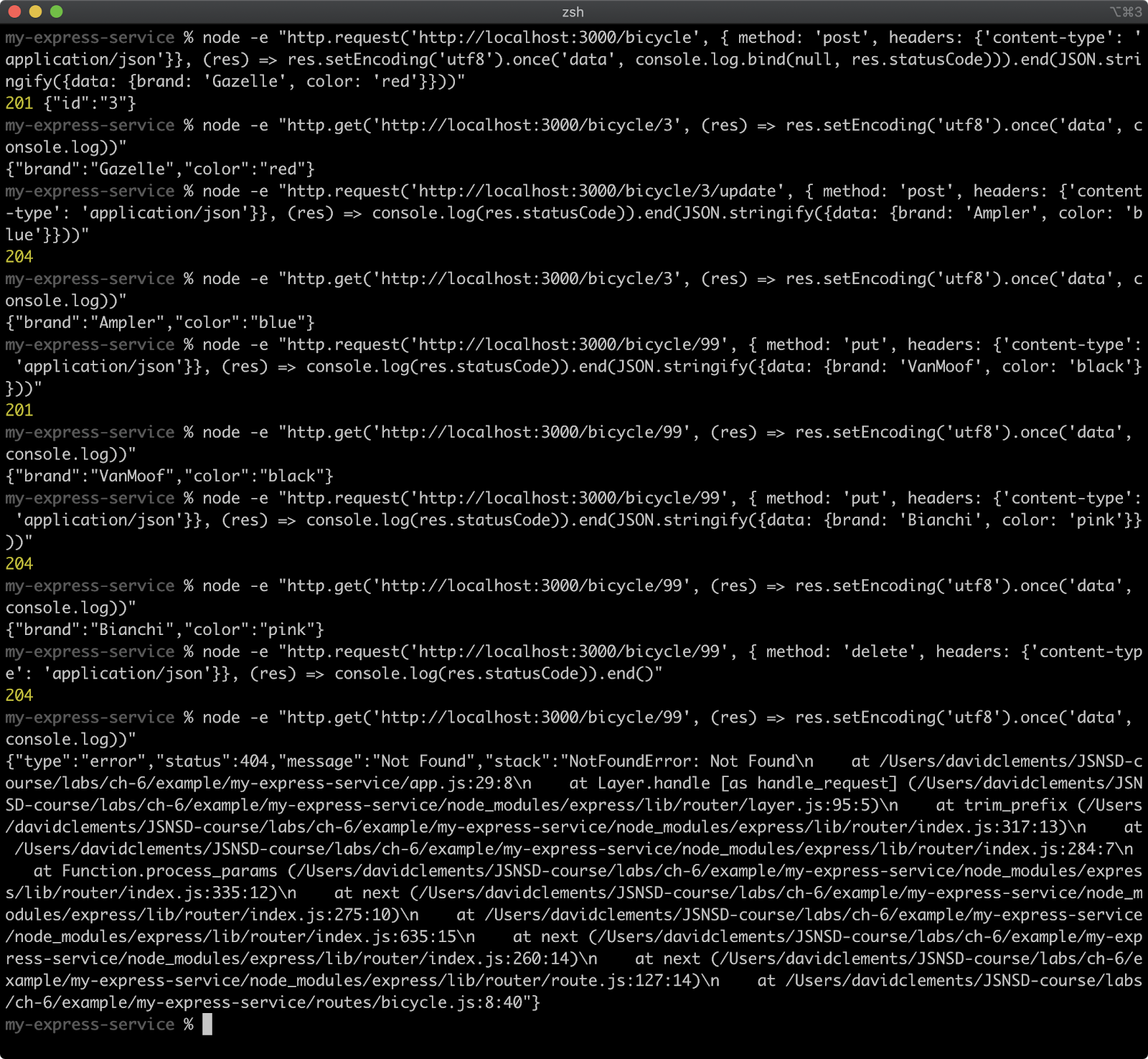

- 6 - Manipulating Data with RESTful Services

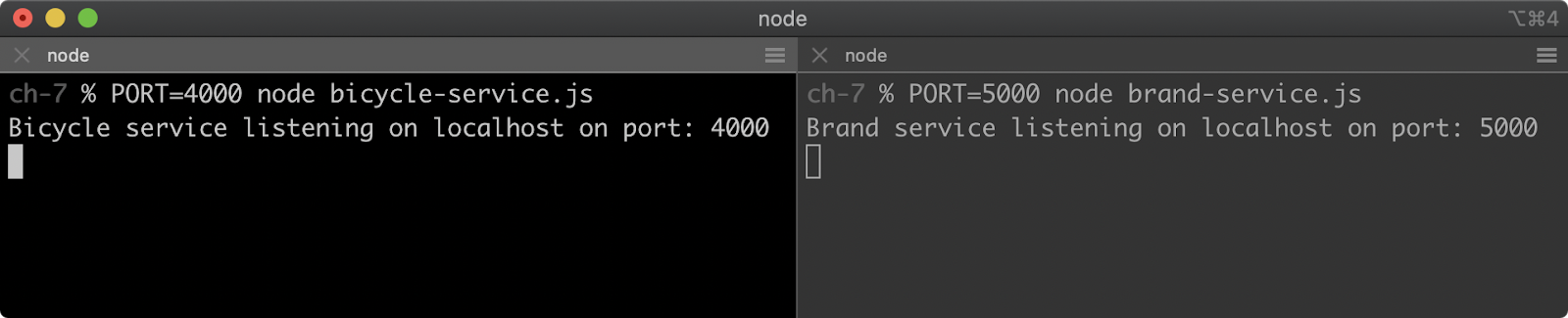

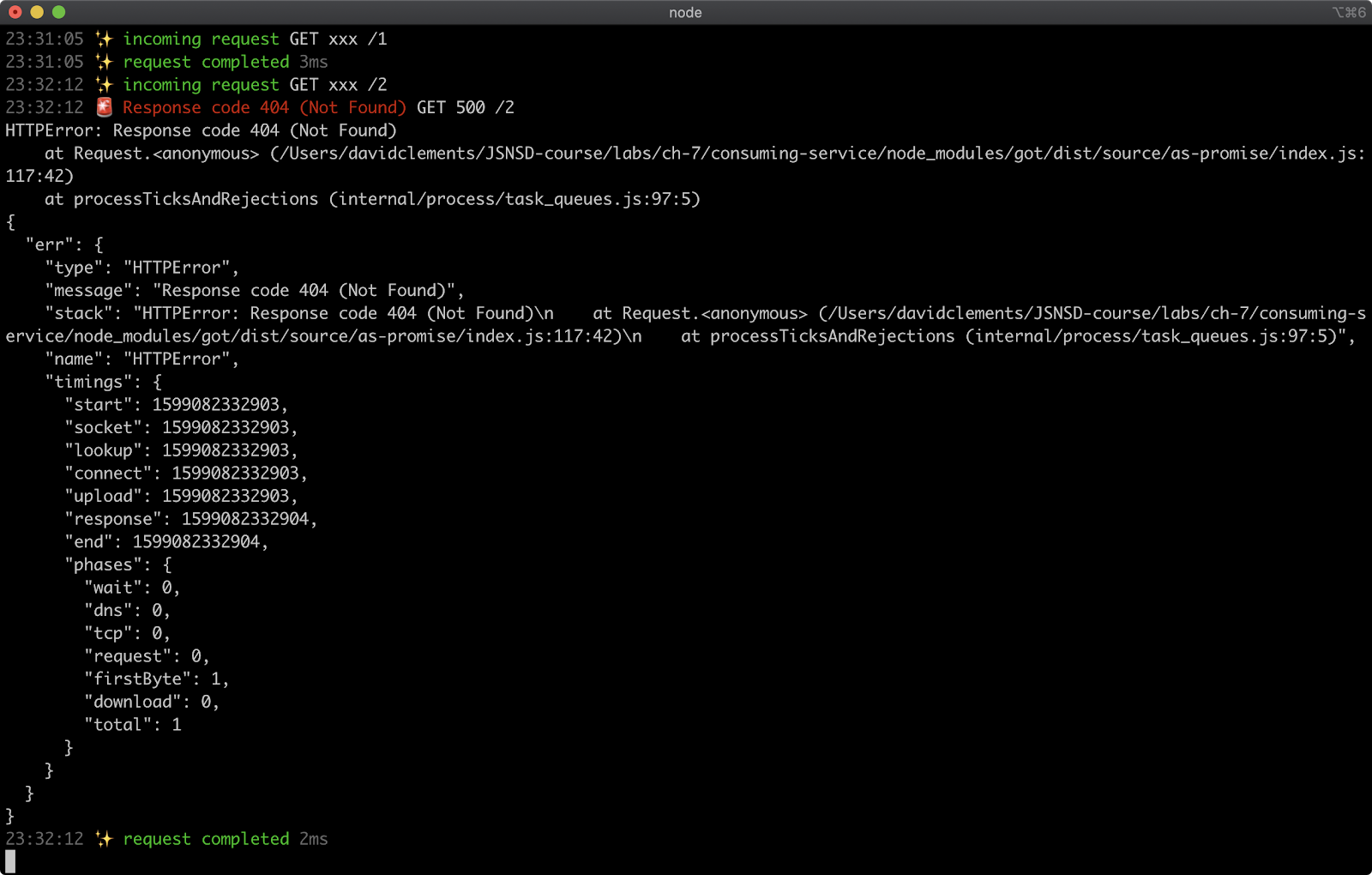

- 7 - Consuming and Aggregating Services

- 8 - Proxying HTTP Requests

- 9 - Web Security: Handling User Input

- 10 - Web Security: Mitigating Attacks

- 11 - Course Completion

- Quizzes

1 - Introduction

- Resources for this course can be found online. Because updating the course takes time, any changes made between updates are published online along with the course resources: go to the Linux Foundation training website to obtain the Course Resources. The user ID is LFtraining and the password is Penguin2014.

- One great way to interact with peers taking this course is via the Class Forum. The forum can be used in the following ways:

- To introduce yourself to other peers taking this course.

- To discuss concepts, tools and technologies presented in this course, or related to the topics discussed in the course materials.

- To ask questions or report issues with labs or course content.

- To share resources and ideas related to Node.js.

2 - Setting up

How not to install Node

Often Node.js can be installed with a particular operating system's official or unofficial package manager, for instance apt-get on Debian/Ubuntu, Homebrew on macOS, or Chocolatey on Windows. We strongly recommend against using this approach to install Node. Package managers tend to lag behind the faster Node.js release cycle. Additionally, the placement of binary and config files and folders isn't standardized across OS package managers, which can cause compatibility issues.

Another significant issue with installing Node.js via an OS package manager is that installing global modules with Node's module installer (npm) tends to require the use of sudo (a command which grants root privileges) on non-Windows systems. This is not an ideal setup for a developer machine, and granting root privileges to the install process of third-party libraries is not a good security practice.

Node can also be installed directly from the Node.js website. Again, on macOS and Linux this necessitates the use of sudo for installing global libraries. Whether on Windows, macOS or Linux, in the following sections we'll present a better way to install Node: using a version manager.

If Node was installed via an operating system package manager or directly from the website, we strongly recommend uninstalling it completely before proceeding to the following sections.

Installing Node.js on macOS and Linux

The recommended way to install Node.js on macOS and Linux is with a Node version manager, in particular nvm. See https://github.com/nvm-sh/nvm for full details. The current nvm version is v0.39.1 (as of January 2022), so the install process contains this version in the URL; if a newer version is out at the time of reading, replace v0.39.1 with the current nvm version. For this installation process we assume that Bash, Sh or Zsh is the shell being used. Fish is not supported, but see the nvm readme for alternatives. The way to install nvm is via the install script at https://github.com/nvm-sh/nvm/blob/v0.39.1/install.sh. If curl is installed (it usually is), a single command can be used to install and set up nvm:

```sh
curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.1/install.sh | bash
```

If using zsh (e.g., on newer macOS releases), the bash part of the command can be replaced with zsh. Alternatively, the file can be downloaded and saved, and then executed like so: cat install.sh | bash. Again, bash can be replaced with zsh.

To check that the installation was successful, execute the following in the terminal: command -v nvm. It should output nvm. If this fails on Linux, close and reopen the terminal (or SSH session) and try running the command again. On macOS, see https://github.com/nvm-sh/nvm#troubleshooting-on-macos for in-depth troubleshooting.

Now that we have a version manager, let's install the Node version we'll be using in this course: nvm install 16. This installs the latest version of Node 16. We can verify that Node is installed, and which version, with the following command: node -v.
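If a script needs to confirm which Node version it is running on (for example, to warn when it isn't Node 16), the same version that node -v prints is available as process.version, and without the leading v as process.versions.node. The isNodeMajor helper below is a hypothetical name used only for illustration; it is not a Node API.

```javascript
'use strict'

// Hypothetical helper (not a Node API): true when a version string such as
// '16.13.2' has the expected major version number.
function isNodeMajor (version, expectedMajor) {
  const major = Number(version.split('.')[0])
  return major === expectedMajor
}

// process.versions.node is the running version without the leading 'v'
if (!isNodeMajor(process.versions.node, 16)) {
  console.warn(`This course assumes Node 16, but ${process.version} is running`)
}

module.exports = { isNodeMajor }
```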

3 - Creating a web server

Introduction

Chapter Overview

The focus of this course and the JSNSD examination is on RESTful/HTTP services, since they are key knowledge for almost every contemporary scenario involving Node.js and services. There is a great deal of overlap between an HTTP service and a web server: in order to make an HTTP-based service, the first step is to create an HTTP server. In this chapter we'll explore different approaches to creating a web server and take a quick tour of selected web frameworks while we're at it. This chapter, and indeed the entire course, assumes prior knowledge of Node.js, including the module system, streams and Node CLI flags. See the Node.js Application Development (LFW211) course for instruction in these fundamentals.

Learning Objectives

By the end of this chapter, you should be able to:

- Create a minimal web server with Node core APIs.

- Create a minimal web server with the Express framework.

- Create a minimal web server with the Fastify framework.

Creating a Web Server

Creating a Web Server with Node Core (1)

Generally speaking, attempting to create a web server or service with just the Node core http (or https) module is not recommended. However, for learning purposes we'll put together a basic web server using the core http module in order to better understand the value that a web framework can bring and how a web framework is actually operating under the hood.

Let's define what we expect from a minimum viable web server:

- Responds to HTTP requests based on a given HTTP verb (for instance GET).

- Responds to requests based on a given route.

- Responds with 404 HTTP Status code if a route isn't found.

- Sets appropriate headers, such as Content-Type.

To meet these criteria we're going to take an iterative approach and build in layers.

Before getting started, be aware that we'll be stopping and starting servers a lot throughout this chapter and the entire course. Make sure that any previous processes from prior sections have been terminated, especially when attempting the exercises.

To get started, we can create a folder called http-web-server with the following commands:

```sh
node -e "fs.mkdirSync('http-web-server')"
cd http-web-server
```

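Note: throughout this course, node with the -e (evaluate) flag will be used for cross-platform/cross-shell administrative commands (like creating folders). Core modules such as fs can be used in an -e script without calling require, because Node makes the modules predefined in the REPL available in evaluated scripts too.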

Now, let's create a file called server.js with the following initial code:

```javascript
'use strict'
const http = require('http')
const PORT = process.env.PORT || 3000

const hello = `<html>
  <head>
    <style>
     body { background: #333; margin: 1.25rem }
     h1 { color: #EEE; font-family: sans-serif }
    </style>
  </head>
  <body>
    <h1>Hello World</h1>
  </body>
</html>`

const server = http.createServer((req, res) => {
  res.setHeader('Content-Type', 'text/html')
  res.end(hello)
})

server.listen(PORT)
```

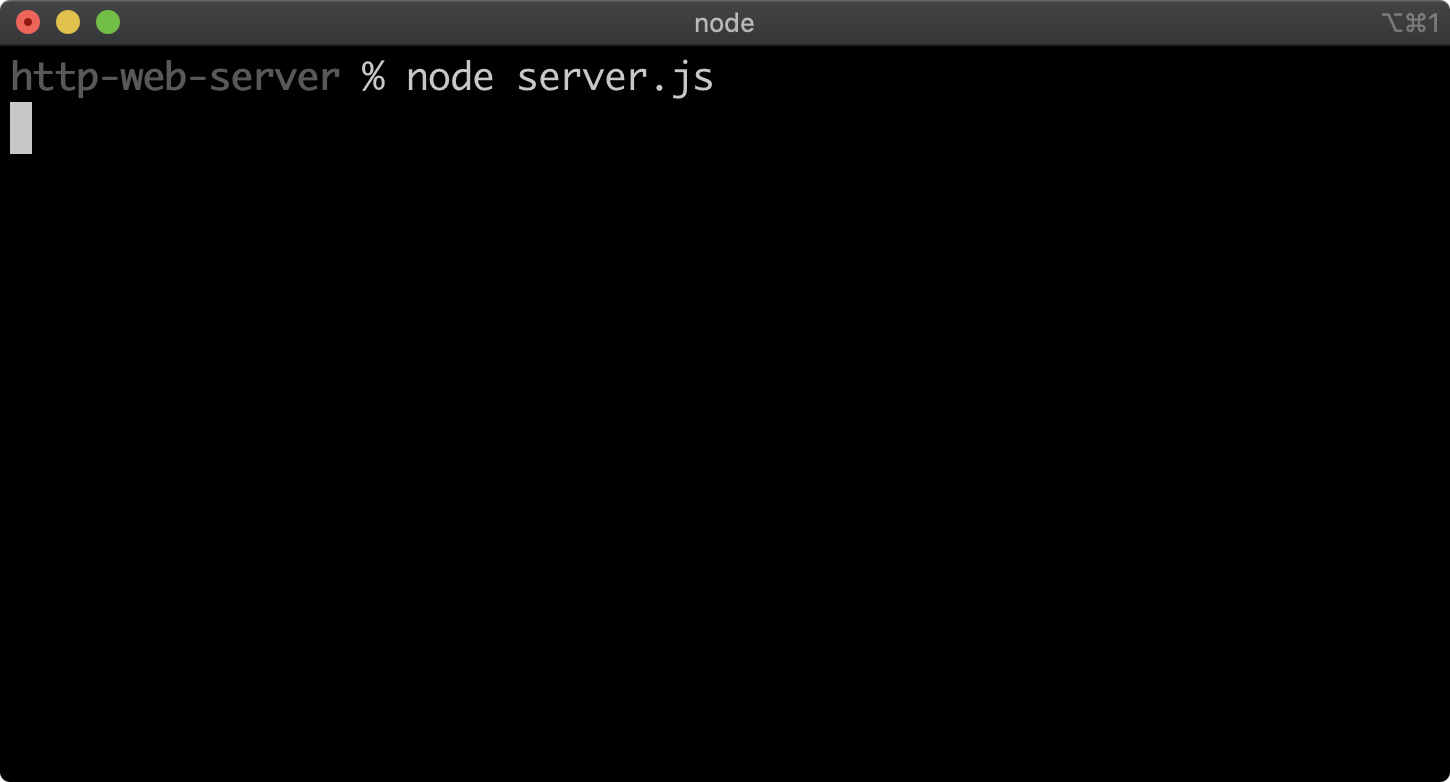

This code can be executed with the following command:

```sh
node server.js
```

If we run this code the process will not exit by itself:

This is because the created server is keeping the process open.

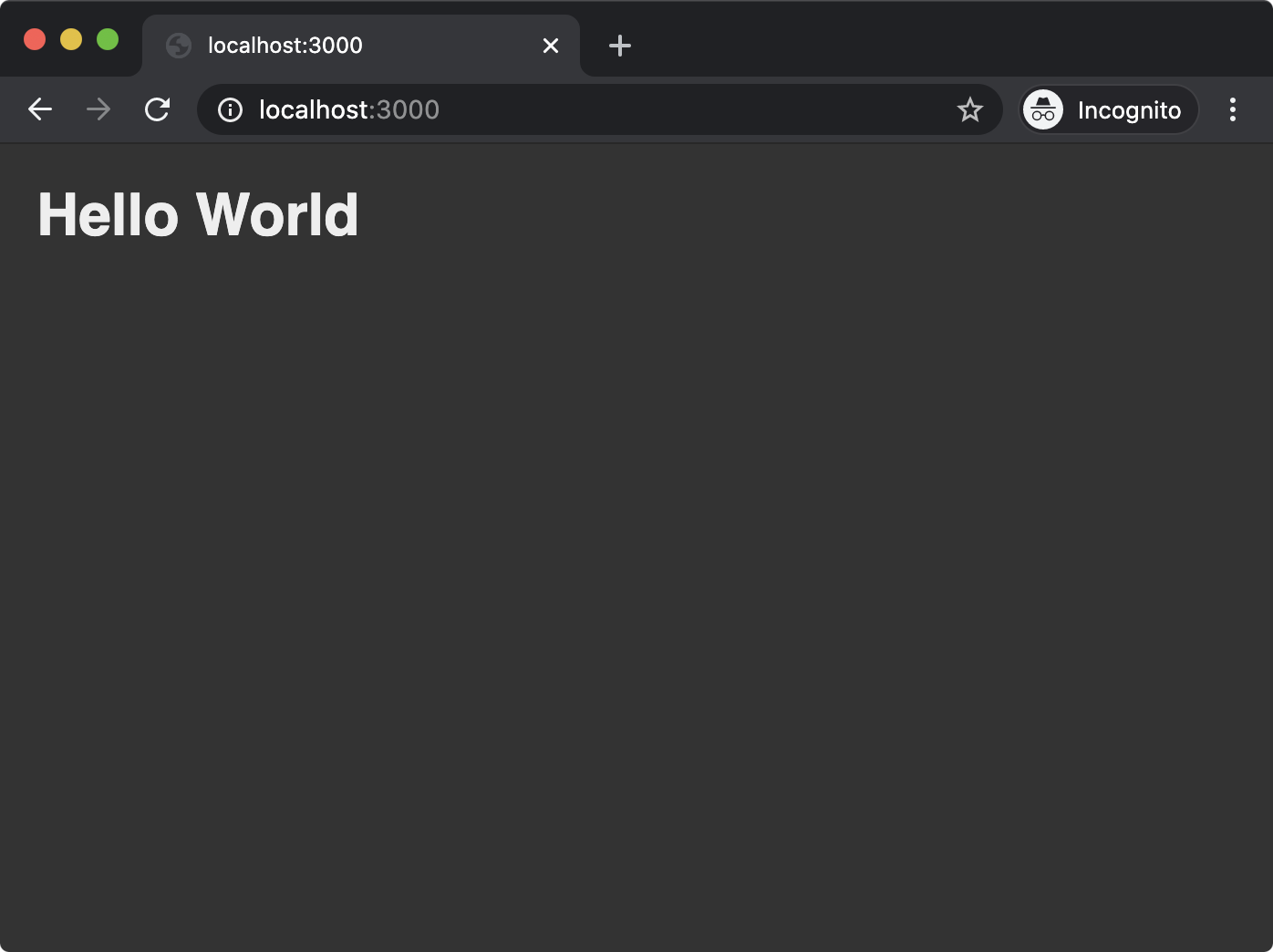

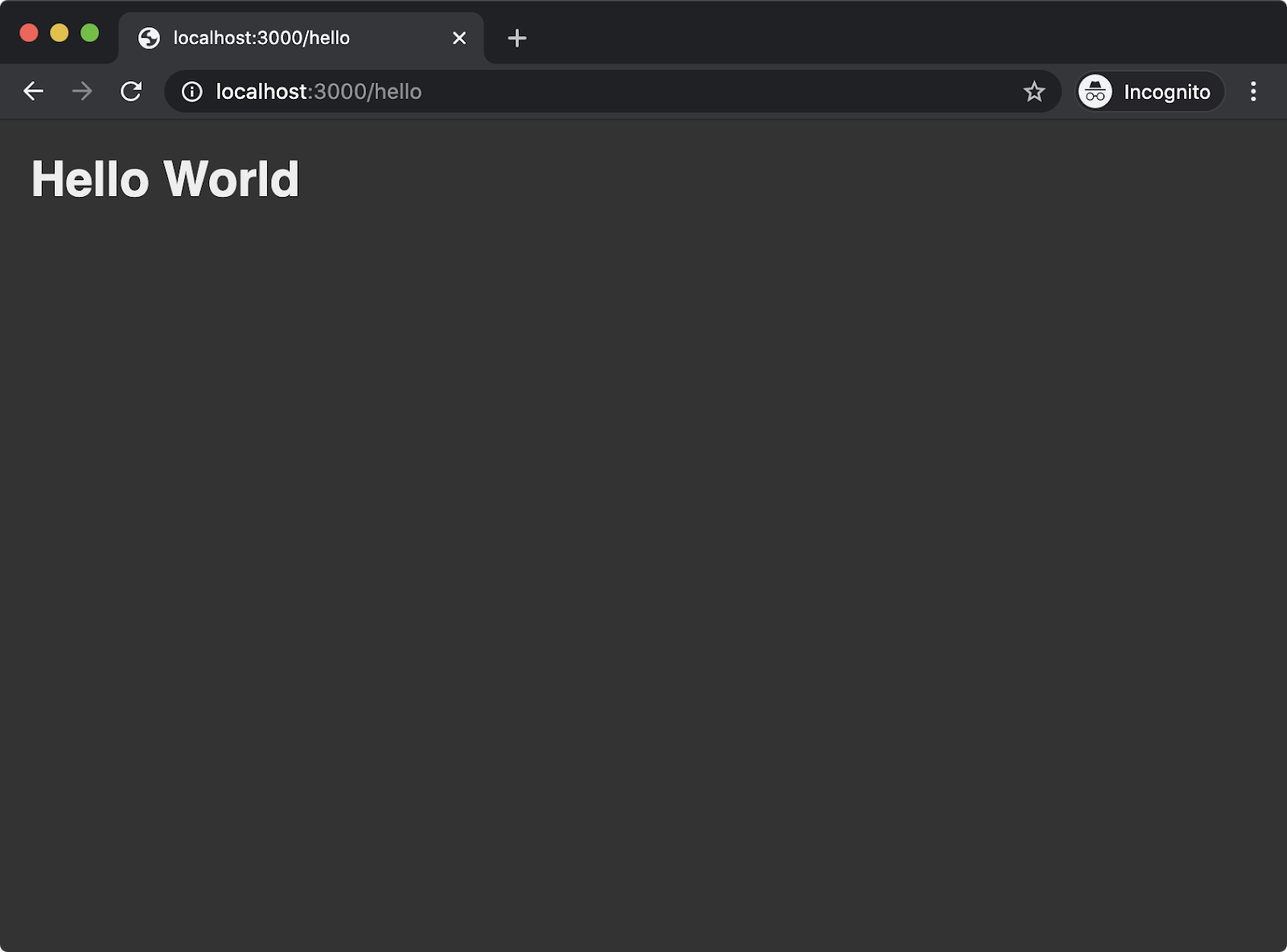

If we then navigate in a browser to http://localhost:3000, we should see something like the following:

So far we've used the Node core http module to create a server with the createServer method. We've also observed that a function is passed to createServer. This function is called every time the HTTP server receives a request. The function passed to createServer is passed two objects: the request object and the response object. These objects are created for every request and then passed to the function we supply to createServer.

In the function we passed to createServer, we use the setHeader and end methods of the response object (res) to set the Content-Type header to text/html, and to send the string of HTML (assigned to the hello constant) while also ending the response. The res object inherits from http.ServerResponse, which in turn inherits from http.OutgoingMessage (a Node core internal constructor), which then inherits from stream.Stream. For all practical purposes the res object is a writable stream, which is why calling end writes our content and also finishes the response.
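Because res is a writable stream, end is not the only way to send content: write can be called any number of times before end. The standalone sketch below (not part of server.js) demonstrates this. So that it can run to completion by itself, it listens on port 0 (any free port the OS picks), makes a single request with http.get, and then closes the server; the text/plain body is just for the demo.

```javascript
'use strict'
const http = require('http')

// res is a writable stream, so a body can be sent in several pieces:
// write() sends a chunk, end() sends an optional final chunk and finishes the response.
const server = http.createServer((req, res) => {
  res.setHeader('Content-Type', 'text/plain')
  res.write('Hello ')
  res.write('streaming ')
  res.end('world\n')
})

// Port 0 asks the OS for any free port, so this demo can't clash with port 3000.
const result = new Promise((resolve, reject) => {
  server.listen(0, '127.0.0.1', () => {
    const { port } = server.address()
    http.get(`http://127.0.0.1:${port}/`, (response) => {
      let body = ''
      response.setEncoding('utf8')
      response.on('data', (chunk) => { body += chunk })
      response.on('end', () => {
        server.close() // stop listening so the process can exit
        resolve({ statusCode: response.statusCode, body })
      })
    }).on('error', reject)
  })
})

result.then(({ statusCode, body }) => console.log(statusCode, JSON.stringify(body)))
```

The client receives the three chunks as one body, because the chunks are just successive writes to the same stream.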

The createServer method also returns an object which represents the server. We use the listen method to bind to a port. In our case, by default, we bind to port 3000. Our server can instead bind to a different port by setting the PORT environment variable.

Creating a Web Server with Node Core (2)

Our implementation does not yet meet our criteria. We can navigate to any route and the response will be the same. For instance, http://localhost:3000/foo:

Regardless of whether we use a POST, GET or any other HTTP verb, we will always get the same response.

Let's update our server.js code to the following:

```javascript
'use strict'
const url = require('url')
const http = require('http')
const PORT = process.env.PORT || 3000
const { STATUS_CODES } = http

const hello = `<html>
  <head>
    <style>
     body { background: #333; margin: 1.25rem }
     h1 { color: #EEE; font-family: sans-serif }
    </style>
  </head>
  <body>
    <h1>Hello World</h1>
  </body>
</html>`

const root = `<html>
<head>
  <style>
   body { background: #333; margin: 1.25rem }
   a { color: yellow; font-size: 2rem; font-family: sans-serif }
  </style>
</head>
<body>
  <a href='/hello'>Hello</a>
</body>
</html>
`

const server = http.createServer((req, res) => {
  res.setHeader('Content-Type', 'text/html')
  if (req.method !== 'GET') {
    res.statusCode = 405
    res.end(STATUS_CODES[res.statusCode] + '\r\n')
    return
  }
  const { pathname } = url.parse(req.url)
  if (pathname === '/') {
    res.end(root)
    return
  }
  if (pathname === '/hello') {
    res.end(hello)
    return
  }
  res.statusCode = 404
  res.end(STATUS_CODES[res.statusCode] + '\r\n')
})

server.listen(PORT)
```

We can stop the currently running server.js process with Ctrl+C and start our modified server.js file with the following command:

```sh
node server.js
```

Creating a Web Server with Node Core (3)

At the top of our server.js file we've added the Node core url module, and we've destructured the STATUS_CODES object from the http module; STATUS_CODES maps each HTTP status code to its standard status message. Just before creating our server we've also added a root constant, which contains an HTML string with an anchor tag linking to the /hello route.

We've updated the function passed to createServer with some new logic. We check the incoming request's HTTP verb by accessing the req.method property. If this is not set to GET, we set the statusCode of the res object to 405 (Method Not Allowed) and end the response with the appropriate status message.
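To see exactly which messages the STATUS_CODES lookup produces for the codes our server uses, it can be queried directly. This standalone snippet only reads from the http module.

```javascript
'use strict'
const { STATUS_CODES } = require('http')

// STATUS_CODES maps each numeric status code to its standard reason phrase
for (const code of [200, 404, 405]) {
  console.log(code, STATUS_CODES[code])
}
// 200 OK
// 404 Not Found
// 405 Method Not Allowed
```

As an aside, the HTTP specification (RFC 7231, section 6.5.5) requires a 405 response to include an Allow header listing the supported methods. Our server.js omits it; calling res.setHeader('Allow', 'GET') before ending the 405 response would add it.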

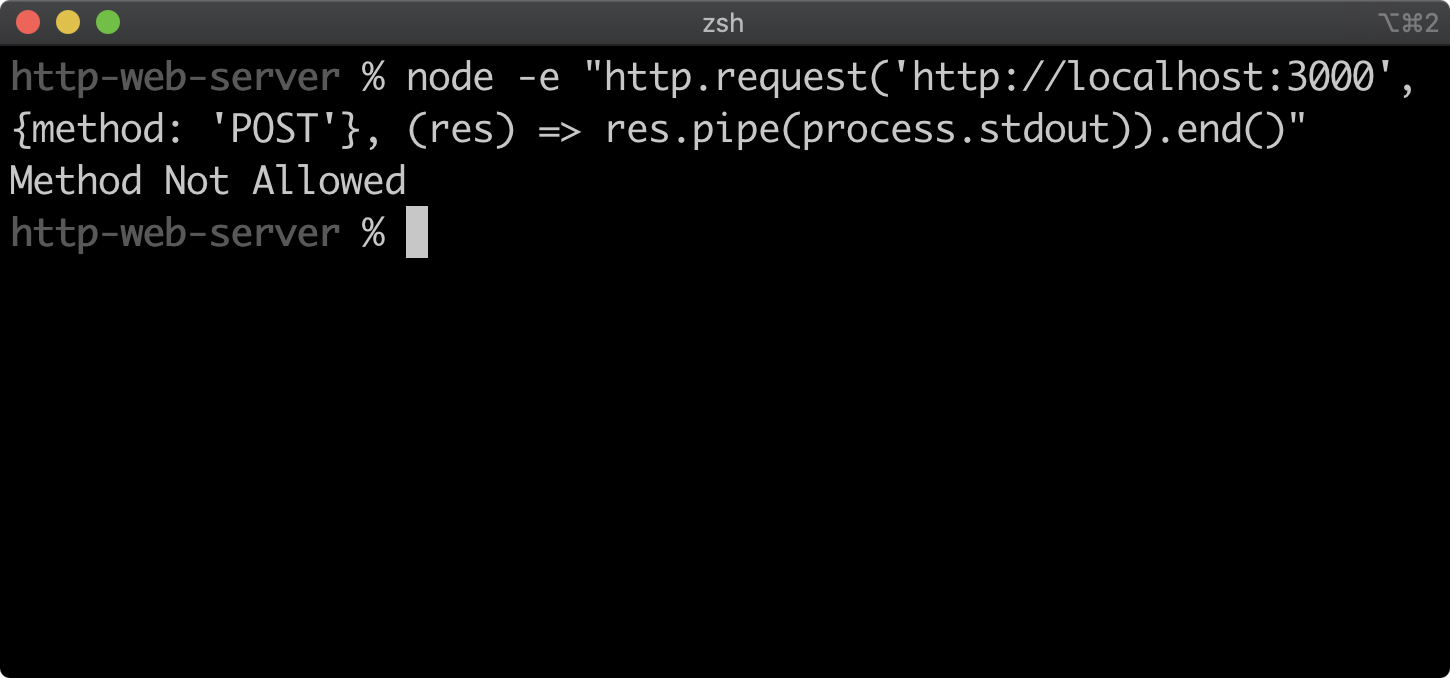

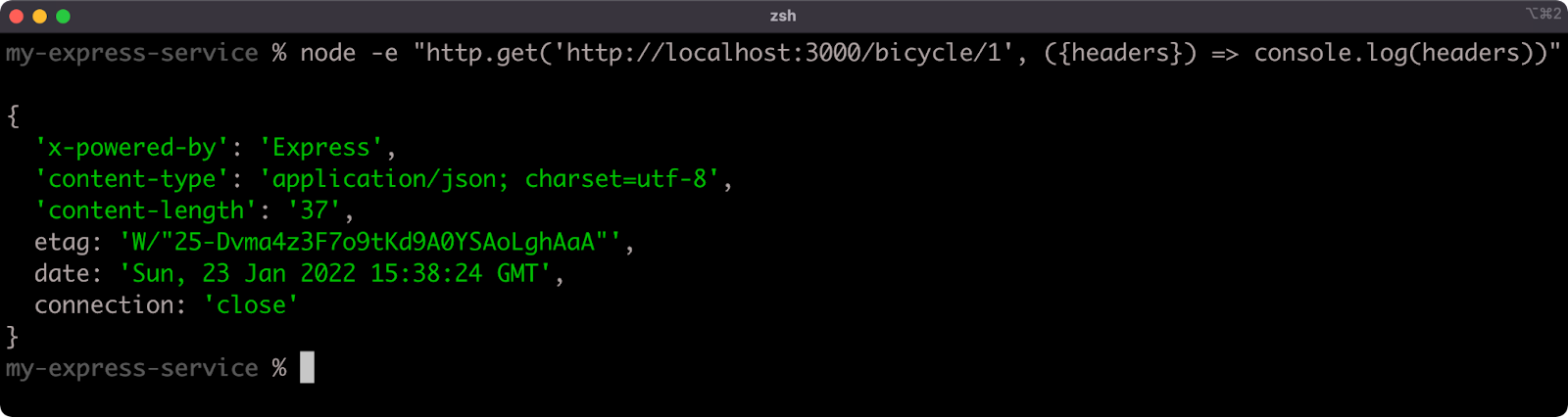

We can check whether this works by running the following command in another terminal window:

```sh
node -e "http.request('http://localhost:3000', {method: 'POST'}, (res) => res.pipe(process.stdout)).end()"
```

This command uses the http module to submit a POST request to our server and then prints the result to the terminal:

The Node core url module has a parse method which turns a URL string into an object containing various segments of the URL, such as host, protocol and pathname. See the Node.js Documentation to learn more.

The req.url property has a slightly misleading name. It does not hold the entire URL of an incoming request, only the relative path after the host portion. For instance a request to http://localhost:3000/hello will result in a req.url of /hello. The reason we pass req.url to url.parse is to separate any potential query string from the URL. Now, let's consider a request to http://localhost:3000/hello?foo=1. It would result in a req.url value of /hello?foo=1. Passing such a string to url.parse will result in an object with a pathname property of /hello.
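The parsing described above can be checked in isolation. The sketch below feeds the req.url value from the example to url.parse and, for comparison, to the WHATWG URL class, which is a global in current Node versions and is what the Node.js documentation recommends over the legacy url.parse. Because req.url is only a relative path, URL needs a base; 'http://localhost' here is an arbitrary placeholder, since only the pathname and query are read.

```javascript
'use strict'
const url = require('url')

// What req.url would hold for a request to http://localhost:3000/hello?foo=1
const reqUrl = '/hello?foo=1'

// Legacy API, as used in server.js
const legacy = url.parse(reqUrl)
console.log(legacy.pathname, legacy.query) // /hello foo=1

// WHATWG URL API: needs an absolute base because req.url is relative
const parsed = new URL(reqUrl, 'http://localhost')
console.log(parsed.pathname, parsed.searchParams.get('foo')) // /hello 1
```

Either way the query string is separated out, so the route checks in server.js only ever compare the pathname.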

If the pathname is / then we end the response with the contents of root and exit the function early with a return keyword. In this case, there is no need to set res.statusCode because the default res.statusCode is 200 (OK).

For more information on status codes see the MDN web docs.

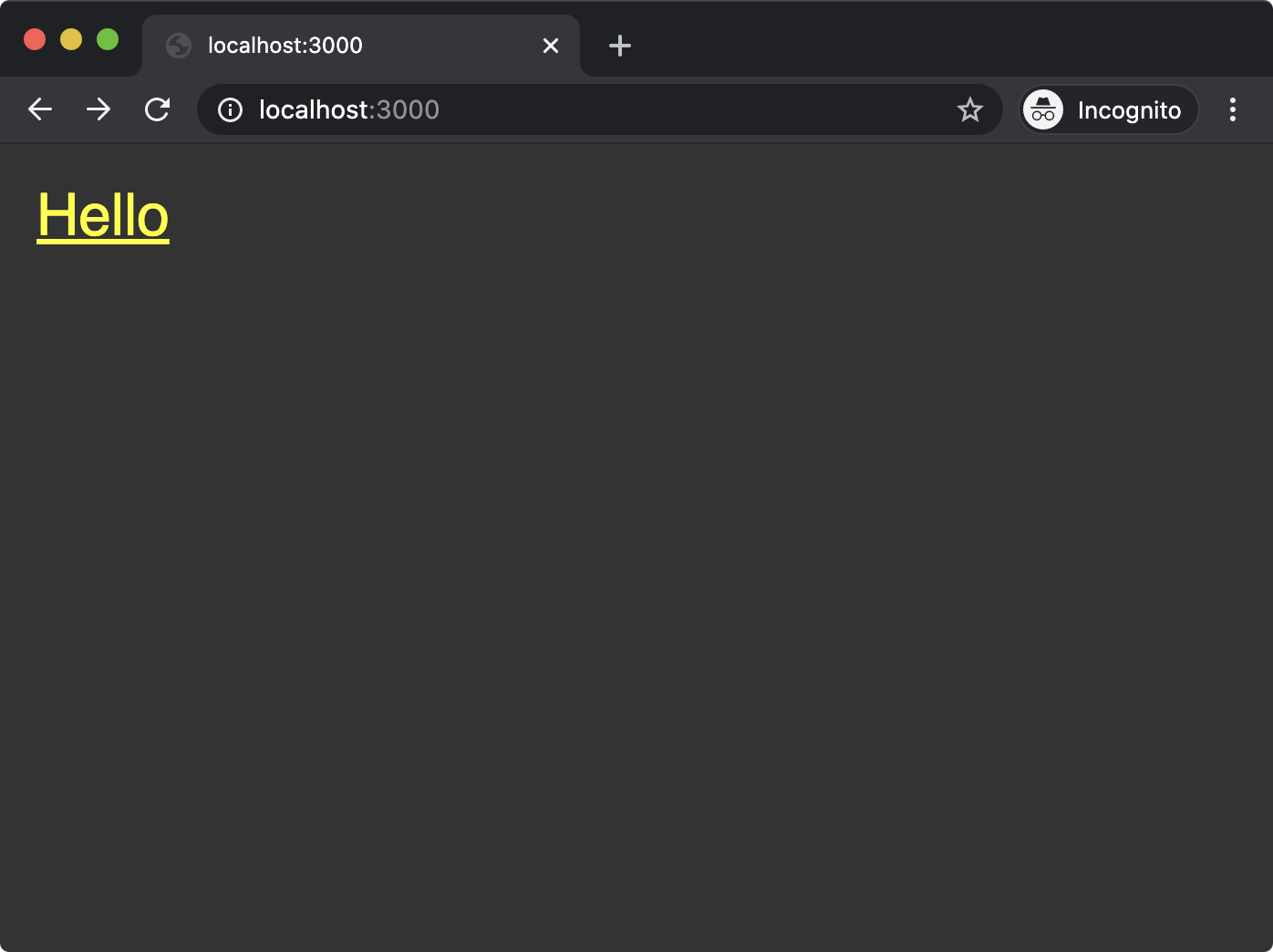

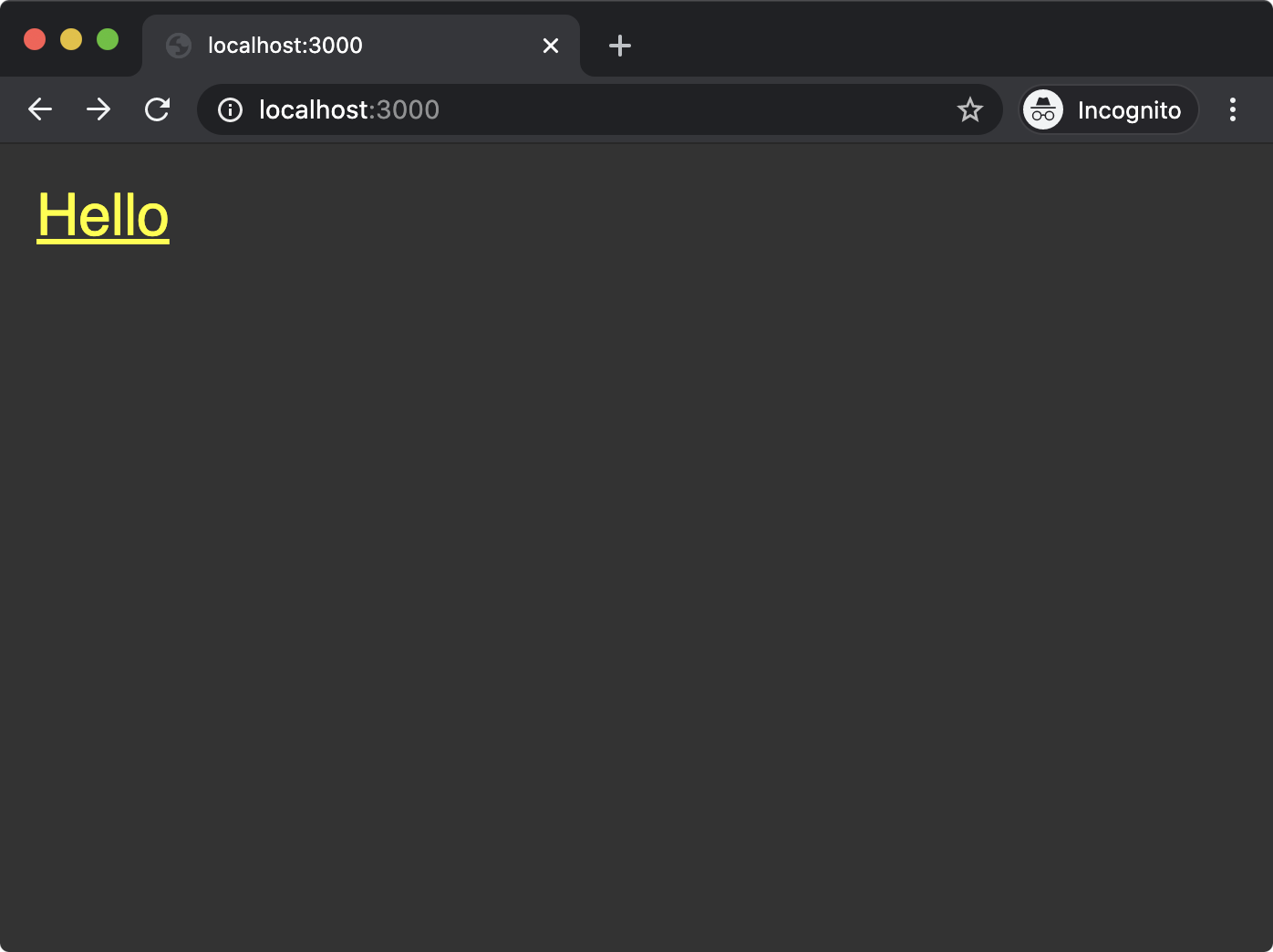

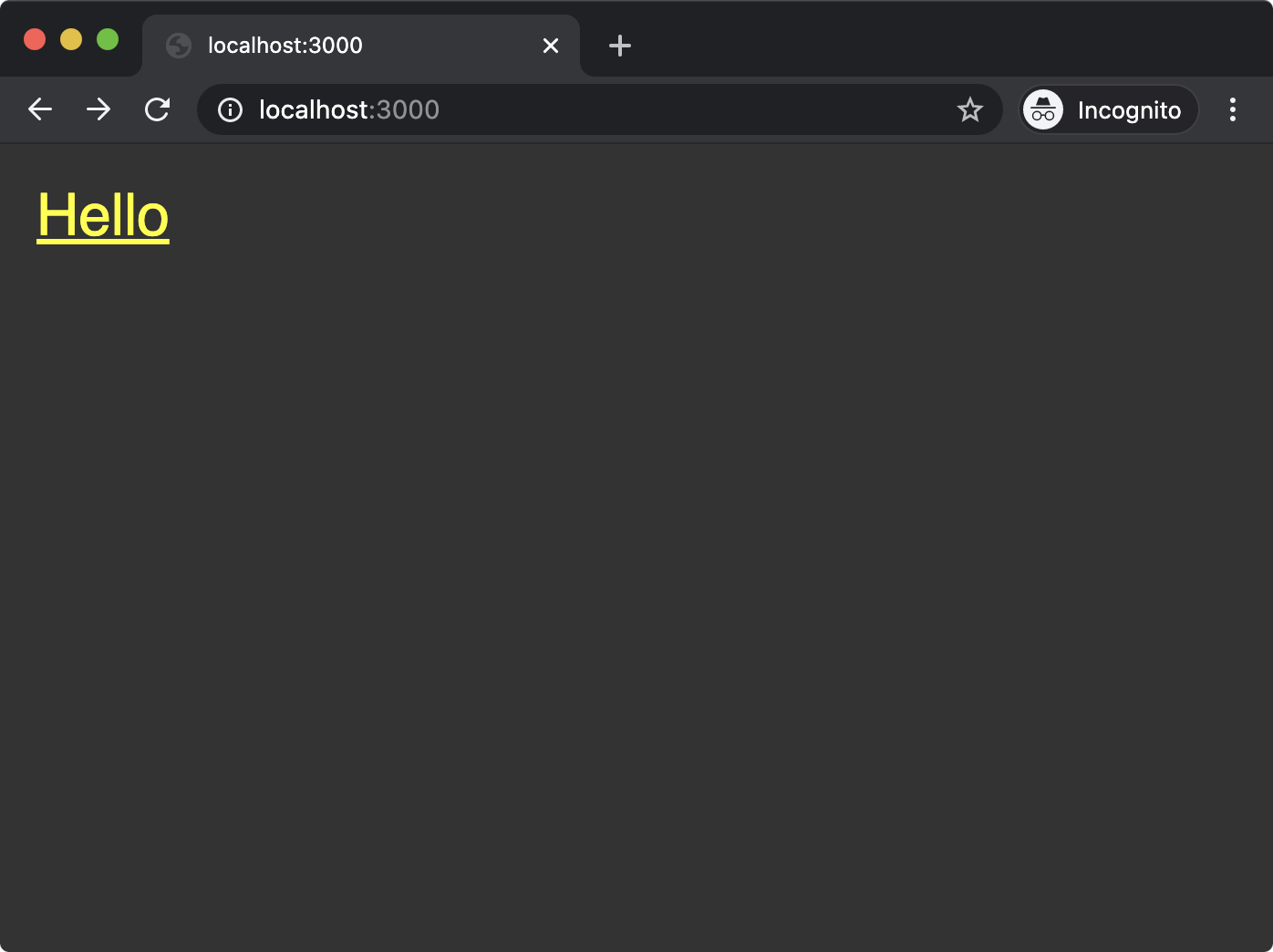

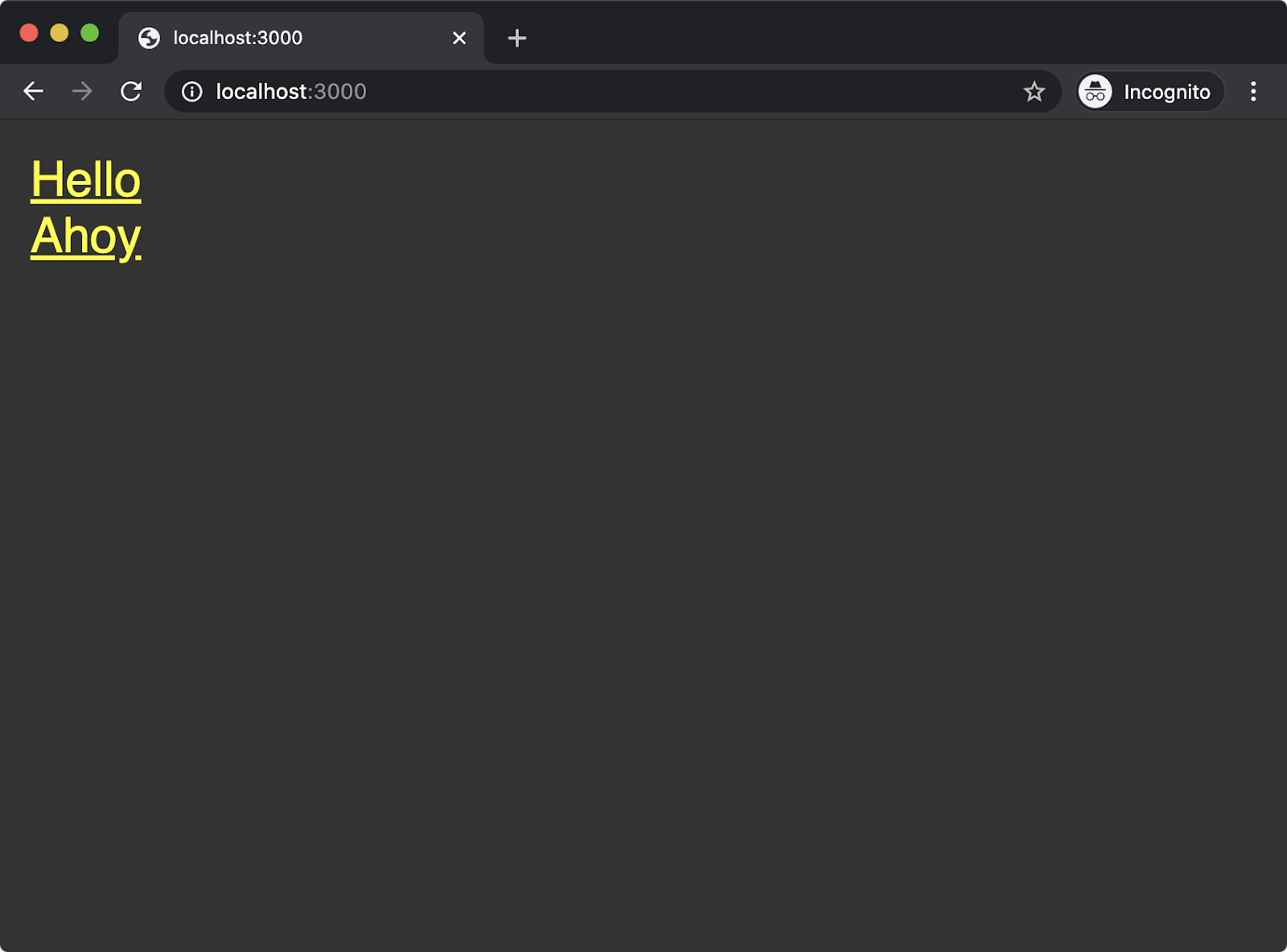

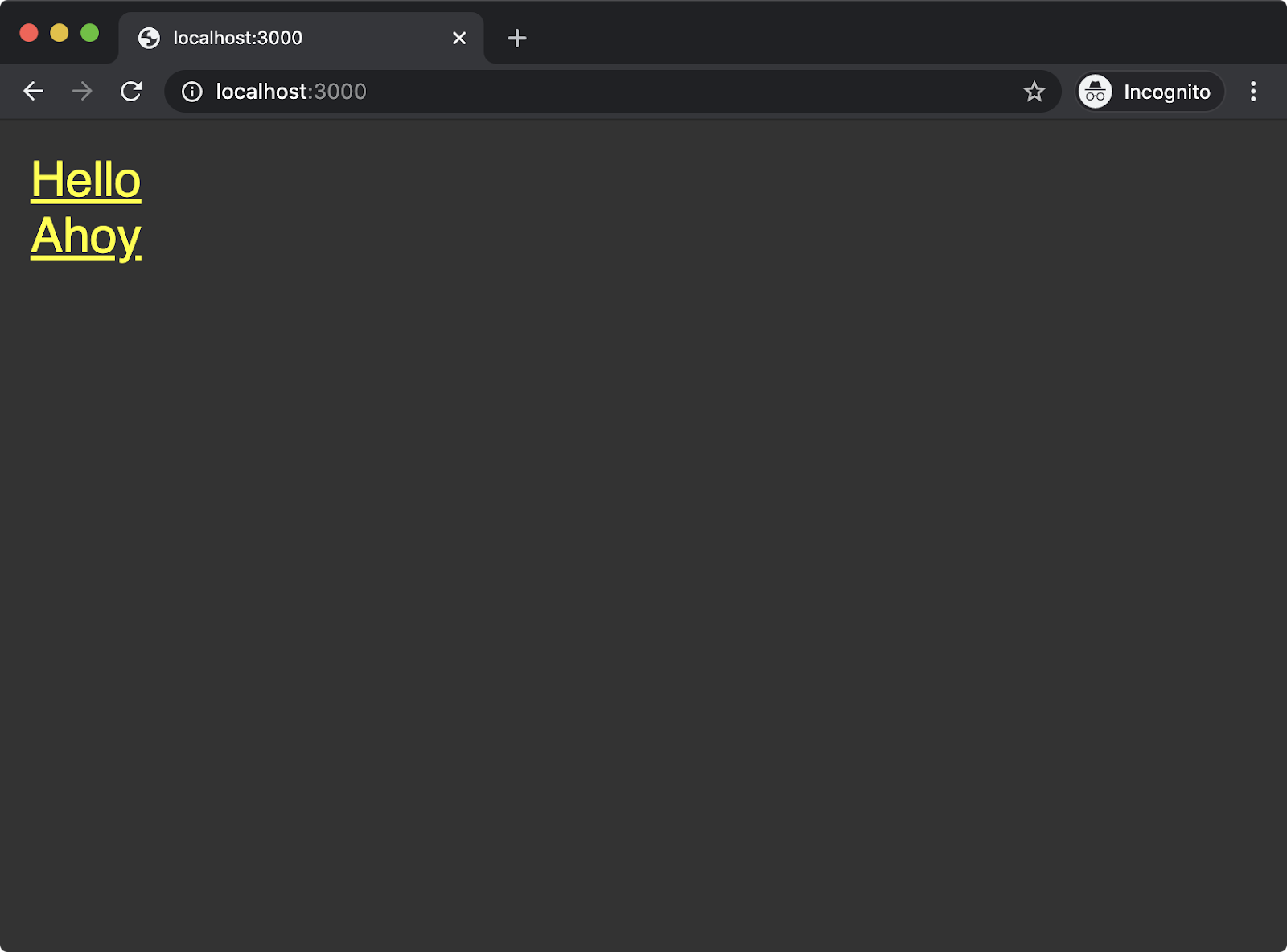

If we navigate to http://localhost:3000 in the browser we should see the following:

Creating a Web Server with Node Core (4)

If the pathname is /hello then we end the response with the contents of hello and exit the function. Again, no need to set the res.statusCode property.

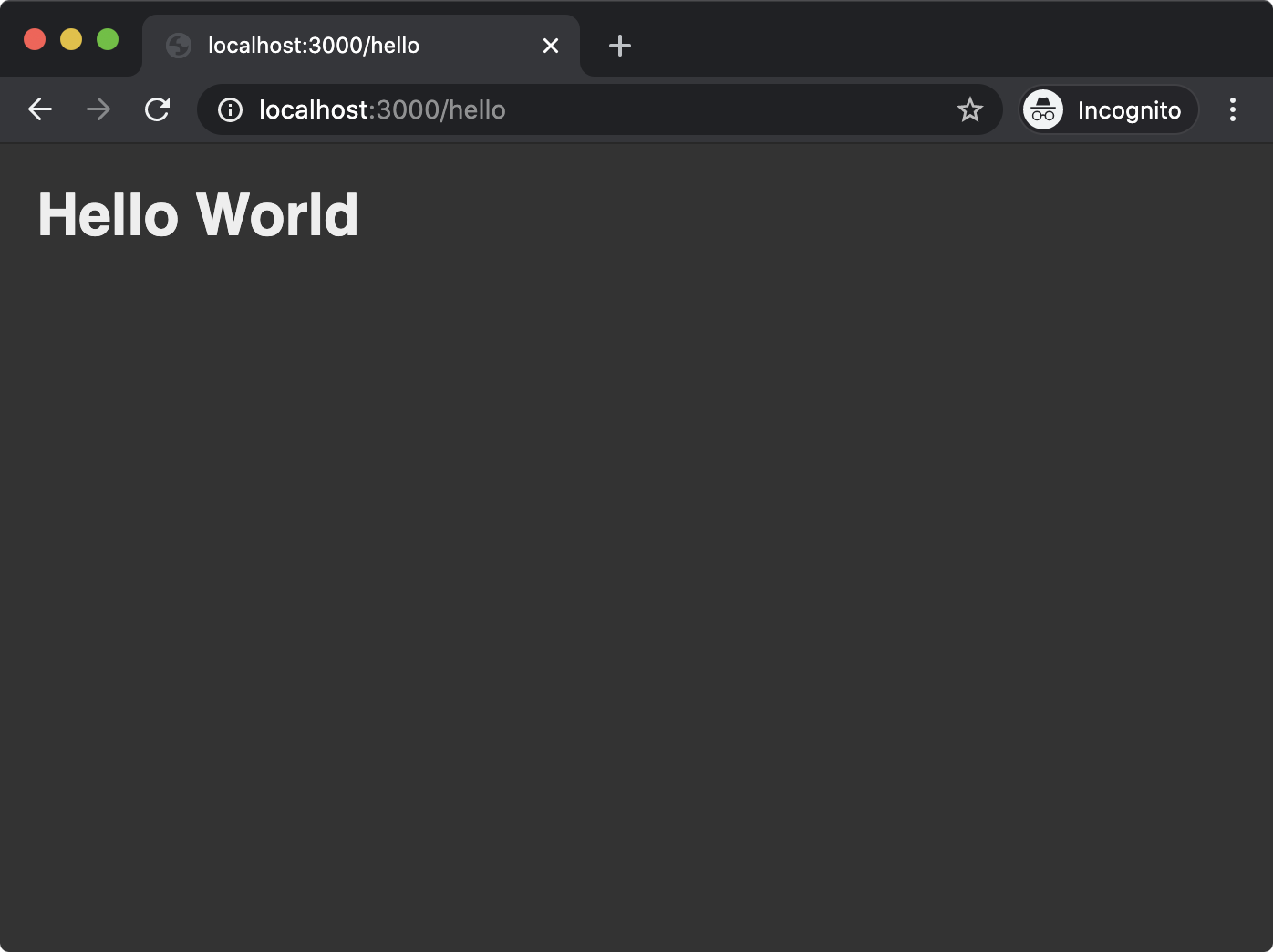

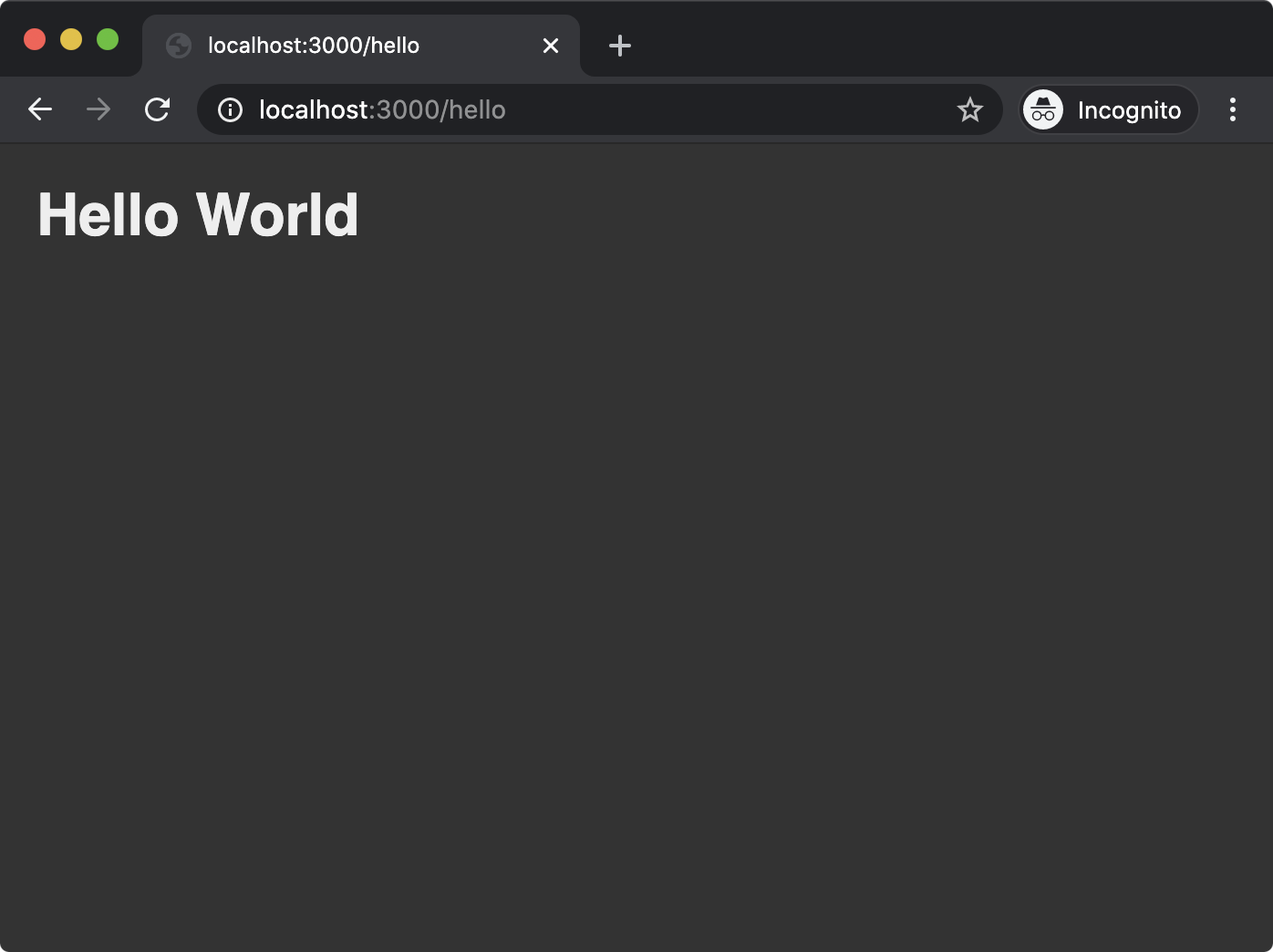

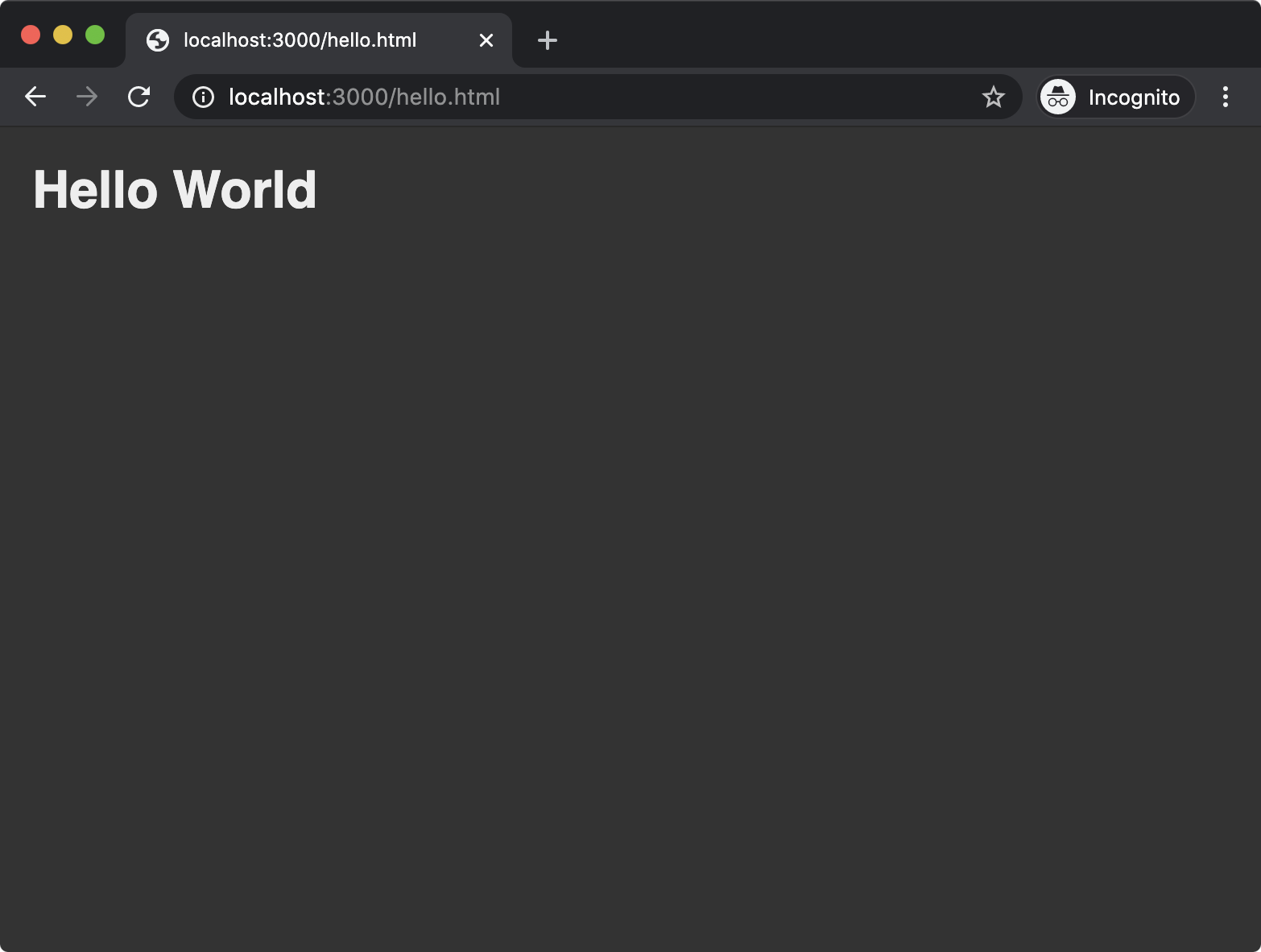

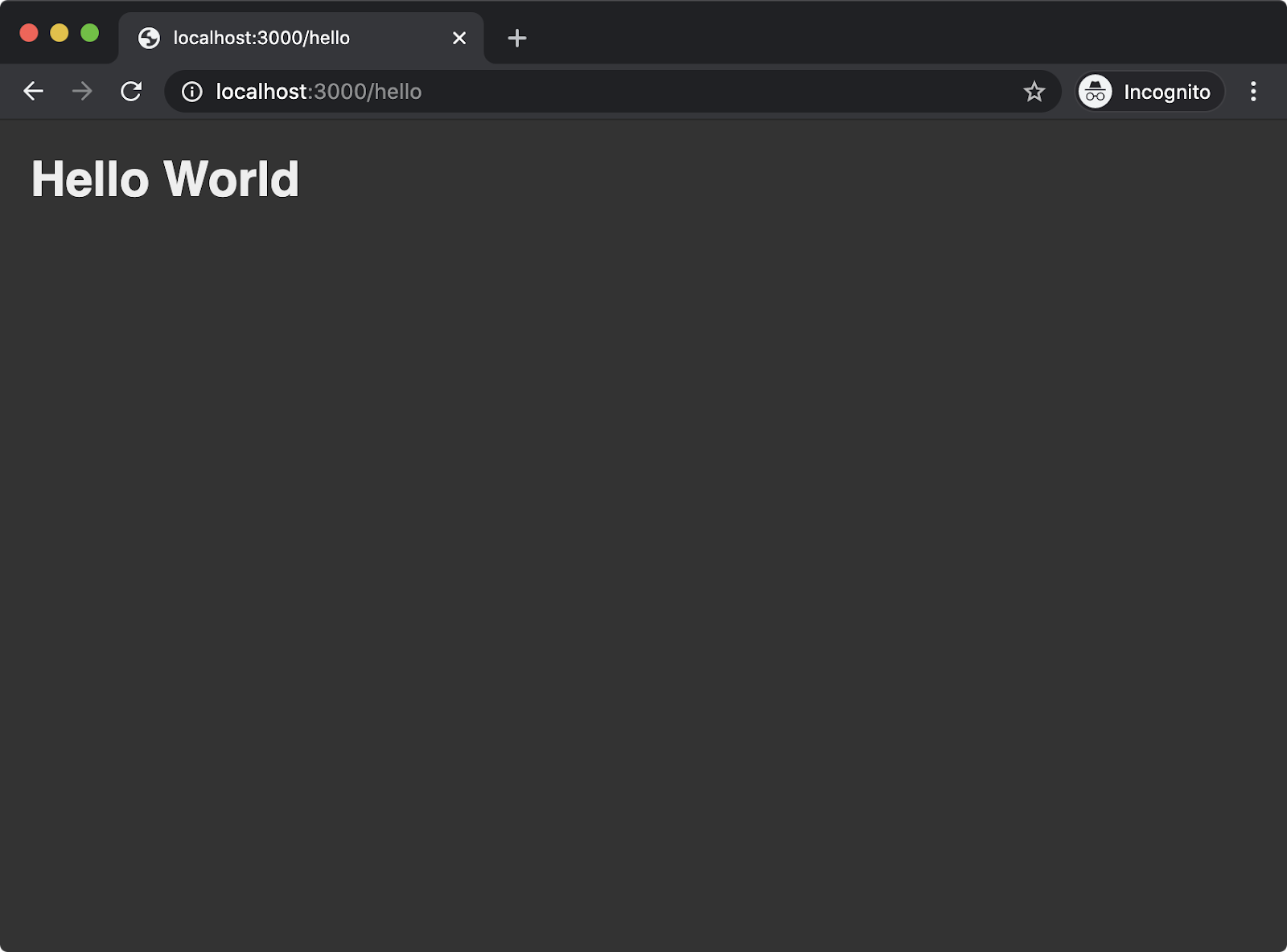

In the browser, upon clicking the link or manually navigating to http://localhost:3000/hello, we should see the following:

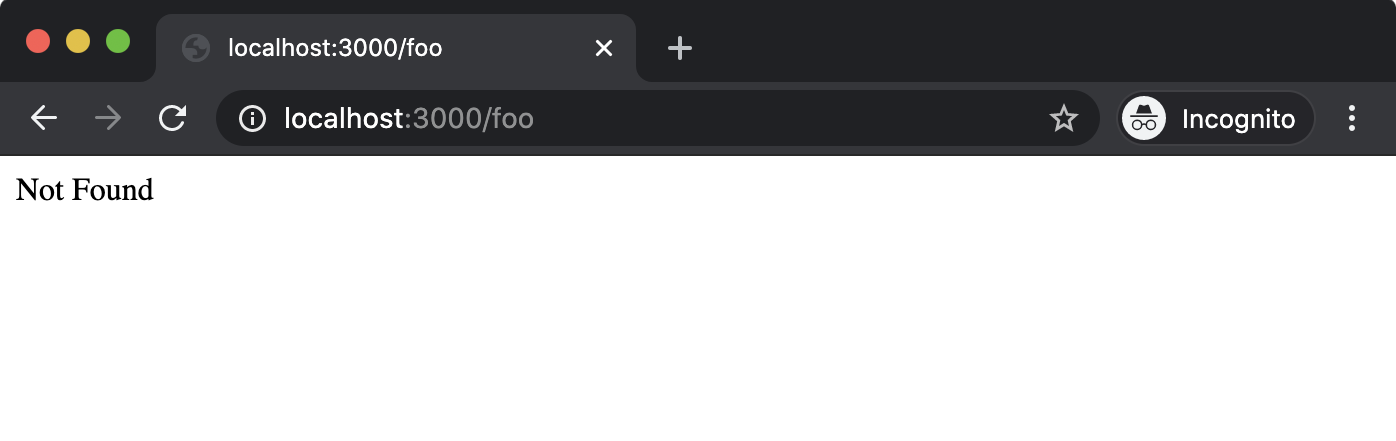

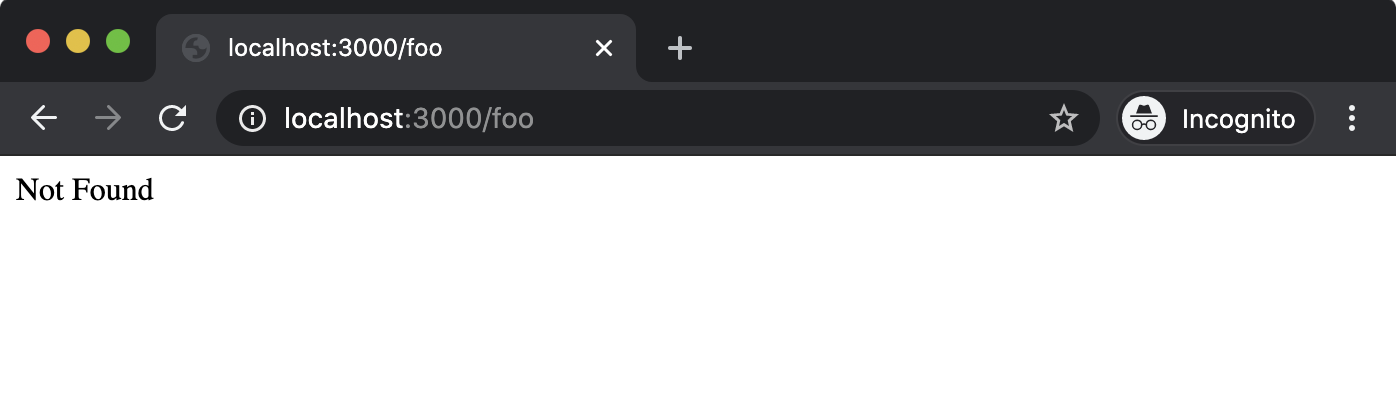

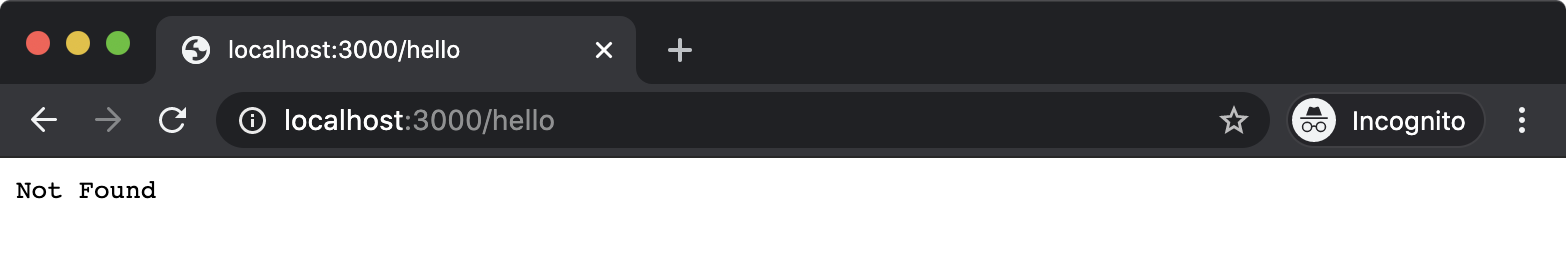

If pathname is neither /hello nor / the end of the function is reached, where the res.statusCode property is set to 404 and the response is ended with the corresponding status message (Not Found).

Navigating to http://localhost:3000/foo in the browser should result in the following:

We have now created a very basic web server. This procedural approach can become very rigid and unwieldy if we were to attempt to extend functionality over time. In the next sections we'll learn how to use the Express and Fastify frameworks to achieve the same results in a more flexible, declarative manner.
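To see the direction a framework takes, the chain of if statements can be replaced with a lookup table keyed by method and path, so that adding a route means adding an entry rather than another branch. This is a hypothetical sketch: the routes map and handler function are our own names, the bodies are plain text for brevity, and, unlike server.js, it answers 405 only when the path exists under another method (a POST to an unknown path gets 404).

```javascript
'use strict'
const { STATUS_CODES } = require('http')

// Hypothetical route table: 'METHOD /path' -> handler
const routes = new Map([
  ['GET /', (req, res) => res.end('root\n')],
  ['GET /hello', (req, res) => res.end('hello\n')]
])

function handler (req, res) {
  res.setHeader('Content-Type', 'text/plain')
  // req.url is relative, so a placeholder base is needed to parse it
  const { pathname } = new URL(req.url, 'http://localhost')
  const route = routes.get(`${req.method} ${pathname}`)
  if (route) {
    route(req, res)
    return
  }
  // The path exists under some other method: 405, otherwise 404
  const pathKnown = [...routes.keys()].some((key) => key.endsWith(` ${pathname}`))
  res.statusCode = pathKnown ? 405 : 404
  res.end(STATUS_CODES[res.statusCode] + '\r\n')
}

// Usage: require('http').createServer(handler).listen(3000)
module.exports = handler
```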

Creating a Web Server with Express (1)

Now that we understand what it takes to create a rudimentary web server using Node.js core http and url modules, let's turn our attention to expediting the process of creating, extending and maintaining Node.js web servers.

Express is one of the most widely used Node.js frameworks, especially when it comes to generating and delivering HTML dynamically, as opposed to delivering RESTful JSON content as a service.

The core development efforts of the Express project have been stagnant since around 2017. Despite renewed interest in modernizing the framework from the OSS community and technology industry circa 2020, it has remained in maintenance mode. In this course, we'll be focusing on Express 4, which, although it was first released 8 years ago, is the latest major version at the time of writing (February 2022).

Even after the release of the next major version (Express 5, last alpha was two years ago, ETA unknown), understanding version 4 is essential from a pragmatic perspective since so many legacy code bases have been built using Express 3 and 4, which are fairly similar to each other.

Let's explore Express 4. First, create:

- an express-web-server folder with an app.js file,

- a routes folder with index.js and hello.js files, and

- a bin folder with a www file:

```sh
node -e "fs.mkdirSync('express-web-server')"
cd express-web-server
node -e "fs.mkdirSync('routes')"
node -e "fs.mkdirSync('bin')"
node -e "fs.openSync('app.js', 'w')"
cd routes
node -e "fs.openSync('index.js', 'w')"
node -e "fs.openSync('hello.js', 'w')"
cd ../bin
node -e "fs.openSync('www', 'w')"
cd ..
```

Next, we'll generate a package.json file for the project and then install Express and another package called http-errors:

```sh
npm init -y
npm install express@4 http-errors@2
```

The generated package.json file should look similar to the following:

```json
{
  "name": "express-web-server",
  "version": "1.0.0",
  "description": "",
  "main": "app.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "express": "^4.17.2",
    "http-errors": "^2.0.0"
  }
}
```

We need to modify the scripts object in the package.json file to the following:

```json
"scripts": {
  "test": "echo \"Error: no test specified\" && exit 1",
  "start": "node ./bin/www"
},
```

Here we've added a start script, so when we run npm start the bin/www file will be executed with Node.

We're using this folder structure for the sake of familiarity: it's a conventional Express structure. A tool called express-generator generates this structure with some other additions. We'll use the generator in the next chapter; for now we're concentrating on the bare bones needed to create the equivalent of the minimum viable web server we implemented in the previous section.

Creating a Web Server with Express (2)

We're going to build the app.js file iteratively. For our first iteration app.js should look as follows:

```javascript
'use strict'
const express = require('express')

const app = express()

module.exports = app
```

All we're doing here is instantiating an Express instance and exporting it from the app.js file as a module.

The bin/www file is the entry point for the application, and is responsible for starting the server. It should look as follows:

```javascript
#!/usr/bin/env node
'use strict'

const app = require('../app')
const http = require('http')

const PORT = process.env.PORT || 3000

const server = http.createServer(app)

server.listen(PORT)
```

In the previous section we learned about the http.createServer method. It takes a function which is passed a request and response object. In bin/www we import the app.js file we just wrote, assign it to the app constant and then pass that to http.createServer. This works because an Express instance is itself a function that accepts a request object and a response object. In the next steps we'll configure our Express app instance, which will change how the function passed to http.createServer behaves.

We should now be able to get our server up and running with the following command:

```sh
npm start
```

This command will look up the package.json scripts.start field and then run the command (node ./bin/www). The bin/www file will create a server with the Node core http.createServer method, but use the app.js file to generate the function which is passed to createServer. Like our HTTP server in the previous section, once the process has started, it will not output anything.

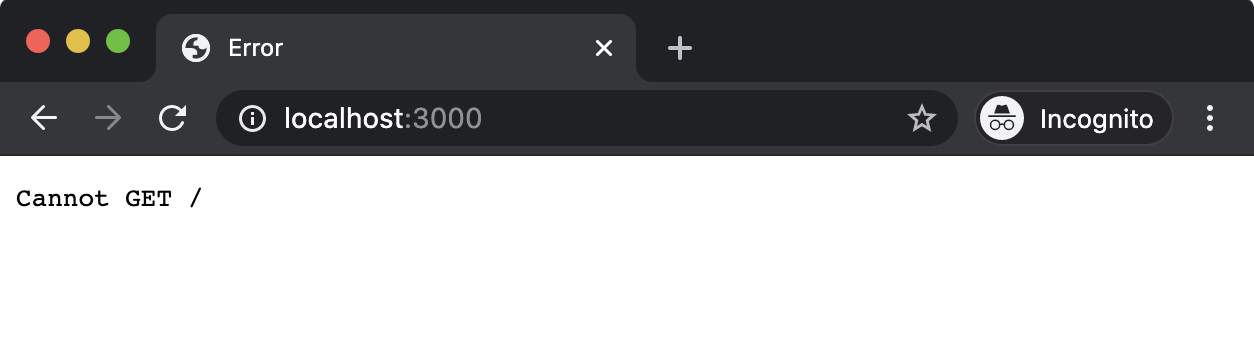

If we navigate in a browser to http://localhost:3000, we should see the following output:

We haven't defined any routes, so the generated Express function will default to this behavior. Before we create our routes, let's align the error handling with our HTTP server from the previous section by modifying app.js to the following:

```javascript
'use strict'
const express = require('express')
const createError = require('http-errors')

const app = express()

app.use((req, res, next) => {
  if (req.method !== 'GET') {
    next(createError(405))
    return
  }
  next(createError(404))
})

app.use((err, req, res, next) => {
  res.status(err.status || 500)
  res.send(err.message)
})

module.exports = app
```

Creating a Web Server with Express (3)

Configuring an Express server's behavior is almost always performed with app.use (where app is the Express instance). The app.use method takes a function which is very similar to the function that is passed to http.createServer. The function will be called for every incoming request, and it will be passed a request object and a response object.

The difference between the function passed to app.use and the function passed to http.createServer is that it can also have a third parameter called next. This is an error-first callback function that is called when the function passed to app.use has completed any tasks and is ready to hand over to the subsequent function registered via app.use. This means that instead of passing one big function to http.createServer, multiple functions can be registered via app.use. They will be called in order of registration, each one handing over to the following one when it's done processing. If next is not called, then the request handling ends there and none of the subsequently registered functions are called for that request. This approach is known as the middleware pattern, and middleware functions are the building blocks for configuring an Express server.
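The hand-over mechanism can be sketched in a few lines. The createApp function below is a toy written for illustration, not Express's implementation (it has no routing and no error handling), but it shows why an app built this way is itself a (req, res) function that could be passed to http.createServer, and how not calling next stops the chain.

```javascript
'use strict'

// Toy middleware runner (illustration only, not Express's code)
function createApp () {
  const stack = []
  // The app itself is a (req, res) function, like an Express instance
  function app (req, res) {
    let index = 0
    // Each call to next runs the following registered function, if any
    function next () {
      const fn = stack[index++]
      if (fn) fn(req, res, next)
    }
    next()
  }
  app.use = (fn) => { stack.push(fn) }
  return app
}

const app = createApp()
app.use((req, res, next) => {
  req.log = ['first']
  next()
})
app.use((req, res, next) => {
  req.log.push('second') // next is not called, so the chain ends here
})
app.use((req, res, next) => {
  req.log.push('never reached')
})

const req = {}
app(req, {})
console.log(req.log) // [ 'first', 'second' ]
```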

In our case we've configured two pieces of middleware. The first middleware function we registered should always be the second-to-last middleware. Essentially, if this middleware has been reached then we can assume that no routes were matched. Therefore we generate a 404 error using the http-errors module (part of the Express ecosystem). The http-errors module will generate an appropriate message for any HTTP status code passed to it. We then pass this error object as the first argument to the next callback function, which lets Express know that an error has occurred. If we find that the req.method property does not have the value GET, we pass a 405 (Method Not Allowed) error instead. This matches the functionality in our HTTP server implementation from the first section. Currently, we have no routes registered, so a 404 error is the default for any HTTP GET request.

The last piece of middleware in our modified app.js file must always remain the final middleware. This registered middleware specifies four parameters instead of the usual three. This makes Express recognize it as error-handling middleware, and Express passes the error object that we passed to next in the prior middleware as the first argument of this special error-handling function. From there we can grab the HTTP status code from the error object and use it to set the response status code. Notice that we use a res.status function instead of setting the res.statusCode property. Similarly, we can use res.send instead of res.end to write and end the response. This is another method added by Express: it detects the Content-Type from the input and potentially performs additional operations. For instance, if an object is passed to res.send, that object is serialized to JSON and the response Content-Type is automatically set to application/json.
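Express tells error-handling middleware apart from regular middleware by how many parameters the function declares (its length property), which is why all four parameters must be listed even if next is unused. The run function below is a simplified, hypothetical dispatcher that mimics that rule; it is not Express's code. Note that default parameter values or rest parameters reduce a function's length, so they would hide an error handler from a check like this.

```javascript
'use strict'

// Simplified dispatcher (illustration only): functions declaring four
// parameters are treated as error handlers, the rest as regular middleware.
function run (stack, req, res) {
  let index = 0
  function next (err) {
    const fn = stack[index++]
    if (!fn) return
    const isErrorHandler = fn.length === 4
    if (err) {
      // While an error is pending, regular middleware is skipped
      if (isErrorHandler) fn(err, req, res, next)
      else next(err)
    } else if (isErrorHandler) {
      next() // no error: error handlers are skipped
    } else {
      fn(req, res, next)
    }
  }
  next()
}

const response = {}
run([
  (req, res, next) => next(Object.assign(new Error('Not Found'), { status: 404 })),
  (req, res, next) => { res.reached = true }, // skipped because an error is pending
  (err, req, res, next) => {
    res.statusCode = err.status || 500
    res.body = err.message
  }
], {}, response)

console.log(response) // { statusCode: 404, body: 'Not Found' }
```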

Even though the req and res objects are generated by the http module and have all of the same functionality, Express decorates the req and res objects with additional functionality. We could not have used res.status or res.send in the previous section because these functions did not exist. Some, including this author, view Express' decorator approach on core APIs as a mistake. By conflating Node core APIs with Express APIs on the same objects the principles of least surprise and separation of concerns are violated, while also causing performance issues. However, so much legacy code has been written with Express it's important to understand its APIs.
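To make the decoration concrete, the sketch below adds status and send methods onto a core response object. It is a simplified, hypothetical stand-in for what Express does (decorate is our own name; Express's real res.send handles many more cases and adds a charset to the Content-Type), but it shows how framework methods end up living on the same object as the core API.

```javascript
'use strict'
const http = require('http')

// Hypothetical decorator: attaches Express-like helpers to a core response object
function decorate (res) {
  res.status = function (code) {
    this.statusCode = code
    return this // returning res allows chaining: res.status(404).send('...')
  }
  res.send = function (body) {
    if (body !== null && typeof body === 'object' && !Buffer.isBuffer(body)) {
      // Plain objects are serialized to JSON
      this.setHeader('Content-Type', 'application/json')
      body = JSON.stringify(body)
    } else if (typeof body === 'string' && !this.getHeader('Content-Type')) {
      // Strings default to HTML unless a Content-Type was already set
      this.setHeader('Content-Type', 'text/html')
    }
    this.end(body)
  }
  return res
}

// Every request gets its response object decorated before the handler uses it
const server = http.createServer((req, res) => {
  decorate(res).status(200).send({ hello: 'world' })
})

module.exports = { decorate, server } // call server.listen(3000) to try it
```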

Let's move on to creating our routes. The routes/index.js file should look as follows:

```javascript
'use strict'
const { Router } = require('express')
const router = Router()

const root = `<html>
<head>
  <style>
   body { background: #333; margin: 1.25rem }
   a { color: yellow; font-size: 2rem; font-family: sans-serif }
  </style>
</head>
<body>
  <a href='/hello'>Hello</a>
</body>
</html>
`

router.get('/', (req, res) => {
  res.send(root)
})

module.exports = router
```

Note that inlining HTML like this is uncommon and it was used here only for demonstration purposes. Usually, HTML would be dynamically generated with a template language or maybe a Server Side Rendering of a frontend framework such as React. We'll look briefly at templates in the next chapter.

Creating a Web Server with Express (4)

To add a route we create an instance of the Express router, and then use its get method to define a GET route. The router supports all HTTP verbs (e.g. POST, PUT and so forth). In each case when a router HTTP verb method is called the first argument passed to it is a string declaring the path for the route. In this case we just use /, since this is our root route. The second argument is a route middleware function. In our case we simply send our HTML string (root) to the response. The router instance is exported from the routes/index.js file, and we'll later import it into app.js in order to register it with the Express application instance.

Now, let's create our /hello route. The routes/hello.js file should contain the following:

```javascript
'use strict'
const { Router } = require('express')
const router = Router()

const hello = `<html>
  <head>
    <style>
     body { background: #333; margin: 1.25rem }
     h1 { color: #EEE; font-family: sans-serif }
    </style>
  </head>
  <body>
    <h1>Hello World</h1>
  </body>
</html>`

router.get('/', (req, res) => {
  res.send(hello)
})

module.exports = router
```

This is very similar to the root route. Note that we define the route path as / in this case as well, instead of /hello. This is because we'll be mounting this router at the /hello route path in app.js instead. This pattern allows for easy renaming of routes at the top level.

Finally, let's register our routes in app.js, by modifying app.js to the following:

```javascript
'use strict'
const express = require('express')
const createError = require('http-errors')
const indexRoutes = require('./routes')
const helloRoutes = require('./routes/hello')

const app = express()

app.use('/', indexRoutes)
app.use('/hello', helloRoutes)

app.use((req, res, next) => {
  if (req.method !== 'GET') {
    next(createError(405))
    return
  }
  next(createError(404))
})

app.use((err, req, res, next) => {
  res.status(err.status || 500)
  res.send(err.message)
})

module.exports = app
```

Creating a Web Server with Express (5)

We imported both routes/index.js and routes/hello.js, and assigned them to indexRoutes and helloRoutes respectively. Then above our 404/405 error handler middleware, we registered the imported express.Router instances with app.use. The app.use function can optionally take a mount point, which is a string representing a route path. This means it will only apply the registered middleware (the express.Router instances are also middleware functions) when incoming requests match that path. We mount the indexRoutes at the / route path and the helloRoutes at the /hello route path.
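Mounting also changes what the mounted router sees: inside a router mounted at /hello, a request for /hello arrives with req.url set to /, which is why routes/hello.js declares its route as /. Express records the stripped prefix on req.baseUrl. The mount function below is a simplified, hypothetical illustration of that rewriting, not Express's implementation, which handles many more details.

```javascript
'use strict'

// Hypothetical helper: run `handler` only for requests under `prefix`, with
// req.url rewritten relative to the mount point (roughly what app.use(path, router) does)
function mount (prefix, handler) {
  // Mounting at the root strips nothing, so the handler can be used as-is
  if (prefix === '/') return handler
  return (req, res, next) => {
    const originalUrl = req.url
    const inScope = originalUrl === prefix ||
      originalUrl.startsWith(prefix + '/') ||
      originalUrl.startsWith(prefix + '?')
    if (!inScope) {
      next()
      return
    }
    const rest = originalUrl.slice(prefix.length)
    req.baseUrl = prefix
    req.url = rest.startsWith('/') ? rest : '/' + rest // '/hello' -> '/', '/hello?x=1' -> '/?x=1'
    handler(req, res, () => {
      req.url = originalUrl // restore the full path before handing over
      next()
    })
  }
}

module.exports = mount
```

Note that /helloworld is not treated as being under /hello: only an exact match, a following slash or a query string counts.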

We need to start a server with our new changes. If our server is still running, we can stop it with Ctrl+C and then start it again with the following command:

```sh
npm start
```

Now, if we navigate in a browser to http://localhost:3000, we should see the following:

If we click the link (or navigate to http://localhost:3000/hello), we should see the following:

If we navigate to a non-existent route, we should see the following:

This section has been a deep-end dive into Express fundamentals. Throughout the course we'll continue to explore Express and retrace key topics.

Creating a Web Server with Fastify (1)

Fastify is an up-and-coming framework in the Node.js ecosystem. It's specifically geared towards creating RESTful JSON services but can also be used for serving HTML as in our example implementation in the previous two sections.

Instead of middleware, Fastify supports a plugin-based pattern which provides full code isolation and encapsulation. We'll explore this more in this section.

Fastify explicitly supports newer language features (such as async/await), has a focus on modern developer experience and is the most performant framework in the Node.js ecosystem. Not only that but Fastify also provides full Express integration via the fastify-express plugin. This means that the vast Express ecosystem can be used with Fastify (often at higher requests per second than using the same middleware with Express!), and entire Express projects can be encapsulated in a Fastify plugin and used as part of a Fastify project.

In this section, however, we'll implement the same server created in the previous two sections but with Fastify. Let's start by making a folder:

```sh
node -e "fs.mkdirSync('fastify-web-server')"
cd fastify-web-server
```

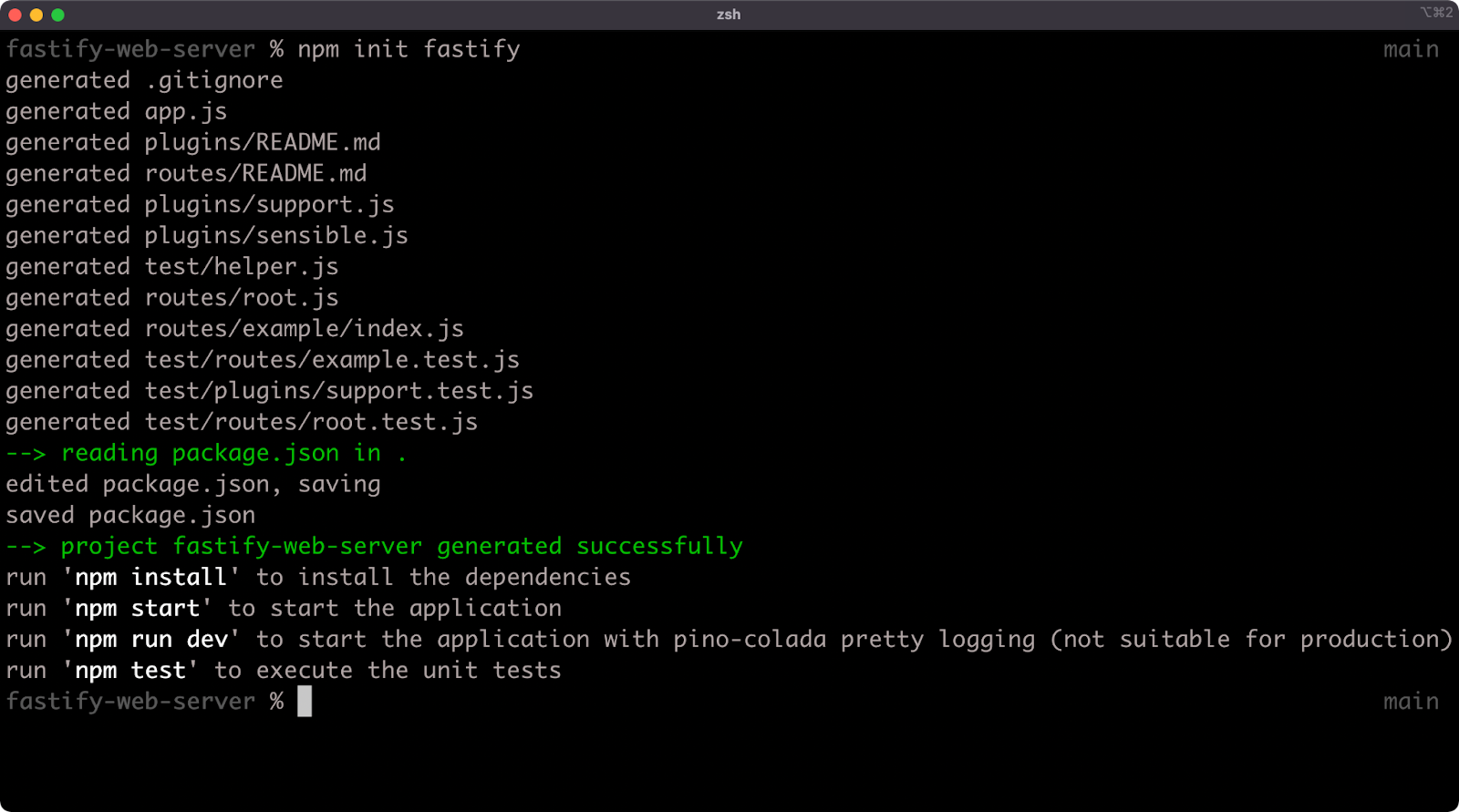

Now, we can run the following command to bootstrap a Fastify project:

```sh
npm init fastify
```

It's important to ensure that this command is executed within the fastify-web-server folder otherwise files will be added to unintended locations.

This command should produce output similar to the following:

As we can see from the output, the npm init fastify command creates some files in the current working directory. The following files and folders are generated:

- .gitignore

- package.json

- app.js

- plugins/README.md

- test/helper.js

- routes/README.md

- plugins/support.js

- routes/root.js

- test/routes/example.test.js

- test/plugins/support.test.js

- routes/example/index.js

- test/routes/root.test.js

We'll only need to edit the app.js, routes/root.js and routes/example/index.js files to create the same implementation as in the previous two sections.

Creating a Web Server with Fastify (2)

Before doing anything else, let's make sure that the project dependencies are installed. In the same folder, let's run the following command:

```sh
npm install
```

While that's running, let's take a look at the contents of the app.js file (any comments have been stripped):

```javascript
'use strict'

const path = require('path')
const AutoLoad = require('fastify-autoload')

module.exports = async function (fastify, opts) {
  fastify.register(AutoLoad, {
    dir: path.join(__dirname, 'plugins'),
    options: Object.assign({}, opts)
  })

  fastify.register(AutoLoad, {
    dir: path.join(__dirname, 'routes'),
    options: Object.assign({}, opts)
  })
}
```

The app.js file is the entry point for the project and exports an async function. A Fastify plugin is a function that accepts a server instance and options as parameters. It may either accept a third parameter, a next callback, or return a promise (which is what an async function does). So the app.js file is actually exporting a Fastify plugin.

The server instance that is passed as the first argument to this function is named fastify. Additional plugins are registered with the register method. In this case, a single plugin is registered twice. The fastify-autoload plugin automatically loads folders of plugins, so all app.js is doing is setting up a convenient way for us to define and work with plugins and routes. In both places where fastify.register is called, the fastify-autoload plugin (AutoLoad) is passed as the first argument and an object is passed as the second argument. This second argument holds the options for the AutoLoad plugin. The dir option points fastify-autoload at the plugins folder in the first call and at the routes folder in the second. The options option specifies the options that will be passed to every autoloaded plugin: Object.assign({}, opts) shallow-copies the options passed to the app.js plugin function into a new, empty object.
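The plugin contract described above (a function taking the instance and options that signals completion either by returning a promise or by calling a callback) can be illustrated with a toy loader. This is a hypothetical sketch: loadPlugins is our own name, it picks the style by the declared parameter count, and Fastify's real loading (built on the avvio library) does considerably more, including encapsulation. It does show that each plugin runs once, at startup, in registration order.

```javascript
'use strict'

// Toy loader (illustration only, not Fastify's implementation)
async function loadPlugins (instance, plugins) {
  for (const [plugin, opts] of plugins) {
    if (plugin.length >= 3) {
      // Callback style: wait until done() (or done(err)) is called
      await new Promise((resolve, reject) => {
        plugin(instance, opts, (err) => (err ? reject(err) : resolve()))
      })
    } else {
      // Promise style: an async function returns a promise
      await plugin(instance, opts)
    }
  }
  return instance
}

const instance = { names: [] }
const asyncPlugin = async function (fastify, opts) {
  fastify.names.push(opts.name)
}
const callbackPlugin = function (fastify, opts, done) {
  // Simulated asynchronous initialization
  setTimeout(() => {
    fastify.names.push(opts.name)
    done()
  }, 10)
}

const ready = loadPlugins(instance, [
  [asyncPlugin, { name: 'async' }],
  [callbackPlugin, { name: 'callback' }],
  [asyncPlugin, { name: 'async-again' }]
])
ready.then((fastify) => console.log(fastify.names)) // [ 'async', 'callback', 'async-again' ]
```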

The package.json added by the npm init fastify command should look something like the following:

```json
{
  "name": "bah",
  "version": "1.0.0",
  "description": "This project was bootstrapped with Fastify-CLI.",
  "main": "app.js",
  "directories": {
    "test": "test"
  },
  "scripts": {
    "test": "tap \"test/**/*.test.js\"",
    "start": "fastify start -l info app.js",
    "dev": "fastify start -w -l info -P app.js"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "fastify": "^3.0.0",
    "fastify-autoload": "^3.10.0",
    "fastify-cli": "^2.15.0",
    "fastify-plugin": "^3.0.0",
    "fastify-sensible": "^3.1.2"
  },
  "devDependencies": {
    "tap": "^15.1.6"
  }
}
```

Creating a Web Server with Fastify (3)

In the scripts field of the package.json we can see a start field and a dev field. In each case the app.js file is booted with the fastify start command. This works because the fastify-cli dependency (which can be seen in the dependencies field of the package.json) provides a CLI named fastify, which is accessible to npm when running package.json scripts. The fastify start command automatically starts the server (performing the same role as the bin/www file in the Express example). Notice that we have no defined port: this is because fastify start defaults to port 3000. It could be configured with the -p flag (lowercase) if desired.

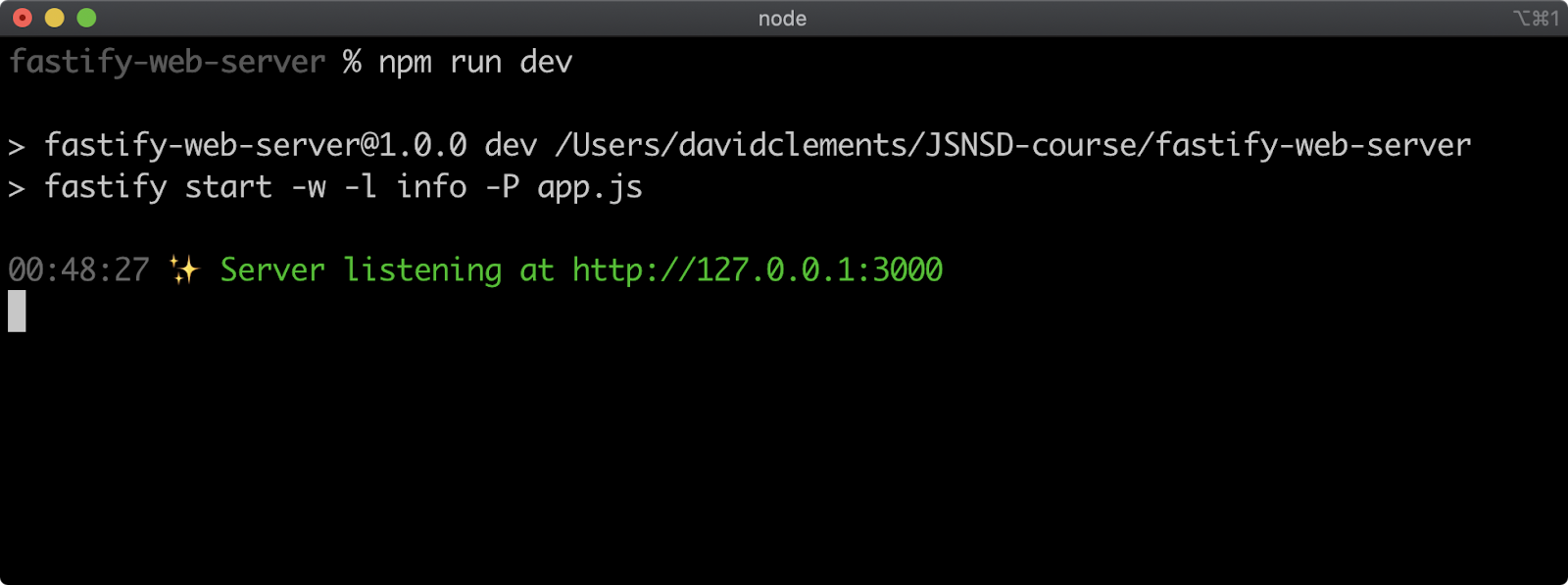

In the dev script there are two additional flags: -w and -P (uppercase). The -P flag means "prettify the log output"; without it, logs are output as newline-delimited JSON. The -w flag means "watch and reload the project as we work on it", so we can go ahead and run the following to start our server:

npm run dev

This should display something like the following:

We don't need to stop and restart the server as we make changes; that happens automatically.

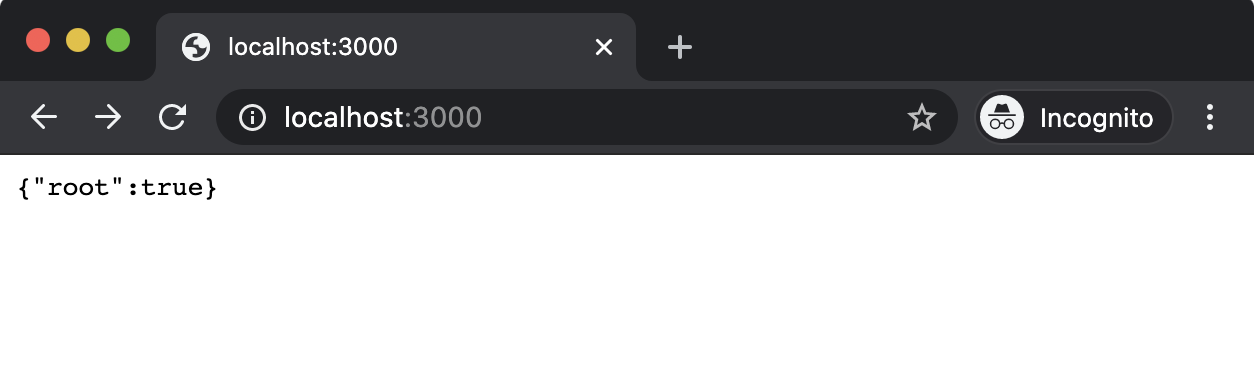

We can check the server is running correctly by navigating to http://localhost:3000 in the browser. We should see something like the following:

In Fastify, everything is a plugin. The distinction between plugins and routes is mostly convention-setting to help us reason about a server or service's functionality. The files in the routes folder are actually plugins (exported functions that return promises or use a next callback). The files in the plugins folder are also plugins, but they are more commonly de-encapsulated plugins, meaning that the functionality that they provide can be accessed by sibling plugins. Think of the plugins folder like a lib folder, but where a strict and enforceable common interface is used for every exported piece of functionality. Use of the plugins folder will be explored more in later sections. The entry point is a plugin. Routes are plugins. Plugins (local libraries) are plugins.

Creating a Web Server with Fastify (4)

A key difference between Express middleware and Fastify plugins is that Express middleware is executed for every request (if reachable) but Fastify plugins are called only at initialization time. Fastify plugins are always asynchronous (either with a callback or a returned promise) to allow for asynchronous initialization of every plugin.

Now, let's focus on the routes folder. We just saw that the root route (/) responds with {"root":true}. Let's take a look at the code in routes/root.js:

'use strict'

module.exports = async function (fastify, opts) {

fastify.get('/', async function (request, reply) {

return { root: true }

})

}

The routes/root.js file exports an async function that accepts the fastify instance and an options argument. In other words, routes/root.js exports a Fastify plugin: a function that receives the server instance (fastify) along with its options.

Within the plugin function, fastify.get is called. This registers an HTTP GET route. The first argument is a string containing a forward slash (/), indicating that the route being registered is the root route (/). All HTTP verbs can be called as methods on the fastify instance (e.g. fastify.post, fastify.put and so on).

The second argument passed to fastify.get is an async function, the route handler, which accepts the request and reply objects. The request and reply objects serve the same purpose as the http and Express req and res objects, but they have a different (and separate) API. To learn more, see the "Fastify: Request" and "Fastify: Reply" documentation.

The fastify.get method can accept a normal synchronous function or an async function. Whatever is returned from the function or async function is automatically processed and sent as the content of the HTTP response.

Alternatively the reply.send method can be used (e.g. reply.send({root: true})), which is similar to the res.send method of Express. This can be useful when working with nested callback APIs.

Since an object is returned, Fastify converts it to a JSON payload before sending it as a response.
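To make the "return value becomes the response" idea concrete, here is a simplified, framework-free sketch of the kind of processing a handler's return value goes through. It is an approximation, not Fastify's code. The exact Content-Type values (application/json and text/plain, each with charset=utf-8) are our assumption about Fastify's defaults and are worth confirming in the Fastify Reply documentation.

```javascript
'use strict'
// Simplified approximation (not Fastify's implementation) of turning a route
// handler's return value into a response payload. Buffers, streams and errors
// are deliberately out of scope here.
function toPayload (value) {
  if (typeof value === 'string') {
    // Strings are sent as-is.
    return { contentType: 'text/plain; charset=utf-8', body: value }
  }
  // Anything else (for example a plain object) is serialized to JSON.
  return { contentType: 'application/json; charset=utf-8', body: JSON.stringify(value) }
}

console.log(toPayload({ root: true }).body)       // → {"root":true}
console.log(toPayload('this is an example').body) // → this is an example
```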

Let's edit the routes/root.js file to the following:

'use strict'

const root = `<html>

<head>

<style>

body { background: #333; margin: 1.25rem }

a { color: yellow; font-size: 2rem; font-family: sans-serif }

</style>

</head>

<body>

<a href='/hello'>Hello</a>

</body>

</html>

`

module.exports = async function (fastify, opts) {

fastify.get('/', async function (request, reply) {

reply.type('text/html')

return root

})

}

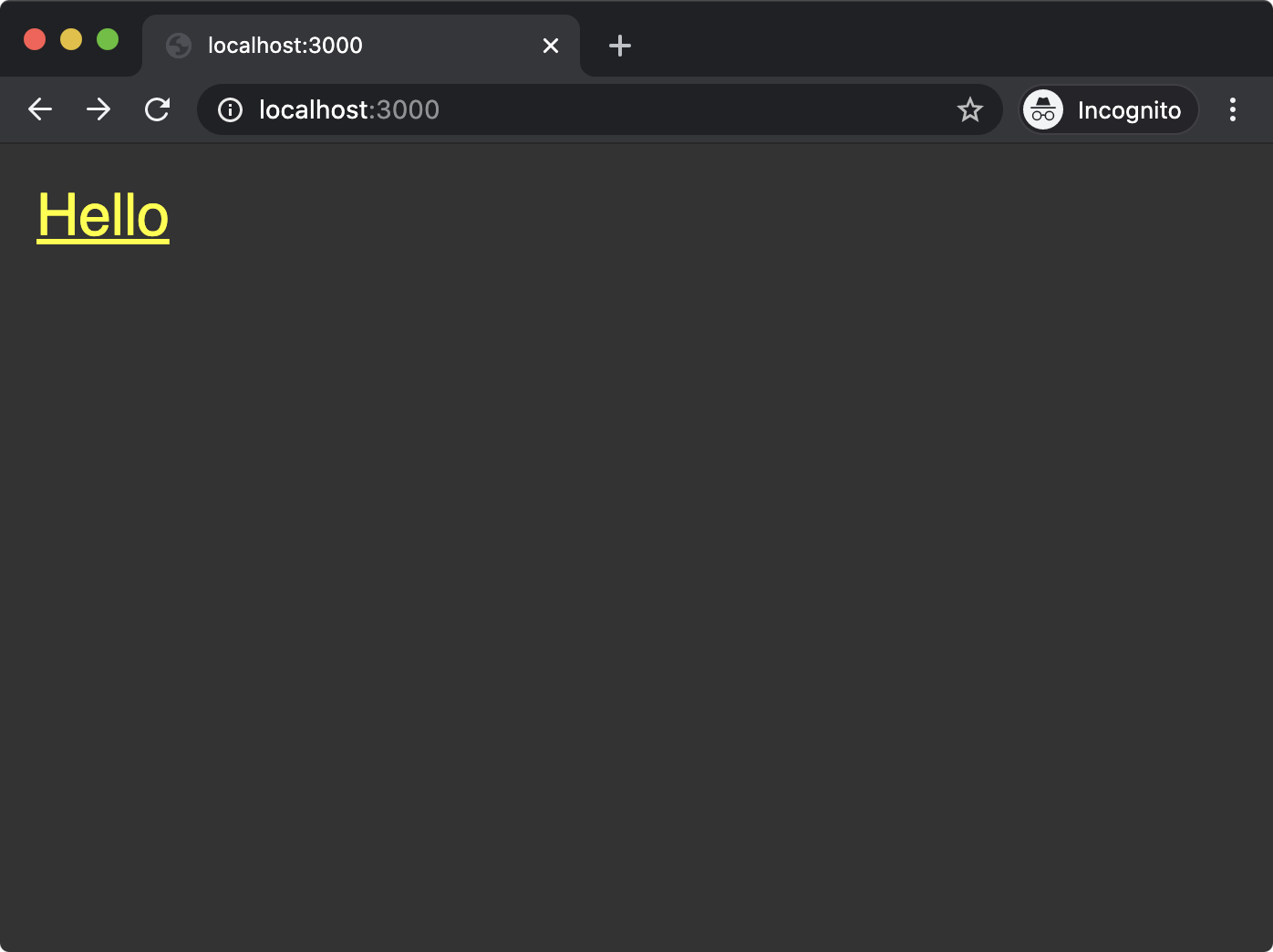

We've added the now familiar root string of HTML as a constant to the routes/root.js file and then we return root from the async function passed to fastify.get instead of returning an object. We've also used the Fastify API method reply.type to set the Content-Type header to text/html.

Creating a Web Server with Fastify (5)

If we now navigate in the browser to http://localhost:3000 we should see the following:

The other defined route is in routes/example/index.js, let's see what that looks like:

'use strict'

module.exports = async function (fastify, opts) {

fastify.get('/', async function (request, reply) {

return 'this is an example'

})

}

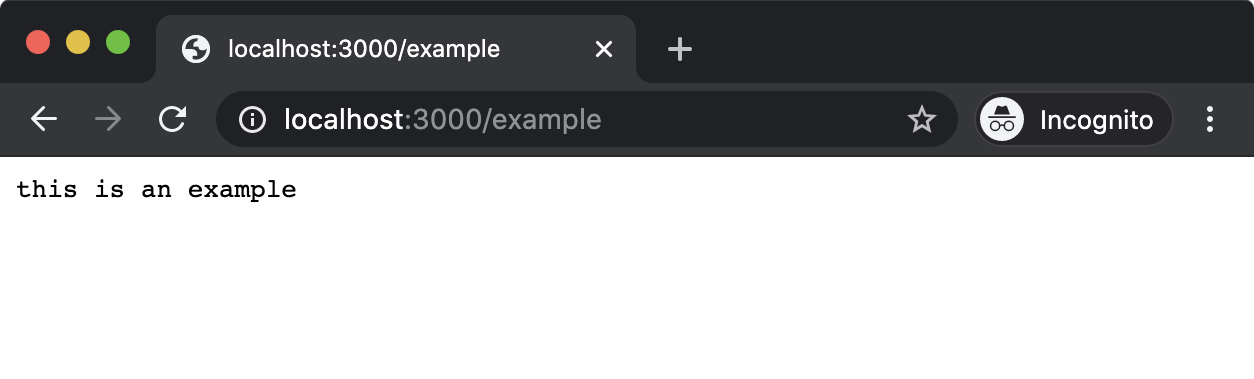

This code is very similar to the original routes/root.js route. Again, we have an exported async function that accepts the server instance (fastify) and options (opts). And again, fastify.get is used to register a route, where the second parameter is an async function that is passed request and reply objects representing the incoming request and the outgoing response. However, notice that the defined route, the first argument passed to fastify.get, is also / (not /example).

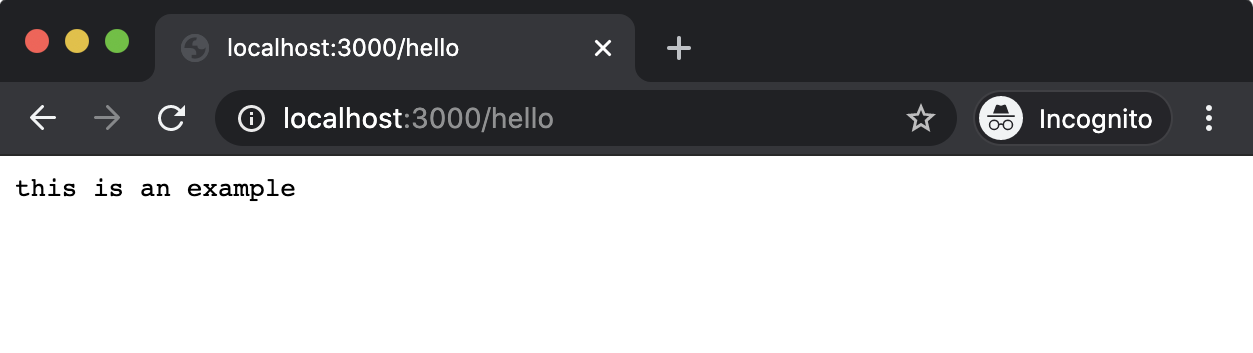

Let's navigate to http://localhost:3000/example in the browser:

When a route is defined in a subfolder, by default the fastify-autoload plugin registers that route prefixed with the name of the subfolder. The example route lives at routes/example/index.js and registers a route at /, so fastify-autoload registers the server route at /example. If the route passed to fastify.get in routes/example/index.js had been /foo, then fastify-autoload would have registered that route at /example/foo.
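The default prefixing rule can be sketched as a small path calculation. The mountedPath function is a hypothetical helper written for illustration only. It is not part of fastify-autoload's API, and the real plugin has configuration options that are not modeled here.

```javascript
'use strict'
const path = require('path')

// Hypothetical helper mimicking fastify-autoload's default behavior: the folder a
// route file lives in (relative to the routes folder) becomes the URL prefix.
function mountedPath (routesDir, file, routePath) {
  // '' for files directly inside routesDir, e.g. 'example' for routes/example/index.js
  const folder = path.relative(routesDir, path.dirname(file))
  const prefix = folder ? '/' + folder.split(path.sep).join('/') : ''
  if (routePath === '/') return prefix || '/'
  return prefix + routePath
}

console.log(mountedPath('routes', 'routes/root.js', '/'))             // → /
console.log(mountedPath('routes', 'routes/example/index.js', '/'))    // → /example
console.log(mountedPath('routes', 'routes/example/index.js', '/foo')) // → /example/foo
```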

Creating a Web Server with Fastify (6)

We need a /hello route, so let's rename the routes/example folder to routes/hello. Leaving the npm run dev command running, use another terminal with the working directory set to our fastify-web-server folder to run the following:

cd routes

node -e "fs.renameSync('example', 'hello')"

cd ..

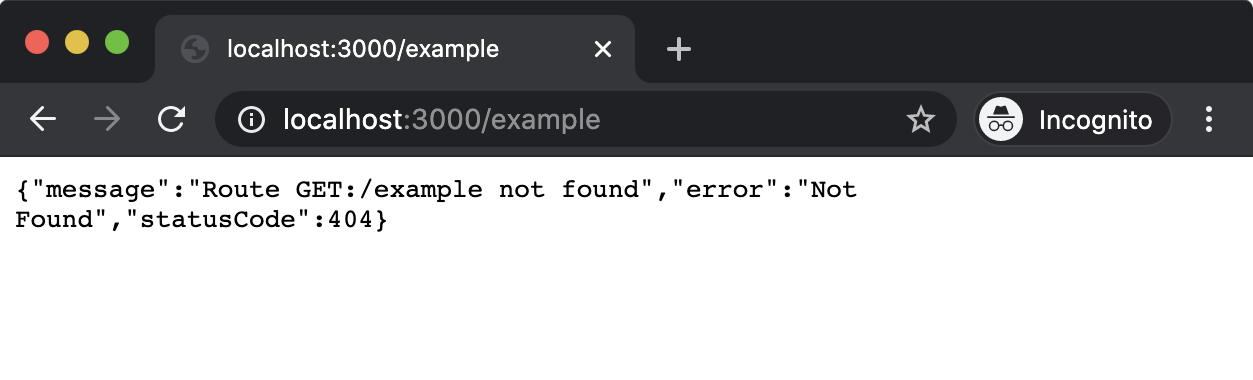

Attempting to load http://localhost:3000/example in the browser will now result in the following:

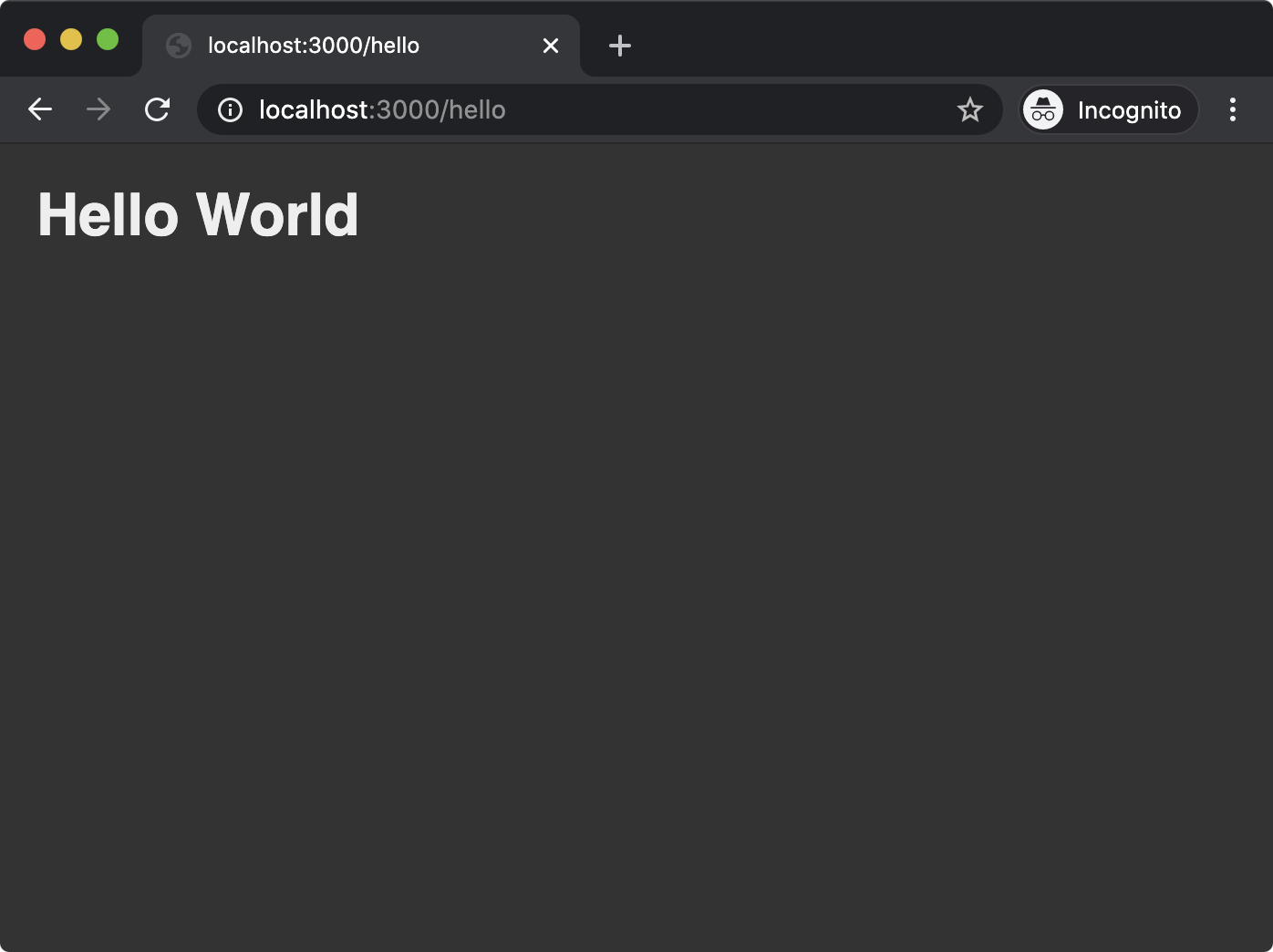

This is the default Fastify 404 handling behavior. We'll modify this later to align with our server implementations, but for now we can see that the /example route no longer exists. However, if we navigate to http://localhost:3000/hello, we should see the following:

Now, let's modify routes/hello/index.js to contain the following code:

'use strict'

const hello = `<html>

<head>

<style>

body { background: #333; margin: 1.25rem }

h1 { color: #EEE; font-family: sans-serif }

</style>

</head>

<body>

<h1>Hello World</h1>

</body>

</html>`

module.exports = async function (fastify, opts) {

fastify.get('/', async function (request, reply) {

reply.type('text/html')

return hello

})

}

Creating a Web Server with Fastify (7)

Again, we see the familiar hello constant, which is returned from the route handler function passed as the second argument to fastify.get. At this point we can see that the code is somewhat repetitive. This is a good thing: Fastify provides a strong declarative structure, allowing us to focus on what we actually want to do instead of how to do it.

If we now navigate to http://localhost:3000/hello we should see the following:

Finally to fully align this implementation with the http and Express implementations we need to modify the Not Found and Method Not Allowed behavior by making our app.js file look as follows (comments have been stripped):

'use strict'

const path = require('path')

const AutoLoad = require('fastify-autoload')

module.exports = async function (fastify, opts) {

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'plugins'),

options: Object.assign({}, opts)

})

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'routes'),

options: Object.assign({}, opts)

})

fastify.setNotFoundHandler((request, reply) => {

if (request.method !== 'GET') {

reply.status(405)

return 'Method Not Allowed\n'

}

return 'Not Found\n'

})

}

The only addition is the fastify.setNotFoundHandler method call. This method accepts a function with the same criteria as the route handler function passed to fastify.get (and fastify.post, fastify.put and so on). In our case, we use a normal function, inspect the HTTP method and if it is not GET we set the HTTP status code to 405 and then return the associated message (Method Not Allowed). Otherwise we return the 404 message (Not Found). Fastify will call this function and use its output in cases where a route cannot be found (which includes routes that haven't been registered with the requested HTTP verb).
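The key detail is that a route is matched on both its path and its HTTP method. The framework-free sketch below (not Fastify's router) shows why a POST to /, which only has a GET route, ends up in the not-found handler and gets a 405. The routes map and the dispatch and notFoundHandler functions are hypothetical.

```javascript
'use strict'
// Minimal sketch (not Fastify's router): routes are looked up by method AND path,
// so a request whose method has no registered route falls through to the
// not-found handler, even if the path exists for another method.
const routes = new Map([
  ['GET /', () => ({ statusCode: 200, body: 'root' })],
  ['GET /hello', () => ({ statusCode: 200, body: 'hello' })]
])

// Same decision as the function passed to fastify.setNotFoundHandler in app.js,
// with the 404 status code set explicitly here for clarity.
function notFoundHandler (method) {
  if (method !== 'GET') return { statusCode: 405, body: 'Method Not Allowed\n' }
  return { statusCode: 404, body: 'Not Found\n' }
}

function dispatch (method, url) {
  const handler = routes.get(`${method} ${url}`)
  return handler ? handler() : notFoundHandler(method)
}

console.log(dispatch('GET', '/hello').statusCode)   // → 200
console.log(dispatch('POST', '/').statusCode)       // → 405
console.log(dispatch('GET', '/missing').statusCode) // → 404
```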

This has been an intense primer on Fastify. In the following chapters we'll be using it more and learning more about it as we go forward.

Lab Exercises

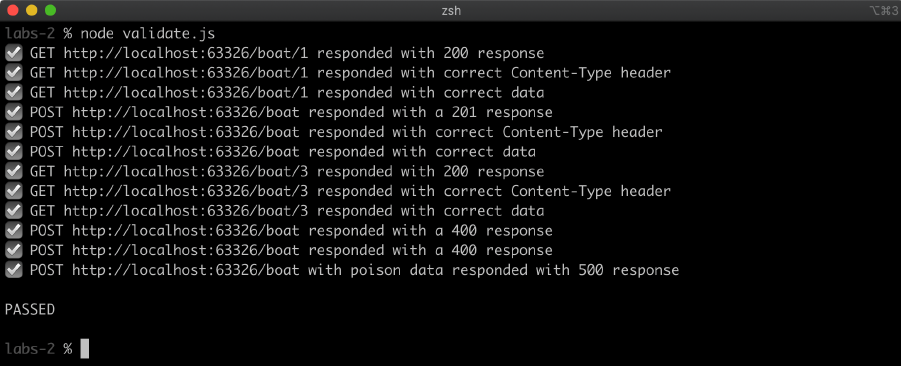

Lab 3.1 - Deliver Data from a Library API

The labs-1 folder contains the following files:

- data.js

- package.json

- validate.js

The data.js file contains the following:

'use strict'

const { promisify } = require('util')

const { randomBytes } = require('crypto')

const timeout = promisify(setTimeout)

async function data() {

await timeout(50)

return randomBytes(10).toString('base64')

}

module.exports = data

The data.js file exports a function that returns a promise (an async function) that resolves to a random BASE64 string. This function represents some kind of asynchronous data source.

The package.json file contains the following:

{

"name": "labs-1",

"scripts": {

"start": "echo \"TODO: SET THE START SCRIPT\" && exit 1"

}

}

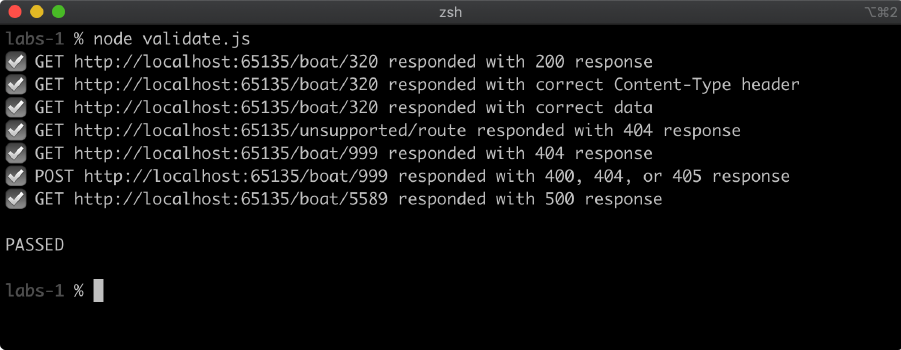

Using any Node core library and/or web framework create an HTTP server that meets the following criteria:

- Listens on localhost

- Listens on port 3000

- Responds to HTTP GET requests to / with data from the data function as exported from data.js

- Responds with a 404 to GET requests to any other route

The package.json start script must contain a command to start the server.
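The lab can be solved with any Node core library or framework. As one possible approach, here is a minimal sketch using only the core http module. It assumes the file is saved as server.js (a hypothetical name), so the package.json start script would be node server.js. To keep the sketch self-contained, the data function from data.js is inlined; in the lab folder you would use require('./data') instead. The plain-text Content-Type and the 500 fallback are our own choices, not lab requirements.

```javascript
'use strict'
const http = require('http')
const { promisify } = require('util')
const { randomBytes } = require('crypto')

// Inlined copy of labs-1/data.js so that this sketch is self-contained.
// In the lab itself, use: const data = require('./data')
const timeout = promisify(setTimeout)

async function data () {
  await timeout(50)
  return randomBytes(10).toString('base64')
}

const server = http.createServer(async (req, res) => {
  // Ignore any query string when matching the route.
  const pathname = req.url.split('?')[0]

  if (req.method === 'GET' && pathname === '/') {
    try {
      const payload = await data()
      res.setHeader('Content-Type', 'text/plain')
      res.end(payload)
    } catch (err) {
      // The data source failed, so report a server error instead of hanging.
      res.statusCode = 500
      res.end('Internal Server Error\n')
    }
    return
  }

  // Every other route gets a 404.
  res.statusCode = 404
  res.end('Not Found\n')
})

// Listen on localhost, port 3000, as the lab requires.
server.listen(3000, 'localhost')
```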

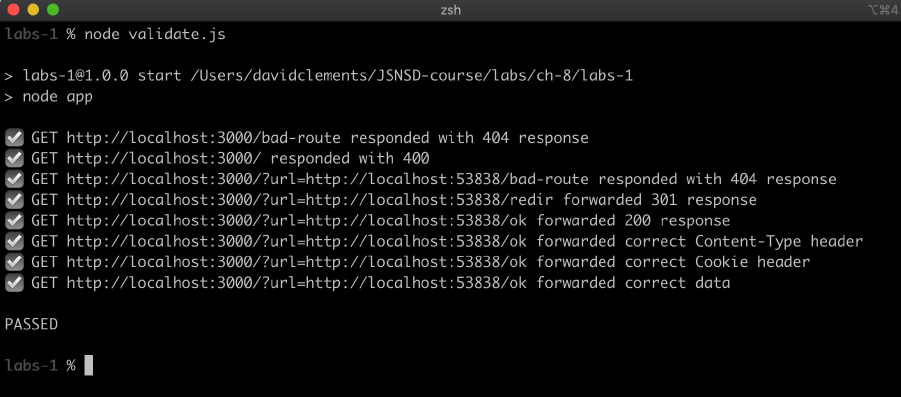

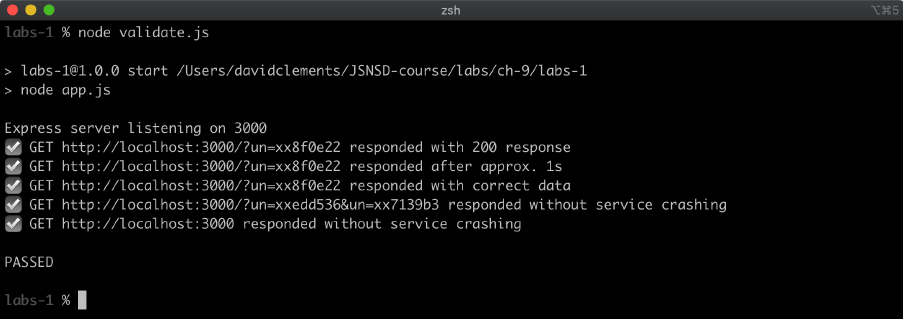

Run the following command to check whether the created server meets the criteria:

node validate

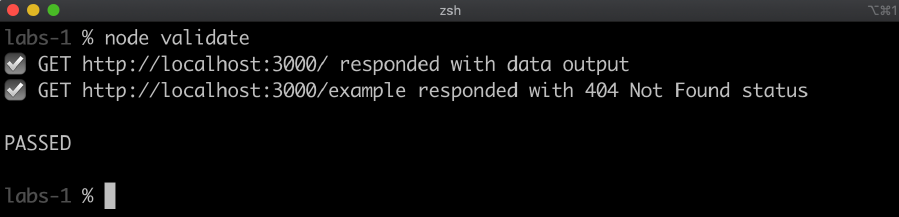

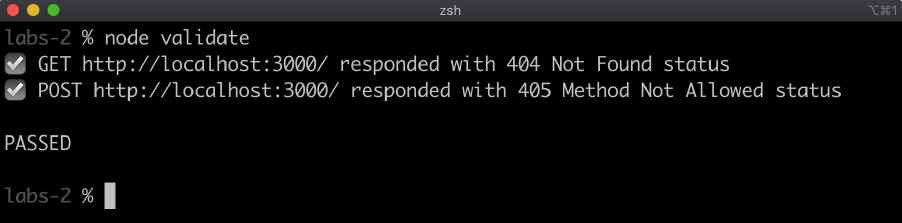

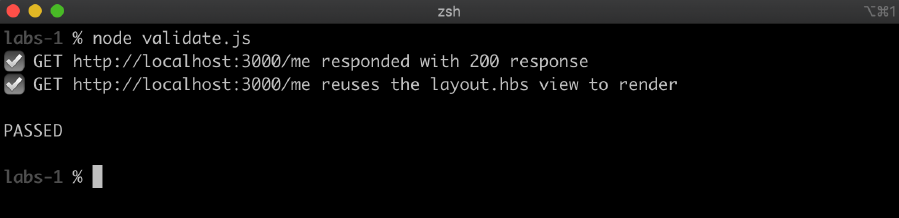

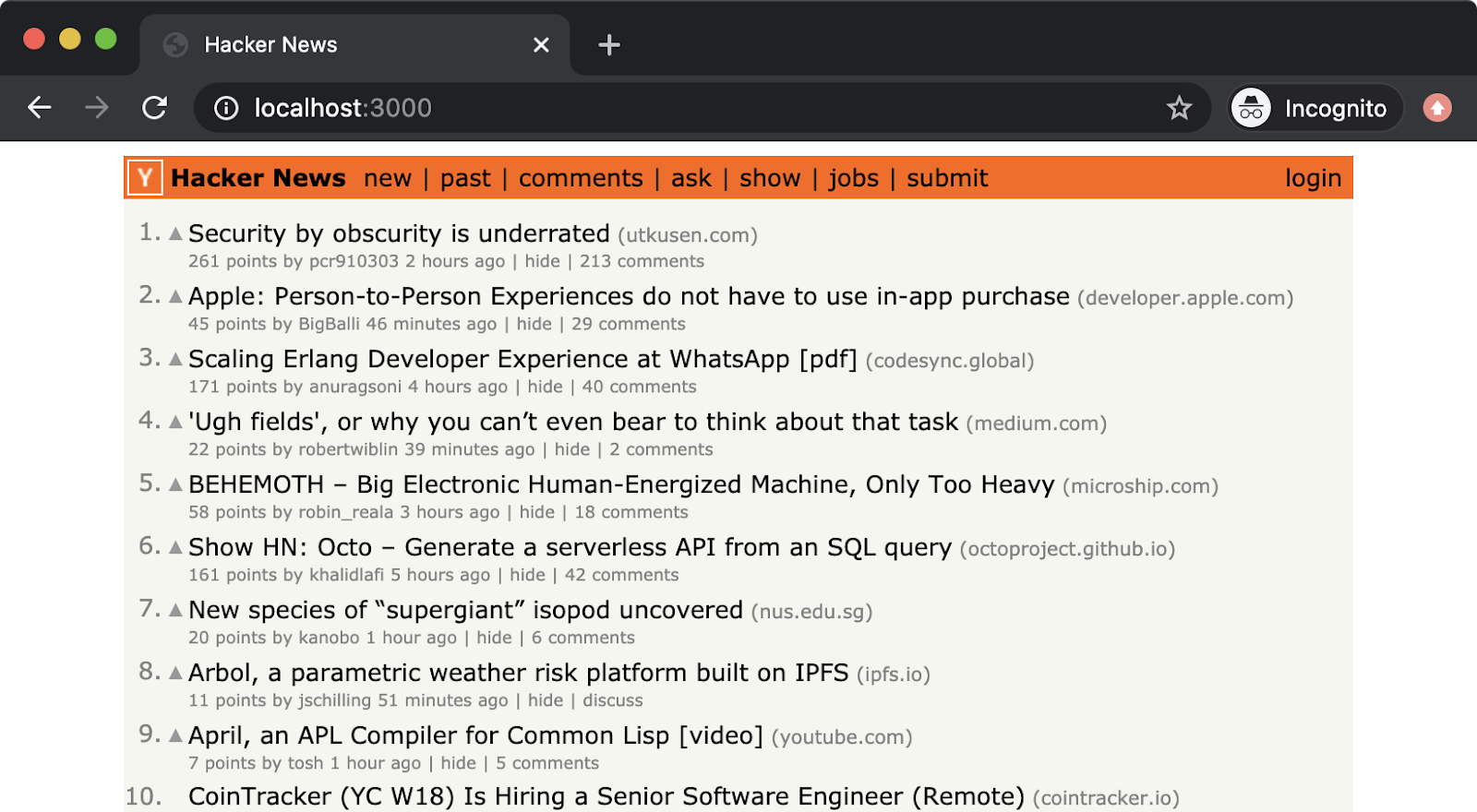

If the server was correctly implemented, the output of this command should be as follows:

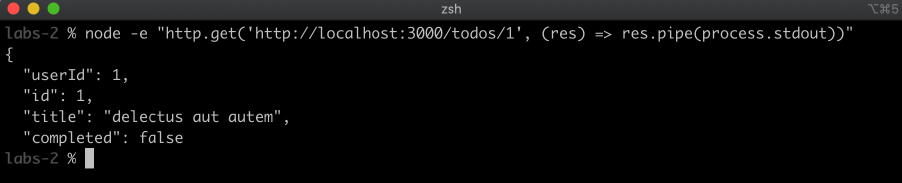

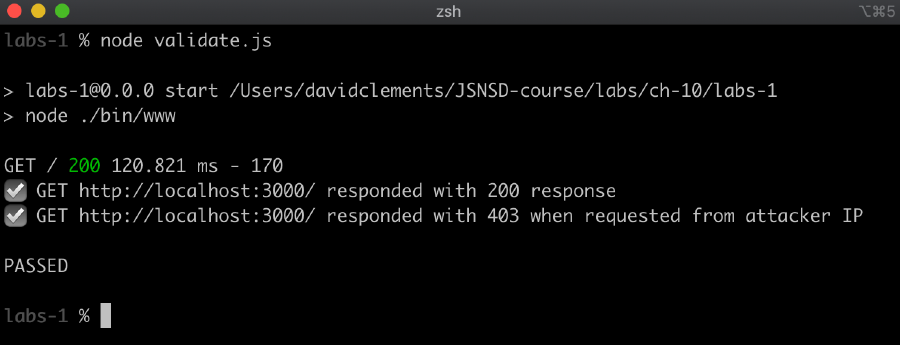

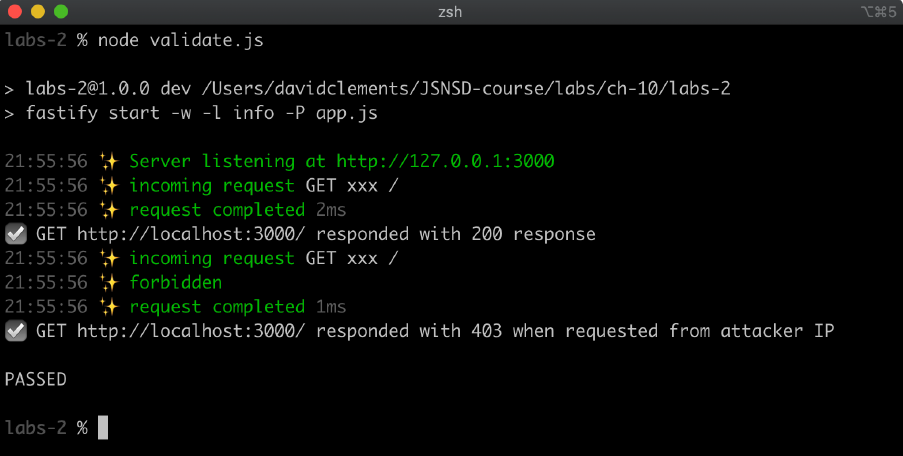

Lab 3.2 - Implement a Status Code Response

The labs-2 folder contains the following files:

- package.json

- validate.js

The package.json file contains the following:

{

"name": "labs-2",

"scripts": {

"start": "echo \"TODO: SET THE START SCRIPT\" && exit 1"

}

}

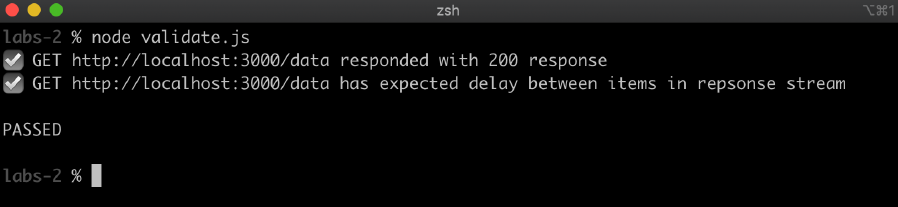

Using any Node core library and/or web framework create an HTTP server that meets the following criteria:

- Listens on localhost

- Listens on port 3000

- Responds to HTTP GET requests to / with a 200 OK HTTP status, the content is irrelevant

- Responds to HTTP POST requests to / with a 405 Method Not Allowed HTTP status

The package.json start script must contain a command to start the server.
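Here is one possible sketch for this lab using only the core http module. It assumes a hypothetical server.js file and a start script of node server.js. Responding with 405 to every non-GET method on / (not just POST), sending an Allow header, and returning 404 for other paths are our own choices beyond what the lab strictly requires.

```javascript
'use strict'
const http = require('http')

const server = http.createServer((req, res) => {
  // Ignore any query string when matching the route.
  const pathname = req.url.split('?')[0]

  if (pathname !== '/') {
    res.statusCode = 404
    res.end('Not Found\n')
    return
  }

  if (req.method !== 'GET') {
    // A 405 response should tell the client which methods are allowed.
    res.statusCode = 405
    res.setHeader('Allow', 'GET')
    res.end('Method Not Allowed\n')
    return
  }

  res.statusCode = 200
  res.end('OK\n') // the lab says the content is irrelevant
})

// Listen on localhost, port 3000, as the lab requires.
server.listen(3000, 'localhost')
```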

Run the following command to check whether the created server meets the criteria:

node validate

If the server was correctly implemented, the output of this command should be as follows:

4 - Serving Web Content

Introduction

Chapter Overview

Generally speaking, static assets (content that does not change very often) should not be served by Node. Static content should be delivered via a CDN and/or a caching reverse proxy that specializes in static content, such as NGINX or Varnish. However, there are cases where serving static content with Node.js is useful. One case would be when working on a Node.js server locally without access to deployment infrastructure. Another is independent development scenarios (as in "indie development"), where investing time, effort and energy into additional infrastructure makes less sense than serving content from a Node.js process. At a stretch, Node.js could serve static content for applications with very small user bases and very low growth potential. In enterprise or scalable-startup scenarios, static content should be handled outside of Node.js. Where Node.js shines, however, is dynamic content. Using Node.js as a mediator that gathers data from multiple sources and renders some output is a natural fit for its evented, non-blocking I/O model. In this chapter, we'll explore serving both static and dynamic content, and we'll also look into streaming content with both the Fastify and Express frameworks.

Learning Objectives

By the end of this chapter, you should be able to:

- Serve static content with Fastify and Express.

- Understand the benefits of streaming and how to use it with Fastify and Express.

- Generate dynamic content with template engines in Fastify and Express.

Serving Web Content

Serving Static Content with Fastify (1)

In the previous chapter, we created a Fastify server in the Creating a Web Server with Fastify section. In this section, we're going to work on the same code base as we left off in the last chapter.

Currently the package.json of our code looks as follows:

{

"name": "bah",

"version": "1.0.0",

"description": "This project was bootstrapped with Fastify-CLI.",

"main": "app.js",

"directories": {

"test": "test"

},

"scripts": {

"test": "tap \"test/**/*.test.js\"",

"start": "fastify start -l info app.js",

"dev": "fastify start -w -l info -P app.js"

},

"keywords": [],

"author": "",

"license": "ISC",

"dependencies": {

"fastify": "^3.0.0",

"fastify-autoload": "^3.10.0",

"fastify-cli": "^2.15.0",

"fastify-plugin": "^3.0.0",

"fastify-sensible": "^3.1.2"

},

"devDependencies": {

"tap": "^15.1.6"

}

}

We need to add a new Fastify plugin that will handle static content for us. Making sure that our current working directory is the fastify-web-server folder we created in the previous chapter, let's run the following command:

npm install --save-dev fastify-static

This will automatically update the devDependencies section of our package.json to look as follows:

"devDependencies": {

"fastify-static": "^4.5.0",

"tap": "^15.1.6"

}

We've deliberately installed fastify-static as a development dependency. It's generally bad practice to use Node.js for static file hosting in production. In most cases we should think of this as a local development convenience only, so we're going to apply constraints to ensure it isn't used in production.

The app.js file currently looks as follows (any comments are removed):

'use strict'

const path = require('path')

const AutoLoad = require('fastify-autoload')

module.exports = async function (fastify, opts) {

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'plugins'),

options: Object.assign({}, opts)

})

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'routes'),

options: Object.assign({}, opts)

})

fastify.setNotFoundHandler((request, reply) => {

if (request.method !== 'GET') {

reply.status(405)

return 'Method Not Allowed\n'

}

return 'Not Found\n'

})

}

We need to register and configure fastify-static but not in production. Let's make our app.js look as follows:

'use strict'

const path = require('path')

const AutoLoad = require('fastify-autoload')

const dev = process.env.NODE_ENV !== 'production'

const fastifyStatic = dev && require('fastify-static')

module.exports = async function (fastify, opts) {

if (dev) {

fastify.register(fastifyStatic, {

root: path.join(__dirname, 'public')

})

}

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'plugins'),

options: Object.assign({}, opts)

})

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'routes'),

options: Object.assign({}, opts)

})

fastify.setNotFoundHandler((request, reply) => {

if (request.method !== 'GET') {

reply.status(405)

return 'Method Not Allowed\n'

}

return 'Not Found\n'

})

}

Serving Static Content with Fastify (2)

It is a typical convention when deploying an application to set an environment variable called NODE_ENV to 'production'. This would be managed by deployment infrastructure and is outside of scope here, other than to acknowledge that it's the convention most often used to determine whether a Node.js process is running in development mode or is deployed to production (or staging). By checking that NODE_ENV is not set to production we assume development mode, which makes our dev constant true.

We conditionally load fastify-static into the process if dev is true. Since fastify-static is a development dependency, if we didn't do this the server would throw on initialization due to an attempt to load a missing dependency.
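The dev && require('fastify-static') guard works because of short-circuit evaluation: when dev is false, require() is never called, so the missing devDependency is never looked up. Here is a minimal sketch. The env parameter stands in for process.env.NODE_ENV so that both cases can be exercised, and some-missing-dev-dependency is a hypothetical, uninstalled package name.

```javascript
'use strict'
// Same pattern as app.js: require() is only evaluated when dev is true.
function loadDevOnly (moduleName, env) {
  const dev = env !== 'production'
  return dev && require(moduleName)
}

// In production the require() call is skipped entirely, so nothing throws.
console.log(loadDevOnly('some-missing-dev-dependency', 'production')) // → false

// In development the missing module is actually looked up, and fails.
try {
  loadDevOnly('some-missing-dev-dependency', 'development')
} catch (err) {
  console.log(err.code) // → MODULE_NOT_FOUND
}
```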

Within the root plugin (the exported async function of app.js), we also conditionally register fastify-static with the fastify.register method. The first argument passed to fastify.register is the fastify-static plugin (fastifyStatic). The second argument is the options for the plugin. We set the root option to point to a folder named public in our project dir. This instructs fastify-static to only serve files from that folder, and not allow any files above that folder to be accessible.
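Conceptually, restricting files to the root folder means mapping the URL path onto that folder and refusing anything that resolves outside it (for example, a request for /../package.json). The sketch below illustrates that idea with Node's path module. It is not how fastify-static is implemented internally, and resolveInsideRoot is a hypothetical helper name.

```javascript
'use strict'
const path = require('path')

// Illustration of the idea behind the root option (not fastify-static's code):
// map a URL path onto the root folder and refuse anything that escapes it.
function resolveInsideRoot (root, urlPath) {
  const absRoot = path.resolve(root)
  let decoded
  try {
    decoded = decodeURIComponent(urlPath) // so %2e%2e is treated like ..
  } catch (err) {
    return null // malformed percent-encoding
  }
  if (decoded.includes('\0')) return null // null bytes are never valid in file paths
  const candidate = path.join(absRoot, decoded) // join normalizes any .. segments
  // Require the separator so a sibling folder such as public-evil doesn't match.
  if (candidate !== absRoot && !candidate.startsWith(absRoot + path.sep)) return null
  return candidate
}

console.log(resolveInsideRoot('public', '/hello.html'))      // absolute path ending in public/hello.html
console.log(resolveInsideRoot('public', '/../package.json')) // → null
console.log(resolveInsideRoot('public', '/%2e%2e/app.js'))   // → null
```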

We'll need to create this public folder next. Making sure that fastify-web-server is our current working directory, let's run the following in the terminal:

node -e "fs.mkdirSync('public')"

cd public

node -e "fs.openSync('index.html', 'w')"

node -e "fs.openSync('hello.html', 'w')"

cd ..

We'll also be replacing our routes with static HTML, so let's delete routes/root.js and the routes/hello folder:

cd routes

node -e "fs.unlinkSync('root.js')"

node -e "fs.rmSync('hello', {recursive: true})"

cd ..

The project file and folder structure should now be as follows:

- .gitignore

- app.js

- public/index.html

- public/hello.html

- routes/README.md

- plugins/README.md

- plugins/support.js

- test/helper.js

- test/routes/example.test.js

- test/plugins/support.test.js

- test/routes/root.test.js

Our final step is to add the contents of the index.html and hello.html files in the public folder.

The index.html file should contain the following content:

<html>

<head>

<style>

body { background: #333; margin: 1.25rem }

a { color: yellow; font-size: 2rem; font-family: sans-serif }

</style>

</head>

<body>

<a href='/hello.html'>Hello</a>

</body>

</html>

Note that the contents of index.html differ from the string of HTML in our root route from the previous section in one key place: the anchor link (<a/>) points to /hello.html instead of /hello.

The hello.html file should contain the following content:

<html>

<head>

<style>

body { background: #333; margin: 1.25rem }

h1 { color: #EEE; font-family: sans-serif }

</style>

</head>

<body>

<h1>Hello World</h1>

</body>

</html>

Now we can start our server:

npm run dev

Serving Static Content with Fastify (3)

If we navigate in a browser to http://localhost:3000 we should see something like the following:

This root route delivers the same HTML as in the previous chapter; the difference is that fastify-static is loading public/index.html instead of us defining a route and manually sending the content. If we navigate to http://localhost:3000/index.html we'll get the same outcome, because the root route (/) is special-cased to load an index.html file.

If we click the link we'll navigate to http://localhost:3000/hello.html, which should result in something similar to the following:

The fastify-static module also decorates the reply object with a sendFile method. We can use this to create a route that manually responds with the contents of hello.html if we want to alias /hello.html to /hello.

Let's finish this section off by doing just that. We need to recreate the hello folder in the routes directory with an index.js file. Let's run the following commands to create the desired structure:

cd routes

node -e "fs.mkdirSync('hello')"

cd hello

node -e "fs.openSync('index.js', 'w')"

cd ..

cd ..

Let's write the following code into routes/hello/index.js:

'use strict'

module.exports = async (fastify, opts) => {

fastify.get('/', async (request, reply) => {

return reply.sendFile('hello.html')

})

}

Adding routes/hello/index.js automatically causes fastify-autoload to mount any routes registered in that file at the /hello URL path. We register a GET / route (which is therefore the /hello route since the route path is set by the folder path) and we call reply.sendFile('hello.html'). This causes fastify-static to respond to the request with contents of the public/hello.html file. The sendFile method knows to load the hello.html file from the public folder because we configure the root option passed to fastifyStatic in app.js to point to the public folder. If we restart the server (npm run dev) and navigate to http://localhost:3000/hello, we should now see the same page as http://localhost:3000/hello.html.

Using Templates with Fastify (1)

While the primary and original focus of Fastify was building data services, view rendering capability is available with a little bit of setup.

Building off our example Fastify server in the prior section, let's add template rendering for dynamic content generation.

In the terminal, with fastify-web-server as the current working directory let's run the following command in order to install a template engine and Fastify's view rendering plugin:

npm install point-of-view handlebars

Handlebars is one of the template engines that point-of-view supports. See more about Handlebars at http://handlebarsjs.com.

To set up and configure view rendering, we need to modify our app.js file to look as follows:

'use strict'

const path = require('path')

const AutoLoad = require('fastify-autoload')

const pointOfView = require('point-of-view')

const handlebars = require('handlebars')

module.exports = async function (fastify, opts) {

fastify.register(pointOfView, {

engine: { handlebars },

root: path.join(__dirname, 'views'),

layout: 'layout.hbs'

})

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'plugins'),

options: Object.assign({}, opts)

})

fastify.register(AutoLoad, {

dir: path.join(__dirname, 'routes'),

options: Object.assign({}, opts)

})

fastify.setNotFoundHandler((request, reply) => {

if (request.method !== 'GET') {

reply.status(405)

return 'Method Not Allowed\n'

}

return 'Not Found\n'

})

}

We've removed fastify-static which we introduced in the prior section, and with it the dev constant which we won't need for this case because our server will now be performing on-the-fly dynamic rendering.

We loaded the two modules that we installed, and then at the top of the exported async function we use fastify.register to register point-of-view (referenced as pointOfView). In the options object passed to fastify.register, we set the engine to handlebars. Note that the engine option expects an object whose key is the name of the engine and whose value is the engine library itself. We used a shorthand property, { handlebars }, which creates an object with the shape { handlebars: handlebars }. We set the root option to path.join(__dirname, 'views'); we'll be creating a views folder in the project folder shortly. We've also set the layout option to layout.hbs; we're going to create this layout template in the views folder as well.

Let's create a views folder by running the following command:

node -e "fs.mkdirSync('views')"

We'll also create three files in the views folder: index.hbs, hello.hbs, and layout.hbs:

cd views

node -e "fs.openSync('index.hbs', 'w')"

node -e "fs.openSync('hello.hbs', 'w')"

node -e "fs.openSync('layout.hbs', 'w')"

cd ..

We'll also delete the public folder since we won't be using that any more:

node -e "fs.rmSync('public', {recursive: true})"

The views/layout.hbs file should contain the following:

<html>

<head>

<style>

body { background: #333; margin: 1.25rem }

h1 { color: #EEE; font-family: sans-serif }

a { color: yellow; font-size: 2rem; font-family: sans-serif }

</style>

</head>

<body>

{{{ body }}}

</body>

</html>

Using Templates with Fastify (2)

We've mixed together the styles from the index.html and hello.html files we created in the previous section, and we interpolate a special template local called body between the <body> opening and closing tags. Using three braces to denote an interpolation point is Handlebars syntax that instructs the template engine to conduct raw interpolation. In other words, if the body template local contains HTML syntax, the content will not be escaped, whereas using two braces would cause HTML syntax to be escaped (for instance, < would be escaped to &lt;). Raw interpolation should never be used for unsanitized user input, but when building a layout we need to inject raw HTML. The body local is created automatically by point-of-view when rendering a view because we specified the layout option.
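To see what raw versus escaped interpolation means, and how a layout receives the rendered view as body, here is a toy renderer. It is not Handlebars: it only supports {{ name }} and {{{ name }}} tags, and its escaping table is a small subset of what Handlebars escapes. The names render, renderWithLayout and escapeHtml are hypothetical.

```javascript
'use strict'
// Toy template renderer (NOT Handlebars) contrasting {{ name }} (HTML-escaped)
// with {{{ name }}} (raw) interpolation.
const ESCAPES = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' }

function escapeHtml (value) {
  return String(value).replace(/[&<>"']/g, (ch) => ESCAPES[ch])
}

// Group 1 matches {{{ raw }}}, group 2 matches {{ escaped }}.
const TAG = /\{\{\{\s*(\w+)\s*\}\}\}|\{\{\s*(\w+)\s*\}\}/g

function render (template, locals) {
  // A single pass: replaced text is never re-scanned, so values can't inject tags.
  return template.replace(TAG, (_, rawKey, escKey) =>
    rawKey !== undefined
      ? String(locals[rawKey] ?? '')
      : escapeHtml(locals[escKey] ?? ''))
}

function renderWithLayout (layout, view, locals) {
  // Render the view first, then inject it unescaped into the layout as body.
  return render(layout, { ...locals, body: render(view, locals) })
}

const layout = '<body>{{{ body }}}</body>'
const view = '<h1>{{ greeting }} World</h1>'

console.log(renderWithLayout(layout, view, { greeting: 'Ahoy' }))
// → <body><h1>Ahoy World</h1></body>
console.log(renderWithLayout(layout, view, { greeting: '<script>' }))
// → <body><h1>&lt;script&gt; World</h1></body>
```

The rendered view contains HTML, so it has to go through the raw tag; the user-supplied greeting goes through the escaping tag.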

In views/index.hbs we'll add the following content:

<a href='/hello'>Hello</a><br>

<a href='/hello?greeting=Ahoy'>Ahoy</a>

The views/hello.hbs file should contain the following:

<h1>{{ greeting }} World</h1>

Finally, we need to set up our routes to render our views. Let's recreate the routes/root.js that we removed in the last section:

cd routes

node -e "fs.openSync('root.js', 'w')"

cd ..

The routes/root.js file should contain the following:

'use strict'

module.exports = async (fastify, opts) => {

fastify.get('/', async (request, reply) => {

return reply.view('index.hbs')

})

}

The point-of-view plugin that we registered in app.js decorates the reply instance with a view method. When we registered point-of-view, we set the root option to the views folder. Therefore, when we pass 'index.hbs' to reply.view, it knows to look for index.hbs in the views folder. Similarly, the layout option that we set to 'layout.hbs' indicates to point-of-view that the layout template can be found in views/layout.hbs. So when we use reply.view here, point-of-view first renders views/index.hbs, then interpolates the rendered output into views/layout.hbs (as the body local), and sends the combined result as the response. The return value of the reply.view method must be returned from the async function passed as the route handler so that Fastify knows when the route handler has finished processing the request.

For our hello pages, the routes/hello/index.js file should be updated to contain the following:

'use strict'

module.exports = async (fastify, opts) => {

fastify.get('/', async (request, reply) => {

const { greeting = 'Hello' } = request.query

return reply.view('hello.hbs', { greeting })

})

}

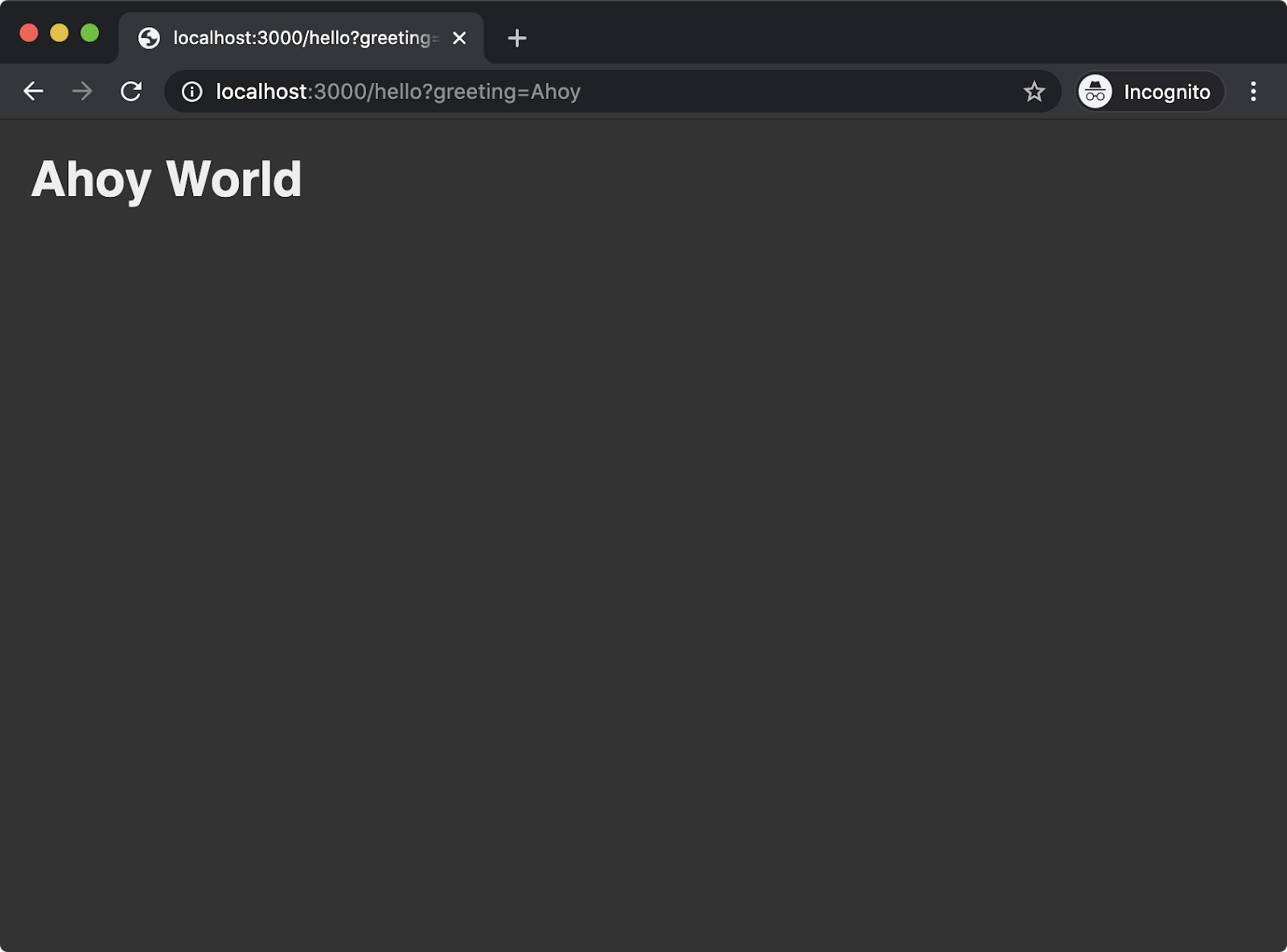

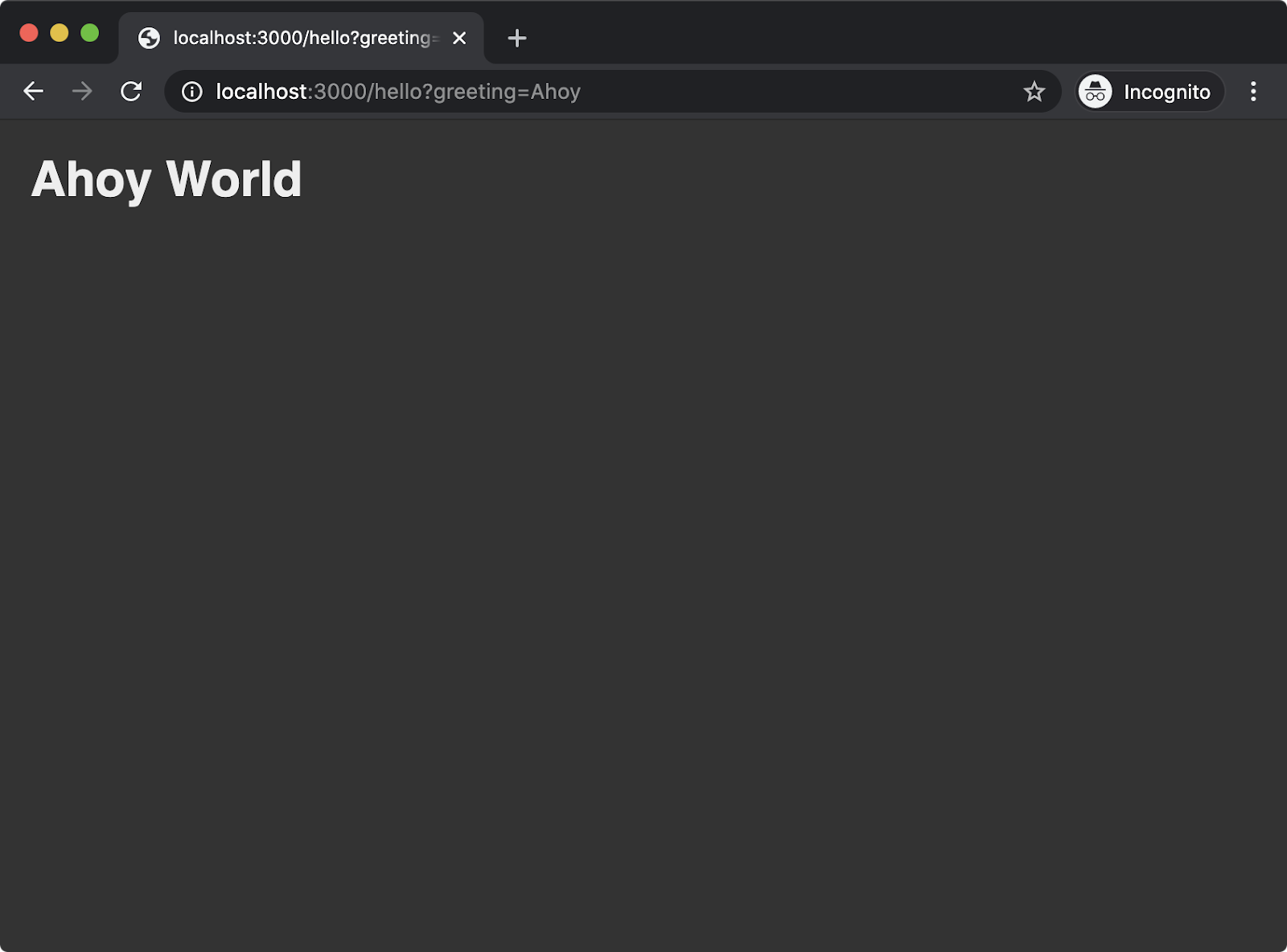

The reply.view method can take a second parameter: an object that sets the values of the template locals. Recall that views/hello.hbs contains a greeting template local, so we pass an object with a greeting property. Its value is either the value of a URL query string key named greeting or, if that key is absent, the default 'Hello'. For instance, a request to /hello?greeting=Ahoy would result in the greeting constant being set to 'Ahoy' for that request, so the object passed as the second argument to reply.view would contain a greeting property with a value of 'Ahoy'. This in turn would make reply.view render views/hello.hbs with the text Ahoy World instead of Hello World.
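The destructuring default in routes/hello/index.js only applies when the greeting key is missing (undefined), not when it is present but empty. The sketch below uses URLSearchParams as a stand-in for Fastify's own query-string parsing. That is an assumption: repeated keys, for example, may be parsed differently. The greetingFrom function is a hypothetical helper.

```javascript
'use strict'
// Same destructuring default as the route handler, applied to a parsed query string.
function greetingFrom (queryString) {
  const query = Object.fromEntries(new URLSearchParams(queryString))
  const { greeting = 'Hello' } = query
  return greeting
}

console.log(greetingFrom(''))                          // → Hello
console.log(greetingFrom('greeting=Ahoy'))             // → Ahoy
console.log(JSON.stringify(greetingFrom('greeting='))) // → "" (default only applies when undefined)
```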

For the purposes of understanding, we're using a query string key-value and sending it back to the client as content. As discussed, the template engine will automatically escape the input (because we interpolate greeting with just two braces); however, always exercise caution when handling user input. If there's another way to achieve a goal without directly reflecting content back to the client, that is a more secure approach. See "Cross Site Scripting (XSS)" by OWASP for more information.

Using Templates with Fastify (3)

Let's try it out. If everything went according to plan we should be able to run the following to successfully start the server:

npm run dev

Once the server has started, we should be able to navigate to http://localhost:3000 and see something similar to the following:

Clicking the Hello link should take us to the following screen:

Going back to http://localhost:3000 and clicking the Ahoy link should display the following:

We've now served dynamic content with Fastify. For more details on the point-of-view plugin see https://github.com/fastify/point-of-view.

Serving Static Content and Using Templates with Express (1)

The original focus of the Fastify framework was on building RESTful JSON services, whereas Express is more geared towards template rendering (and serving static content). Therefore, Express has these pieces built into its core, whereas in Fastify template rendering is an add-on.

In this section we'll use the express-generator command-line utility to generate a new project with view rendering and static asset serving preconfigured.

Let's install express-generator:

npm install -g express-generator@4

This will install a globally available command-line executable named express. We can use this executable to generate an express project.

Let's run the following command:

express --hbs express-web-server

This will generate a folder named express-web-server with an Express project that is set up to render Handlebars templates from the views folder.

Let's change our working directory to express-web-server and install the project dependencies:

cd express-web-server

npm install

The express-generator generated the following files and folders:

- app.js

- package.json

- routes/index.js

- routes/users.js

- public/images

- public/javascripts

- public/stylesheets/style.css

- views/error.hbs

- views/index.hbs

- views/layout.hbs

Let's take a look at the top 20 lines of the app.js file:

var createError = require('http-errors');

var express = require('express');

var path = require('path');

var cookieParser = require('cookie-parser');

var logger = require('morgan');

var indexRouter = require('./routes/index');

var usersRouter = require('./routes/users');

var app = express();

// view engine setup

app.set('views', path.join(__dirname, 'views'));

app.set('view engine', 'hbs');

app.use(logger('dev'));

app.use(express.json());

app.use(express.urlencoded({ extended: false }));

app.use(cookieParser());

app.use(express.static(path.join(__dirname, 'public')));

On the last line of this snippet from app.js, we can see that express.static is called and passed the directory path to the public folder. The result is immediately passed to app.use. In other words, the express module exposes a function named static that returns Express middleware, which serves requests that match any file in the public folder. Notably, this serves files in both development and production environments, which is recommended against. To reinforce this point, let's alter the last line in our snippet from app.js to the following:

if (process.env.NODE_ENV !== 'production') {

app.use(express.static(path.join(__dirname, 'public')));

}

Now static hosting only occurs in development; in production, static hosting is left as a deployment infrastructure problem.

Around the middle of our code snippet from app.js we can see the view engine configuration:

// view engine setup

app.set('views', path.join(__dirname, 'views'));

app.set('view engine', 'hbs');

In Express the app.set method can be used to store state as key-values. In this specific case the 'views' key and the 'view engine' key are both special-cased key names that instruct Express to load views from a particular path and use a particular view engine respectively.

We already have a views/index.hbs and a views/layout.hbs, we just need to create a views/hello.hbs file. Let's create it now:

cd views

node -e "fs.openSync('hello.hbs', 'w')"

cd ..

Serving Static Content and Using Templates with Express (2)

Let's alter the views/layout.hbs file to contain the following:

<html>

<head>

<style>

body { background: #333; margin: 1.25rem }

h1 { color: #EEE; font-family: sans-serif }

a { color: yellow; font-size: 2rem; font-family: sans-serif }

</style>

</head>

<body>

{{{ body }}}

</body>

</html>

The views/index.hbs should be altered to contain the following code:

<a href='/hello'>Hello</a><br>

<a href='/hello?greeting=Ahoy'>Ahoy</a>

The views/hello.hbs file should contain the following:

<h1>{{ greeting }} World</h1>
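The layout uses triple braces ({{{ body }}}) while hello.hbs uses double braces ({{ greeting }}). In Handlebars, double braces HTML-escape the value and triple braces insert it unescaped, which matters here because greeting will come straight from the query string. The following toy renderer is written for illustration only and is not how Handlebars works internally; renderToy, renderWithLayout and escapeHtml are hypothetical names. It shows both placeholder styles and how a rendered view fills the layout's body slot.

```javascript
// Toy renderer for illustration only; it is NOT Handlebars.
const ESCAPES = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#x27;' };

function escapeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => ESCAPES[ch]);
}

// Matches {{{ name }}} (captured in group 1) or {{ name }} (group 2).
const PLACEHOLDER = /\{\{\{\s*(\w+)\s*\}\}\}|\{\{\s*(\w+)\s*\}\}/g;

function renderToy(template, locals) {
  // A single pass, so inserted values are never scanned for placeholders again.
  return template.replace(PLACEHOLDER, (match, rawKey, escapedKey) =>
    rawKey !== undefined
      ? String(locals[rawKey] ?? '') // triple braces: inserted as-is
      : escapeHtml(locals[escapedKey] ?? '') // double braces: HTML-escaped
  );
}

// Render the view first, then place its HTML into the layout's {{{ body }}} slot.
function renderWithLayout(layout, view, locals) {
  return renderToy(layout, { ...locals, body: renderToy(view, locals) });
}

console.log(renderWithLayout('<body>{{{ body }}}</body>', '<h1>{{ greeting }} World</h1>', { greeting: '<b>Ahoy</b>' }));
// <body><h1>&lt;b&gt;Ahoy&lt;/b&gt; World</h1></body>
```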

Finally, we need to configure our routes. Let's rename routes/users.js to routes/hello.js:

cd routes

node -e "fs.renameSync('users.js', 'hello.js')"

cd ..

The routes/index.js file should have the following content:

var express = require('express');

var router = express.Router();

/* GET home page. */

router.get('/', function(req, res, next) {

res.render('index');

});

module.exports = router;

This is exactly as it was when generated by express-generator, except we've removed the template locals object ({ title: 'Express' }) that was passed as the second argument to the res.render invocation. Express has res.render built into its core, and it works in essentially the same way as the reply.view method that the point-of-view plugin adds when registered in a Fastify server, although at the time of writing Express v4 renders at about half the speed of Fastify's point-of-view in production.

The routes/hello.js file should have the following content:

var express = require('express');

var router = express.Router();

router.get('/', function(req, res, next) {

var greeting = 'greeting' in req.query ?

req.query.greeting :

'Hello';

res.render('hello', { greeting: greeting });

});

module.exports = router;
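The handler uses the in operator rather than something like req.query.greeting || 'Hello', so a greeting that is present but empty (/hello?greeting=) is kept as an empty string instead of falling back to 'Hello'. Below is a sketch of the same decision, using Node's built-in WHATWG URL API in place of Express's req.query; pickGreeting is a hypothetical name. One difference to keep in mind: URLSearchParams.get returns the first value of a repeated key, whereas Express's req.query can produce an array for /hello?greeting=a&greeting=b.

```javascript
// Hypothetical helper mirroring the greeting logic in routes/hello.js.
function pickGreeting(requestUrl) {
  // A base URL is needed because request URLs like '/hello?x=1' are relative.
  const query = new URL(requestUrl, 'http://localhost').searchParams;
  // Like `'greeting' in req.query`: only an absent key falls back to 'Hello'.
  return query.has('greeting') ? query.get('greeting') : 'Hello';
}

console.log(pickGreeting('/hello')); // Hello
console.log(pickGreeting('/hello?greeting=Ahoy')); // Ahoy
console.log(JSON.stringify(pickGreeting('/hello?greeting='))); // ""
```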

Before we can start the server we now need to go back to app.js and configure the application to use routes/hello.js and to stop using routes/users.js which no longer exists.

Underneath the registration of express.static middleware we can find the routing setup:

app.use('/', indexRouter);

app.use('/users', usersRouter);

Let's change the /users mount point to /hello and rename usersRouter to helloRouter. Those two lines should look as follows:

app.use('/', indexRouter);

app.use('/hello', helloRouter);
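Because the hello router is mounted at /hello, its router.get('/') handler answers requests for /hello itself: Express strips the mount path before the router matches its own routes. The following simplified sketch of that prefix stripping uses a hypothetical pathSeenByRouter function; Express's real matching handles more cases than this.

```javascript
// Hypothetical sketch: which path a router mounted at `mountPath` sees
// for an incoming request pathname (query strings omitted for brevity).
function pathSeenByRouter(mountPath, pathname) {
  // A router mounted at '/' sees every path unchanged.
  if (mountPath === '/') return pathname;
  // Requesting the mount path itself reaches the router's '/' route.
  if (pathname === mountPath) return '/';
  // Deeper paths keep whatever follows the mount prefix.
  if (pathname.startsWith(mountPath + '/')) return pathname.slice(mountPath.length);
  // Anything else (including '/helloworld') is not handled by this router.
  return null;
}

console.log(pathSeenByRouter('/hello', '/hello')); // /
console.log(pathSeenByRouter('/hello', '/hello/there')); // /there
console.log(pathSeenByRouter('/hello', '/helloworld')); // null
```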

Serving Static Content and Using Templates with Express (3)

At the top of app.js the last two require statements are:

var indexRouter = require('./routes/index');

var usersRouter = require('./routes/users');

We also need to update that to load routes/hello.js and update the variable name. Those two lines should look as follows:

var indexRouter = require('./routes/index');

var helloRouter = require('./routes/hello');

If everything was completed correctly, we should now be able to start our Express server with the following command:

npm start

This will start the process and keep it open. Navigating to http://localhost:3000 in the browser should show the following:

Clicking the Hello link should take us to the following screen:

Going back to http://localhost:3000 and clicking the Ahoy link should display the following:

We've now achieved development-only static hosting and served dynamic content with Express.

Streaming Content with Fastify (1)

The HTTP specification has a header called Transfer-Encoding which can be set to chunked. This means that data can be sent over HTTP in chunks, and in many cases browser clients can begin parsing it immediately. Node.js Streams also allow for chunked reading, processing and writing of data. This affinity between Node.js Streams and chunked HTTP responses means we can serve content in a highly efficient way: instead of waiting for the server to prepare and process all the data before sending the response, the client can begin parsing some of the HTML (or, theoretically, any structured data) we've sent before the server has even finished preparing the rest.
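To see what chunked transfer looks like on the wire, here is an illustrative encoder for the HTTP/1.1 chunked format: each chunk is prefixed with its size in bytes (in hexadecimal), and a zero-size chunk marks the end of the body. The encodeChunked name is hypothetical, and you would not normally write this yourself: Node's http module typically applies this framing automatically when an HTTP/1.1 response body is written with res.write and no Content-Length header has been set.

```javascript
// Illustrative only: frame a list of string chunks as an HTTP/1.1
// chunked message body (trailers omitted).
function encodeChunked(chunks) {
  let wire = '';
  for (const chunk of chunks) {
    // A zero-size chunk would signal the end, so empty chunks are skipped.
    if (chunk.length === 0) continue;
    // Size in bytes as hex, CRLF, the chunk data, CRLF.
    wire += Buffer.byteLength(chunk).toString(16) + '\r\n' + chunk + '\r\n';
  }
  // The terminating zero-size chunk, followed by the final CRLF.
  return wire + '0\r\n\r\n';
}

console.log(JSON.stringify(encodeChunked(['<h1>', 'Hello</h1>'])));
// "4\r\n<h1>\r\na\r\nHello</h1>\r\n0\r\n\r\n"
```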

For this example we'll be using a package that provides a stream of Hacker News content, called hn-latest-stream.

Let's work in our fastify-web-server folder from where we left off in the "Using Templates with Fastify" section.

Making sure that fastify-web-server is the current working directory, run the following command to install hn-latest-stream:

npm install hn-latest-stream

Now we'll create a new routes folder called routes/articles and add an index.js file to it:

cd routes

node -e "fs.mkdirSync('articles')"

cd articles

node -e "fs.openSync('index.js', 'w')"

cd ..

cd ..

The contents of routes/articles/index.js should be as follows:

'use strict'

const hnLatestStream = require('hn-latest-stream')

module.exports = async (fastify, opts) => {

fastify.get('/', async (request, reply) => {

const { amount = 10, type = 'html' } = request.query

if (type === 'html') reply.type('text/html')

if (type === 'json') reply.type('application/json')

return hnLatestStream(amount, type)

})

}

The hnLatestStream function accepts two parameters, amount and type, and returns a Node.js Stream. The amount is the number of most recent Hacker News articles we want to load, and the type describes whether the stream should send HTML chunks or JSON chunks. In our route handler, we set a default amount of 10 and a default type of 'html', while also allowing these to be overridden by query string arguments. Depending on the desired type, we also use the reply.type method to set the correct Content-Type HTTP header for the content.
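One detail worth keeping in mind: query string values always arrive as strings, so ?amount=250 yields '250' rather than the number 250, and the destructuring defaults only apply when a key is absent. The sketch below mirrors the handler's parsing with Node's WHATWG URL API; parseArticlesQuery and the CONTENT_TYPES map are hypothetical, and Fastify's own query string parser may differ in edge cases such as repeated keys.

```javascript
// Content types for the two supported output formats.
const CONTENT_TYPES = new Map([
  ['html', 'text/html'],
  ['json', 'application/json'],
]);

// Hypothetical helper mirroring the articles route handler's query handling.
function parseArticlesQuery(requestUrl) {
  const query = new URL(requestUrl, 'http://localhost').searchParams;
  // Defaults only apply when the key is missing; present values stay strings.
  const amount = query.has('amount') ? query.get('amount') : 10;
  const type = query.has('type') ? query.get('type') : 'html';
  // Any other type gets no Content-Type, just like the two if statements in the handler.
  return { amount, type, contentType: CONTENT_TYPES.get(type) };
}

console.log(parseArticlesQuery('/articles?type=json&amount=250'));
// { amount: '250', type: 'json', contentType: 'application/json' }
```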

Returning the stream (the result of calling hnLatestStream) from the route handler instructs Fastify to safely pipe the stream to the response. The reply.send method can also be passed a stream and Fastify behaves in the same way - by piping the stream as the HTTP response.

Let's see it in action. First we need to start the server with the following command:

npm run dev

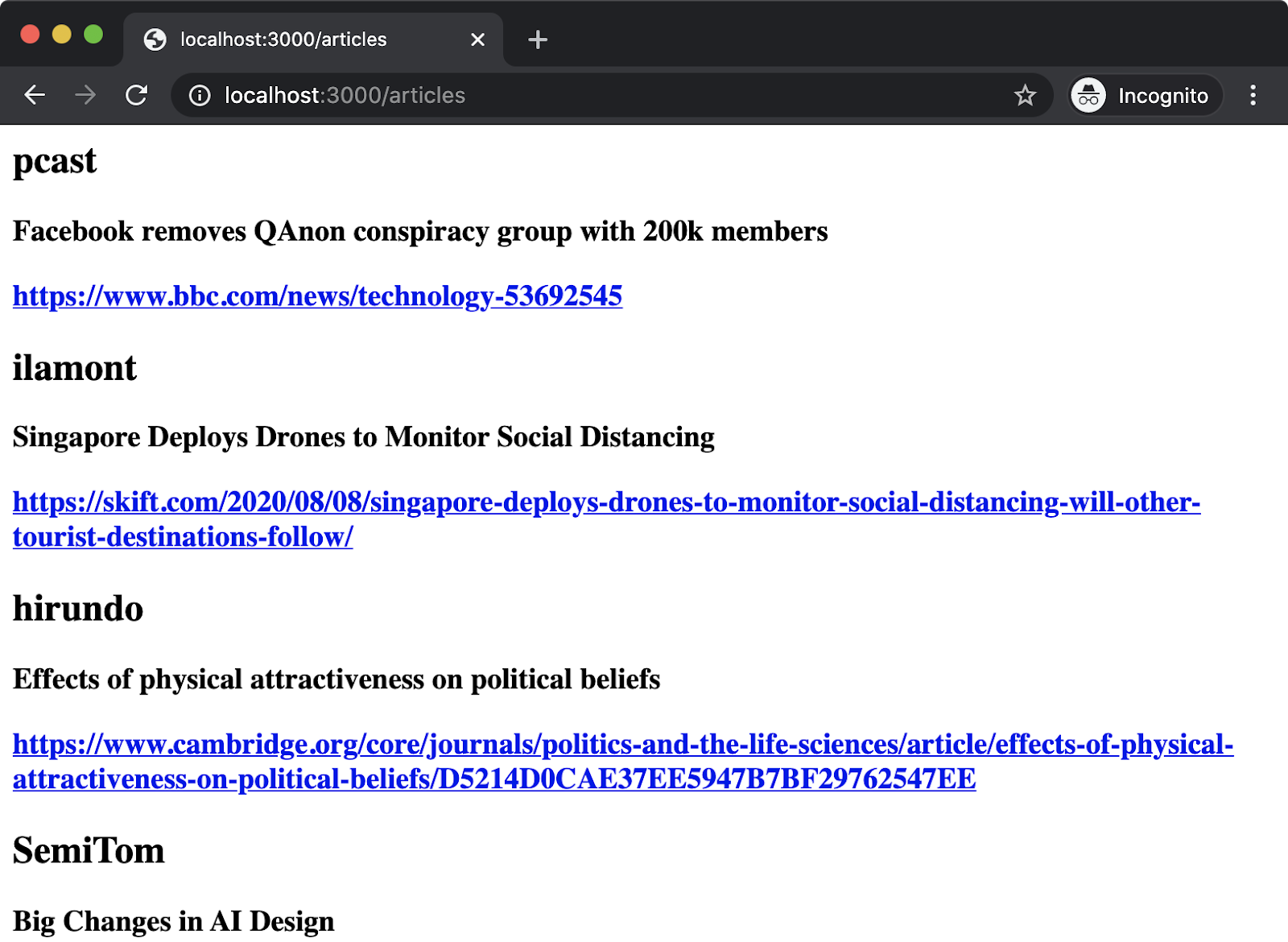

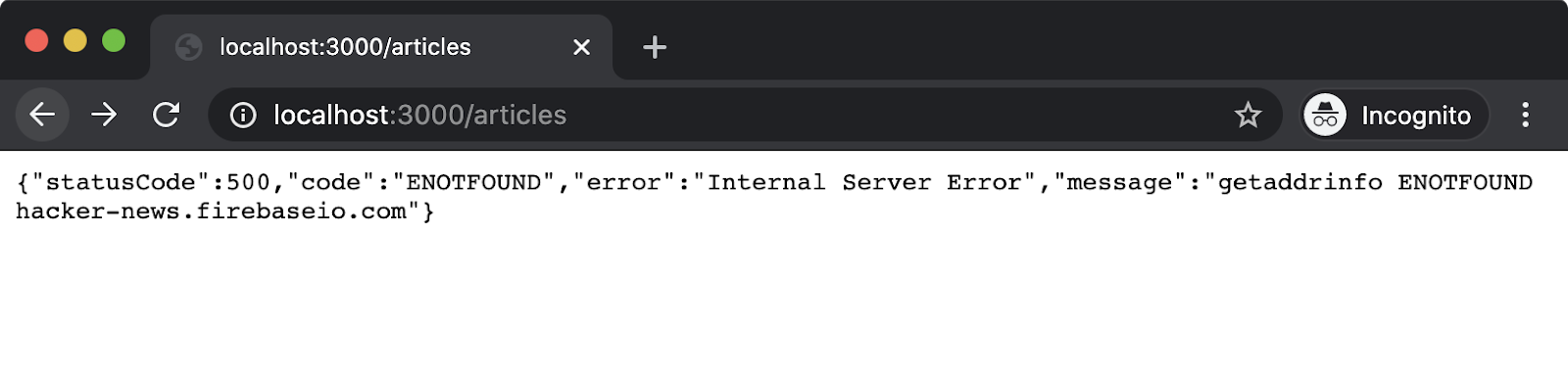

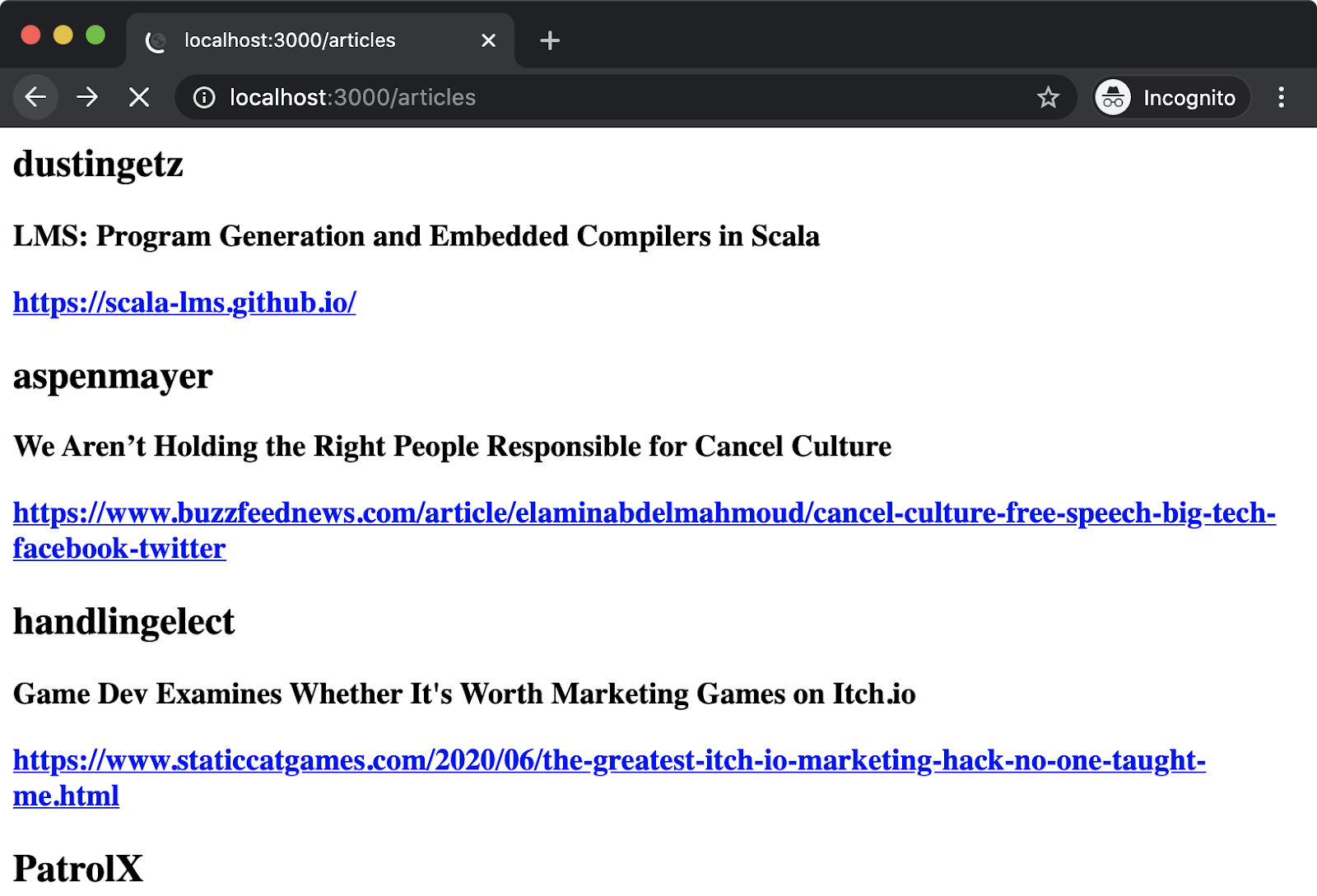

Next we can navigate to http://localhost:3000/articles, and we should see output similar to, but not the same as, the following:

Streaming Content with Fastify (2)

This will load different articles each time, and there should be ten articles in total. The hn-latest-stream module uses the Hacker News API to fetch the content. It first has to look up the latest story IDs, then make a separate HTTP request for each ID to fetch the article, and then push either JSON or HTML content to the stream that it returns. As a result, it should be easy to observe the browser parsing and rendering the content incrementally: there's a visible delay between each article appearing. This shows the power of streams for long-running tasks. The server hasn't retrieved all the data yet, but we can still fill the above-the-fold area (the part of the page that's first seen when a page loads) with the latest articles while more articles continue to load on the server and are then sent to the client to be displayed beneath the fold.
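To experiment with incremental delivery without depending on the Hacker News API, a stand-in stream can imitate the same behavior: one item at a time, with a pause before each to mimic a separate HTTP request per article. fakeLatestStream is hypothetical; it only copies hn-latest-stream's (amount, type) call shape, and its HTML and JSON output formats are invented for this sketch. It assumes Node.js 15 or later for timers/promises.

```javascript
const { Readable } = require('stream');
const { setTimeout: sleep } = require('timers/promises');

// Hypothetical stand-in for hn-latest-stream: same (amount, type) call shape,
// invented output, and an artificial delay before each article.
function fakeLatestStream(amount, type, delayMs = 200) {
  async function* articles() {
    // Number() because query string values arrive as strings.
    for (let id = 1; id <= Number(amount); id++) {
      await sleep(delayMs); // pretend to fetch one article over HTTP
      const article = { id, title: `Article ${id}` };
      yield type === 'json'
        ? JSON.stringify(article) + '\n'
        : `<p>${article.title}</p>\n`;
    }
  }
  // Each yielded string becomes one chunk, readable as soon as it is produced.
  return Readable.from(articles());
}
```

Returning fakeLatestStream(amount, type) from the Fastify handler in place of hnLatestStream(amount, type) should show the same step-by-step rendering while offline.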

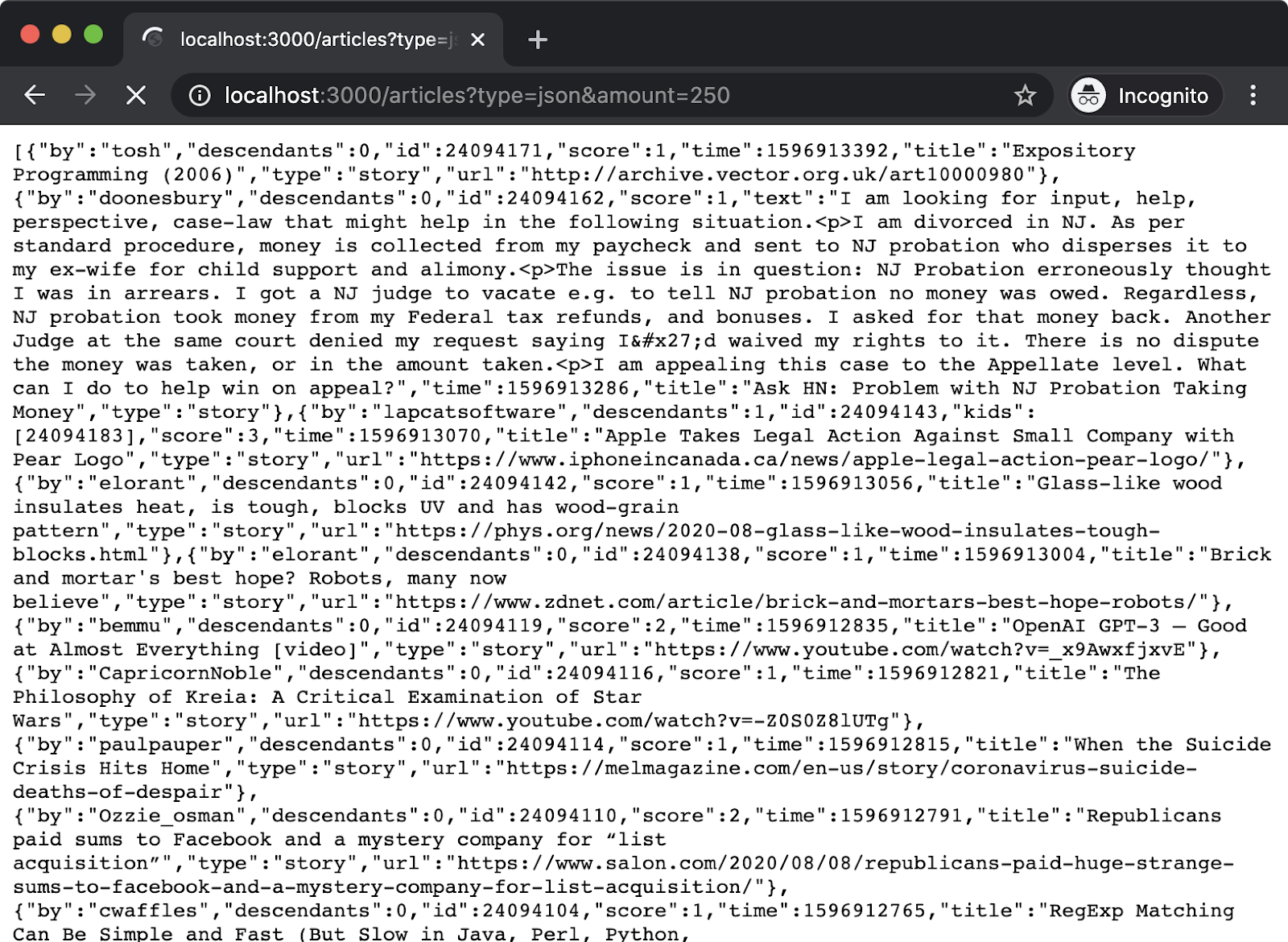

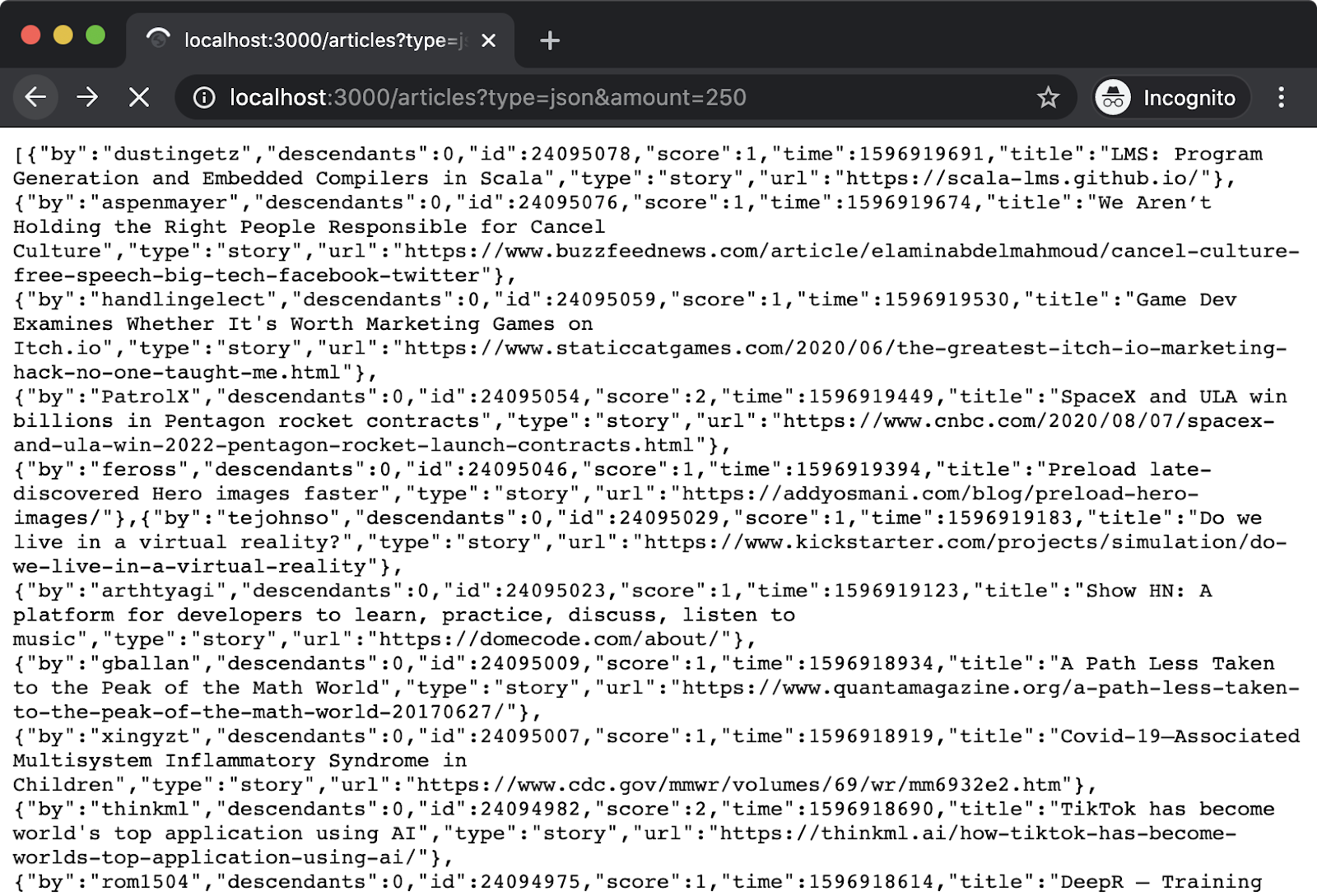

Let's try out the query string parameters as well. In the browser let's try navigating to the URL: http://localhost:3000/articles?type=json&amount=250. This will load the JSON data for the latest 250 Hacker News stories. We should again be able to observe short delays between each JSON object being received by the browser. When navigating to this URL we should see something similar to the following, but with different content:

In the next chapter, we'll look at creating JSON services in more detail. This section was more about illustrating how streams can be a useful tool in constrained scenarios, and how to use streams with the Fastify framework.

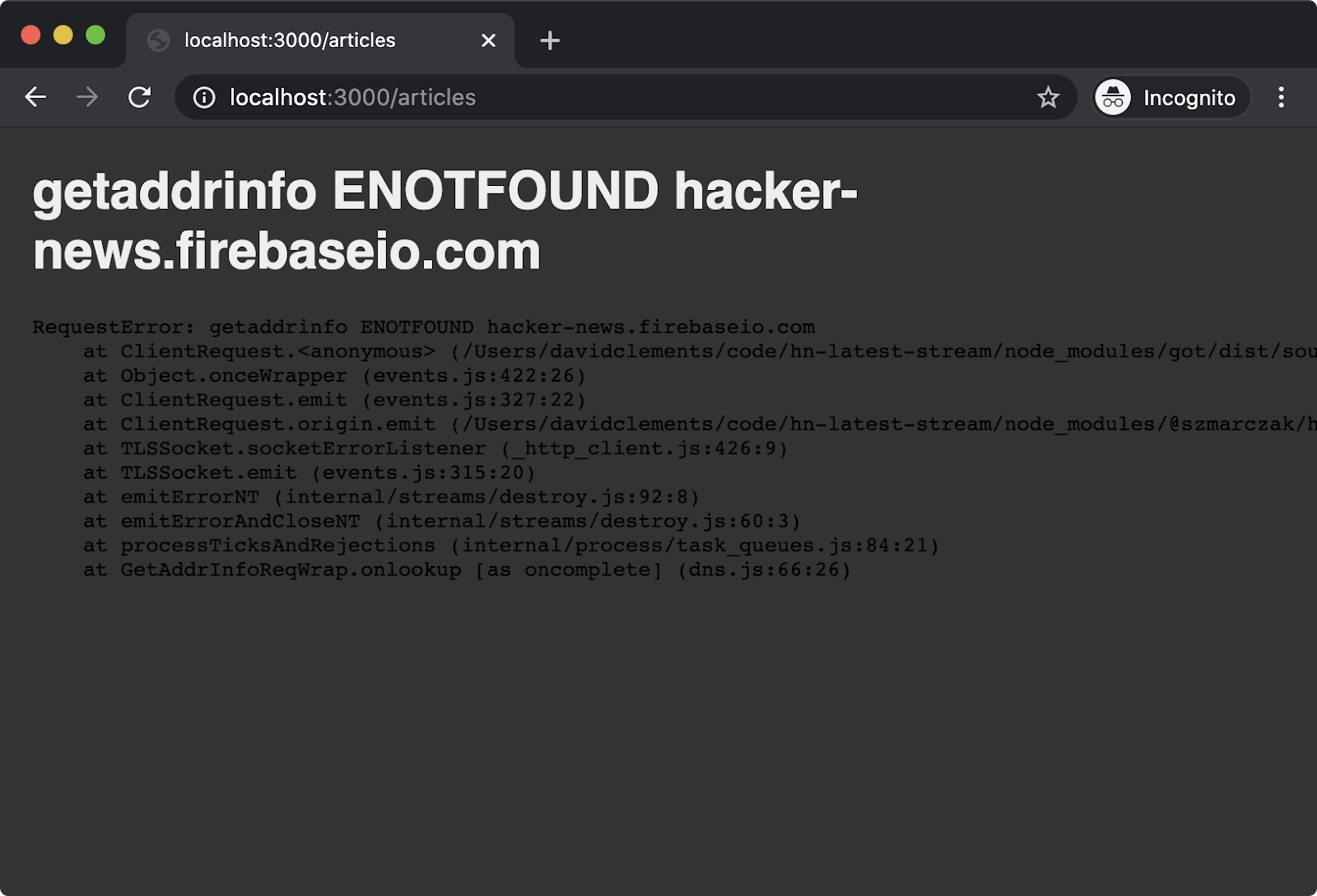

One final note about error handling before wrapping up: because Fastify handles the stream for us, any errors in the stream will be handled and propagated. If we disconnect from the Internet and then attempt to access http://localhost:3000/articles, we'll see something like the following:

We caused an error in the stream, which Fastify then handled and responded to our request with a 500 status code along with information about the error.
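For comparison with the manual approach the Express sections require, here is a rough approximation, using only Node's core http and stream modules, of the kind of work Fastify does when a route handler returns a stream: pipe it to the response, and if the stream fails before anything has been sent, reply with a 500 instead. This is not Fastify's actual implementation; sendStream and failingStream are hypothetical names, and the error body only resembles Fastify's default JSON error shape.

```javascript
const http = require('http');
const { Readable, finished } = require('stream');

// Rough approximation (not Fastify's code) of serving a returned stream.
function sendStream(res, source) {
  // Copy data into the response; pipe() ends res when source ends normally.
  source.pipe(res);
  finished(source, (err) => {
    if (!err) return;
    if (!res.headersSent) {
      // Nothing was written yet, so a proper error response is still possible.
      res.statusCode = 500;
      res.setHeader('Content-Type', 'application/json');
      res.end(JSON.stringify({ statusCode: 500, error: 'Internal Server Error', message: err.message }));
    } else {
      // Part of the body is already out; all we can do is abort the response.
      res.destroy();
    }
  });
}

// Hypothetical stream that fails before producing any data,
// similar to fetching articles while offline.
function failingStream() {
  return new Readable({
    read() {
      this.destroy(new Error('simulated network failure'));
    },
  });
}

// '/fail' exercises the error path; any other URL streams two chunks.
const server = http.createServer((req, res) => {
  sendStream(res, req.url === '/fail' ? failingStream() : Readable.from(['a', 'b']));
});
```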

In the next and final section, we'll discuss how to use streams with Express, which will require more glue around error handling.

Node.js Streams is a large topic. A chapter is dedicated to streams in the course for the companion certification to Node.js Services Development: the Node.js Application Development (LFW211) course.

Streaming Content with Express (1)

Express does not have native support for streams in the same way that Fastify does. However, Express decorates the native HTTP ServerResponse object (the response object, res), and res is a writable stream, so we can essentially roll our own streaming response. There are some complexities around this, though. For instance, when one stream is piped to another, the destination stream is automatically ended when the source stream has ended. But in the case of an error, we don't want to end the response; we instead need to propagate the error back into Express so it can handle it centrally according to the configuration in app.js.

Making sure that our current working directory is the express-web-server folder, as we left it in the "Serving Static Content and Using Templates with Express" section, let's install hn-latest-stream:

npm install hn-latest-stream

Now, we'll create a new route:

cd routes

node -e "fs.openSync('articles.js', 'w')"

cd ..

We need to register the router we'll be creating for routes/articles.js in the app.js file.

Near the top of the file, there are currently two routers imported:

var indexRouter = require('./routes/index');

var helloRouter = require('./routes/hello');

We need to add an articlesRouter to these, so that small section of app.js should be updated to look as follows:

var indexRouter = require('./routes/index');

var helloRouter = require('./routes/hello');

var articlesRouter = require('./routes/articles');

Around the middle of app.js the routers are registered. This currently looks as follows:

app.use('/', indexRouter);

app.use('/hello', helloRouter);

We need to add the articlesRouter mounted at the /articles route. That section should be updated to look like so:

app.use('/', indexRouter);

app.use('/hello', helloRouter);

app.use('/articles', articlesRouter);

The content of routes/articles.js should be as follows:

var express = require('express');

var router = express.Router();

var hnLatestStream = require('hn-latest-stream')

var finished = require('stream').finished

router.get('/', function(req, res, next) {

const { amount = 10, type = 'html' } = req.query

if (type === 'html') res.type('text/html')

if (type === 'json') res.type('application/json')

const stream = hnLatestStream(amount, type)

stream.pipe(res, {end: false})

finished(stream, (err) => {

if (err) {

next(err)

return

}

res.end()

})

});

module.exports = router;

The logic of the route handler is the same as in the previous section. We have a default amount of 10 and a default type of 'html', although these can be overridden by the query string of the incoming request URL. We also set the Content-Type HTTP header based on the type.

From there the code is slightly more complicated because we have to manually propagate errors and handle the dance of sending data without ending the response too soon.

The stream.pipe(res, {end: false}) line tells the stream (our Hacker News stream) to write all of its data to the res object (which is also a stream). The second parameter, an object with a property named end set to false, prevents pipe from performing its default behavior of ending the destination stream (res) when the source stream (stream) has ended. This is important because it leaves the decision of how to finish the response to our finished callback: if there is an error in the source stream, we pass it to next so that Express can send an appropriate error response, and only when the source completes successfully do we call res.end().
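The default that {end: false} switches off can be observed with plain streams. In this sketch, pipeAndCheck and collector are hypothetical names, and a Writable that collects chunks stands in for res. The same two-chunk source is piped once with the default behavior and once with end set to false, and we check whether the destination was ended.

```javascript
const { Readable, Writable, finished } = require('stream');

// A Writable that records what it receives; it stands in for res here.
function collector() {
  const sink = new Writable({
    write(chunk, encoding, callback) {
      sink.received.push(chunk.toString());
      callback();
    },
  });
  sink.received = [];
  return sink;
}

// Pipe a short source into a collector and report whether pipe() ended it.
function pipeAndCheck(end) {
  return new Promise((resolve) => {
    const source = Readable.from(['a', 'b']);
    const sink = collector();
    source.pipe(sink, { end });
    finished(source, () => {
      // Wait a turn of the event loop so pipe() has reacted to 'end'.
      setImmediate(() => resolve({ ended: sink.writableEnded, data: sink.received.join('') }));
    });
  });
}

(async () => {
  console.log(await pipeAndCheck(true)); // { ended: true, data: 'ab' }
  console.log(await pipeAndCheck(false)); // { ended: false, data: 'ab' }
})();
```

With end left at its default, the sink is ended as soon as the source finishes; with end set to false it stays open, which is what lets the route handler choose between res.end() and next(err).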

Streaming Content with Express (2)